- [2024-8-18] We have completed the organization of the repository contents and will continue to update this work.

- [2024-7-20] We released our project website here.

We introduce HDMapII, a novel approach to information interaction for connected autonomous vehicles empowered by high-definition (HD) maps. HDMapII consists of five main components: the database for HD map information management, the information interaction modes between autonomous vehicles and the HD map, the communication methods for the various types of dynamic information within the HD map information database, the communication protocol applied in the different interaction modes, and the approach to achieving a complete HD map information interaction process.

In the RoadRunner simulator, HDMapII uses open-source map data, such as OpenStreetMap (OSM), to rebuild the real road environment (including lane markings, curbs, and the surrounding built environment) and to generate HD map data for storage in the HD map information database. These scenario data are then used in the different information interaction modes and allocated to the corresponding communication methods based on the characteristics of the information itself. Finally, in autonomous driving scenarios, all information interactions between autonomous vehicles and HD maps are conducted over the MQTT protocol. As the process progresses, newly generated HD map data can be written back to the HD map information database for potential future interactions.

We systematically design the content and exchange format for dynamic information in HD Maps, focusing on two key components: Road Real-time Information (RRTI) and Vehicle Dynamic Information (VDI). The following figure illustrates the primary components of this dynamic information. For additional details, please refer to this Standard (an English version will come later).

We propose three information interaction modes tailored to the various data terminals within HD Maps: Vehicle-to-Cloud mode, Vehicle-to-Vehicle mode and Cloud-to-Vehicle mode.

In Vehicle-to-Cloud mode, the information sent from the vehicle terminal to the cloud is mainly VDI, including vehicle status information, temporary traffic sign information, and temporary event information. The sender is any autonomous vehicle, and the receiver is the HD Map cloud database terminal.

In Vehicle-to-Vehicle mode, the information exchanged between vehicles is mainly VDI, including vehicle status information and temporary road object information. The sender and the receiver are different autonomous vehicles.

In Cloud-to-Vehicle mode, the information sent from the cloud to the vehicle is mainly RRTI, including traffic signal light information, traffic flow information, traffic control information, traffic event information, and road surface object information. The sender is the HD Map cloud database terminal, and the receivers are any autonomous vehicles that require the data.
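The three modes can be summarized as a small lookup table. The following Python sketch is illustrative only and is not taken from the repository: the names `InteractionMode`, `MODES`, and `modes_for` are hypothetical, while the senders, receivers, and information types are copied from the mode descriptions above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InteractionMode:
    """One HDMapII interaction mode (hypothetical helper type)."""
    sender: str
    receiver: str
    category: str      # "VDI" or "RRTI"
    info_types: tuple  # information types named in the mode description


MODES = {
    "Vehicle-to-Cloud": InteractionMode(
        sender="autonomous vehicle",
        receiver="HD Map cloud database terminal",
        category="VDI",
        info_types=("vehicle status", "temporary traffic sign", "temporary event"),
    ),
    "Vehicle-to-Vehicle": InteractionMode(
        sender="autonomous vehicle",
        receiver="autonomous vehicle",
        category="VDI",
        info_types=("vehicle status", "temporary road object"),
    ),
    "Cloud-to-Vehicle": InteractionMode(
        sender="HD Map cloud database terminal",
        receiver="autonomous vehicle",
        category="RRTI",
        info_types=("traffic signal light", "traffic flow", "traffic control",
                    "traffic event", "road surface object"),
    ),
}


def modes_for(info_type):
    """Return the names of all modes that carry the given information type."""
    return [name for name, mode in MODES.items() if info_type in mode.info_types]


print(modes_for("vehicle status"))
print(modes_for("traffic control"))
```

With this table, vehicle status information resolves to both Vehicle-to-Cloud and Vehicle-to-Vehicle, while traffic control information resolves only to Cloud-to-Vehicle.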

The communication protocol used for information exchange in all modes is MQTT. Refer to the diagram below for the communication specifications of each interaction mode.
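Because every mode runs over MQTT, the routing of a message is decided by its topic and by the topic filters each terminal subscribes to. The sketch below is an assumption-laden illustration in Python, not the project's actual topic layout: the `hdmap/<mode>/<info_type>/<sender_id>` scheme and the helper names `build_topic` and `topic_matches` are hypothetical. The matching rules themselves follow standard MQTT wildcards, where `+` matches exactly one level and a trailing `#` matches all remaining levels (topics starting with `$` are not handled here).

```python
def build_topic(mode, info_type, sender_id):
    """Compose a topic under a hypothetical hdmap/<mode>/<info>/<sender> scheme."""
    return "/".join(["hdmap", mode, info_type, sender_id])


def topic_matches(topic_filter, topic):
    """Return True if an MQTT topic filter matches a concrete topic name."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: valid only as the last level of a filter,
            # and it also matches the parent level itself.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    # Without a trailing '#', both must have the same number of levels.
    return len(filter_levels) == len(topic_levels)


# A vehicle (hypothetical id "AD1") reports a road object to other vehicles.
topic = build_topic("v2v", "road_object", "AD1")

# Another vehicle listens to road objects from any sender.
print(topic_matches("hdmap/v2v/road_object/+", topic))

# The cloud terminal listens to everything sent in Vehicle-to-Cloud mode.
print(topic_matches("hdmap/v2c/#", topic))
```

Here the first check succeeds and the second fails, since the message was published under the `v2v` branch rather than `v2c`.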

Messaging Service Levels:

- QoS 0 (At most once): Messages are delivered at most once and are not guaranteed to reach the recipient.

- QoS 1 (At least once): Messages are delivered at least once. Each message requires an acknowledgement, and duplicates may occur when a message is retransmitted.

- QoS 2 (Exactly once): Messages reach the receiver exactly once, with no duplicates. Uniqueness is guaranteed through a two-stage acknowledgement process.
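The difference between the three levels is easiest to see on a lossy link. The following Python sketch is a simplified simulation, not an MQTT client and not code from this repository: `ScriptedLink` and `publish` are hypothetical names, and each call to `send()` stands for one packet (PUBLISH, PUBACK, PUBREC, PUBREL, or PUBCOMP) that either arrives or is lost according to a scripted sequence.

```python
class ScriptedLink:
    """Lossy link: each transmission consumes the next scripted outcome.

    Once the script is exhausted, every further transmission succeeds.
    """

    def __init__(self, outcomes):
        self.outcomes = list(outcomes)

    def send(self):
        return self.outcomes.pop(0) if self.outcomes else True


def publish(qos, link):
    """Return how many times the receiving application sees the message."""
    delivered = 0
    if qos == 0:
        # Fire and forget: a lost PUBLISH is never retried.
        if link.send():
            delivered += 1
        return delivered
    if qos == 1:
        while True:
            if link.send():        # PUBLISH arrives
                delivered += 1     # receiver does not deduplicate
                if link.send():    # PUBACK arrives, sender stops
                    return delivered
            # No PUBACK seen: the sender retransmits the PUBLISH.
    if qos == 2:
        seen = False               # receiver remembers the packet id
        while True:                # stage 1: PUBLISH / PUBREC
            if link.send():        # PUBLISH arrives
                if not seen:
                    seen = True
                    delivered += 1
                if link.send():    # PUBREC arrives
                    break
        while True:                # stage 2: PUBREL / PUBCOMP
            if link.send() and link.send():
                return delivered
    raise ValueError("qos must be 0, 1, or 2")


print(publish(0, ScriptedLink([False])))        # PUBLISH lost
print(publish(1, ScriptedLink([True, False])))  # PUBACK lost
print(publish(2, ScriptedLink([True, False])))  # PUBREC lost
```

In this model the QoS 0 message is lost (0 deliveries), the QoS 1 message is delivered twice because the lost acknowledgement triggers a retransmission, and the QoS 2 message is delivered once because the receiver recognizes the retransmitted packet.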

To test our proposed information interaction methods, we designed three scenarios representing common real-world traffic situations and used them to evaluate the effectiveness of the information exchange process. The scenarios are named Pedestrian Ghost Probe, Unknown Object on the Road, and Lane-scale Traffic Control. Each scenario includes the following components: autonomous vehicles (with communication capability), ordinary vehicles (without communication capability), dynamic objects (such as pedestrians), and static objects (such as unknown objects and traffic signs).

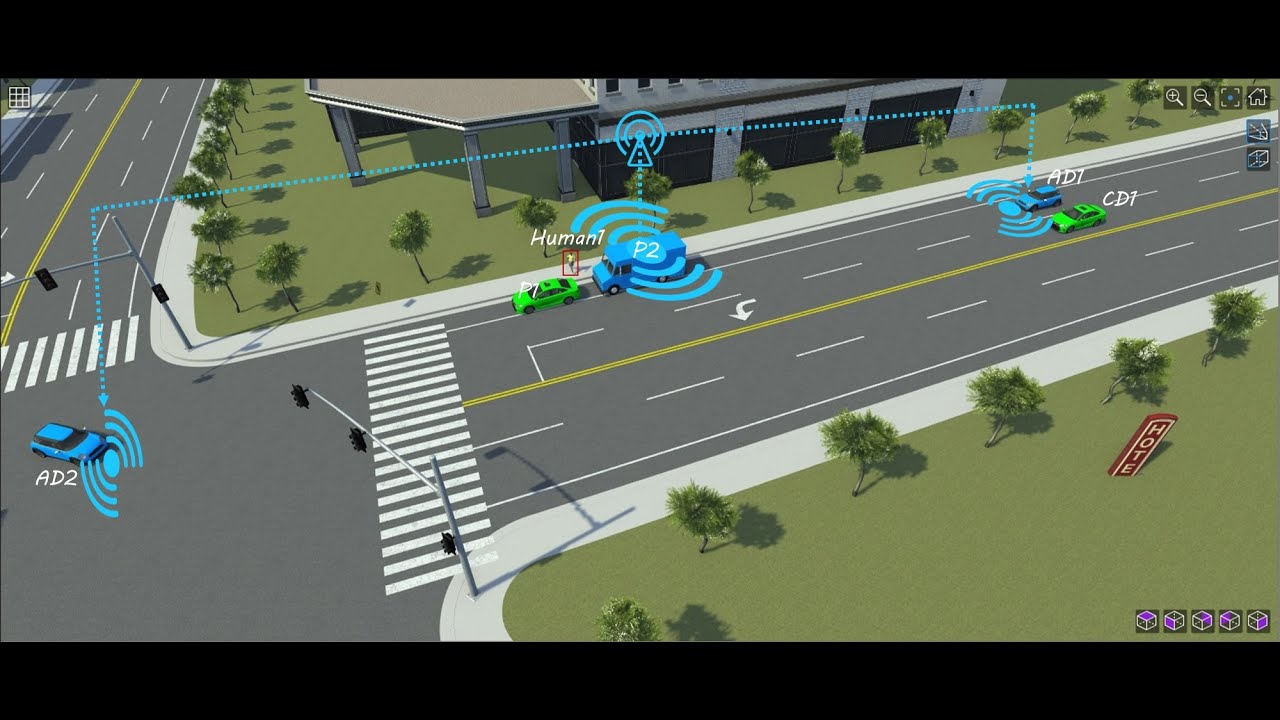

Pedestrian Ghost Probe Scenario

- Participants: 3 Autonomous Vehicles, 2 Ordinary Vehicles, 1 Pedestrian

- Information interaction mode: Vehicle-to-Vehicle

- Exchange information: VDI (Pavement Object)

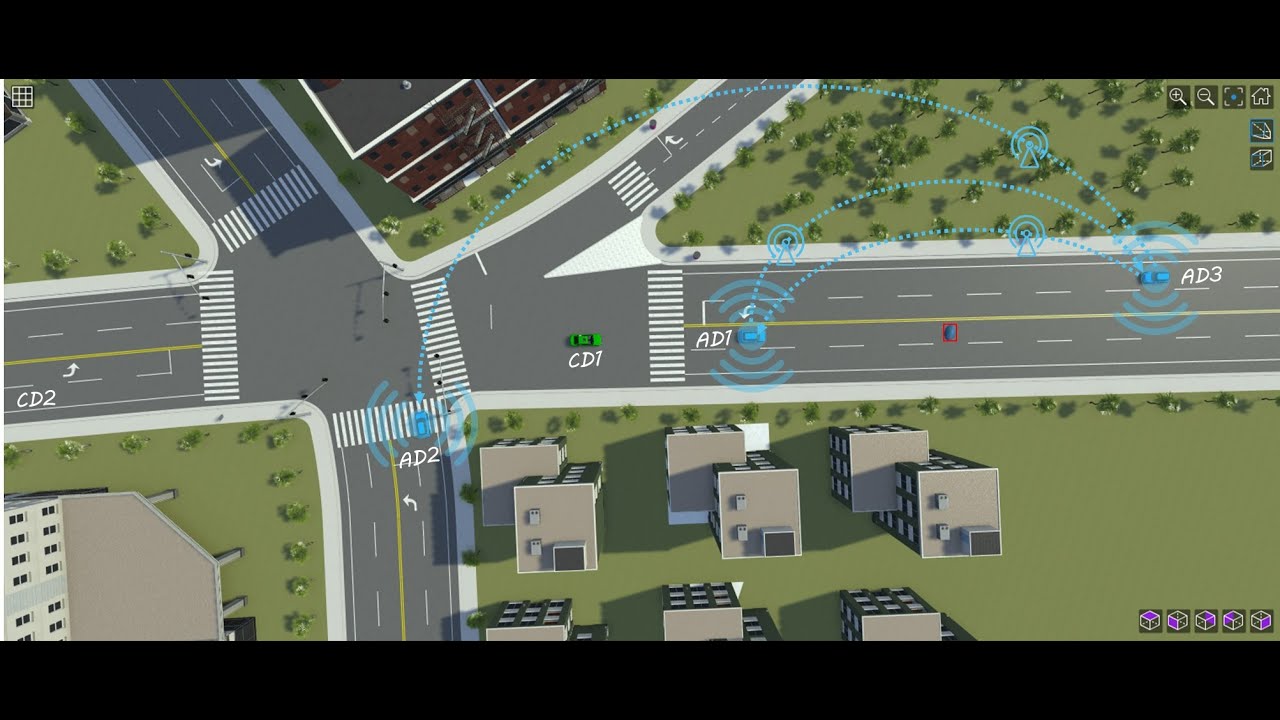

Unknown Object Scenario

- Participants: 3 Autonomous Vehicles, 2 Ordinary Vehicles, 1 Static Object

- Information interaction mode: Vehicle-to-Vehicle

- Exchange information: VDI (Pavement Object)

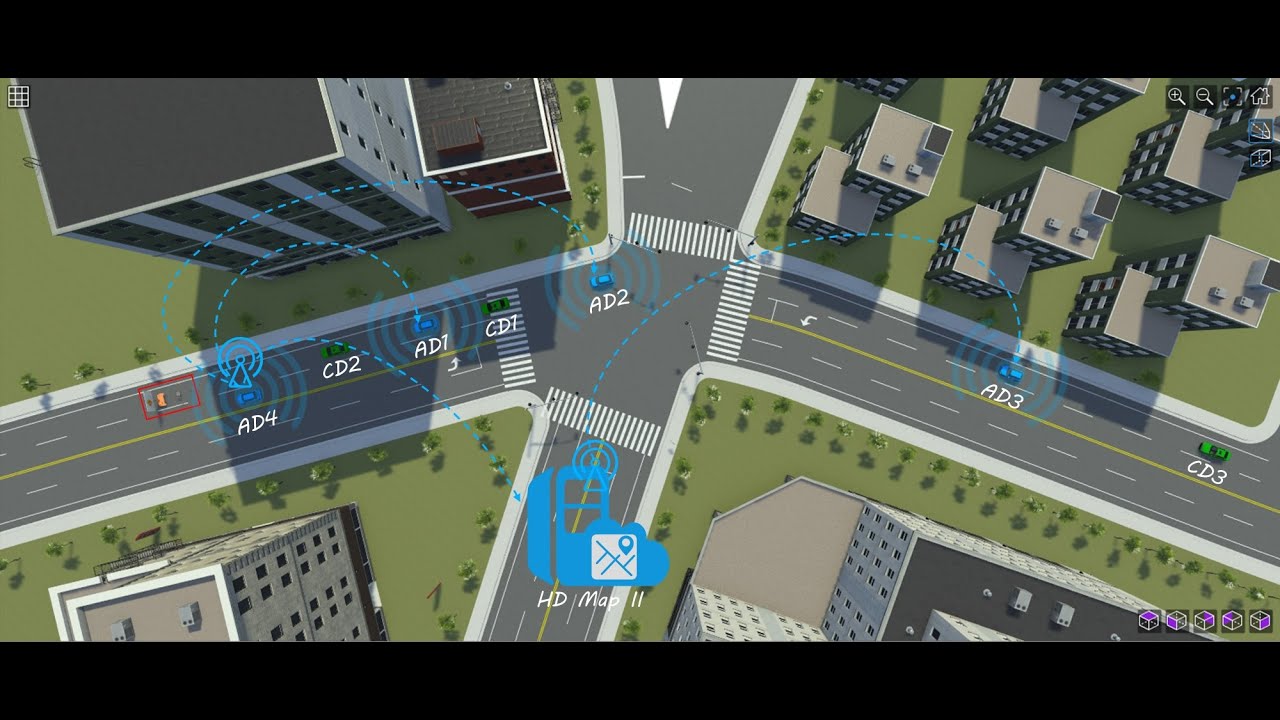

Lane-scale Traffic Control Scenario

- Participants: 4 Autonomous Vehicles, 3 Ordinary Vehicles, 1 Traffic Control Sign

- Information interaction mode: Vehicle-to-Vehicle, Vehicle-to-Cloud, Cloud-to-Vehicle

- Exchange information: VDI (Temporary Object), RRTI (Traffic Control)

The simulation code is available at ./code/PGPS_MatlabCode, ./code/UOS_MatlabCode, and ./code/LSTCS_MatlabCode (run in MATLAB R2024a).

The scenario design code is available at ./code/Scenarios (built with RoadRunner R2024a).

We conduct closed-loop tests in MATLAB. The results show that HDMapII provides prior information to connected autonomous vehicles, enabling them to make timely decisions. In contrast, ordinary vehicles, which lack any means of information interaction, may spend more time on their journeys because they cannot respond promptly to unexpected situations.

- MATLAB R202Xa (keep the release consistent with the RoadRunner version, for example MATLAB R2024a with RoadRunner R2024a)

- RoadRunner R202Xa

- PostgreSQL 16

- roadrunner (Connect MATLAB and RoadRunner to control and analyze simulations)

- ThingSpeak (An IoT analytics platform service that allows you to aggregate, visualize and analyze live data streams in the cloud)

- OpenStreetMap (Static map data source)

- Simulink (Optional, only for further analysis)

Static map data is downloaded from OpenStreetMap. The experimental area includes three roads in Shanghai, China: Zhulin Road, Xiangcheng Road, and Fushan Road.

Raw map data in OpenDRIVE format is collected from OpenStreetMap. The raw data is imported and preprocessed using MATLAB's Automated Driving Toolbox. Three major intersecting roads within the target area are extracted, and the road environment in that area is recreated. The processed data is then exported to RoadRunner for simulation modeling of realistic traffic scenarios.

A high-definition map information management database is created in PostgreSQL to store the information. The database creation code is available here.

In RoadRunner R2024a, the traffic elements in the area are simulated and recreated based on Baidu Street View Maps. Then, according to the design requirements of the three typical autonomous driving scenarios, all necessary elements are added to the simulation scene. The final scenarios are available here.

- Configure the connection with RoadRunner in MATLAB.

- Establish a secure connection from MATLAB to an MQTT broker and exchange messages with it.

- The code for each scenario (PGPS, UOS, LSTCS) integrates the information interaction methods applied in the specific scenario.

- To run a simulation experiment, execute the scripts in the following order (the PGPS experiment is used as the example):

  1. step1.m -> step2_2.m -> step2.m

  2. PGPS_AD1_V2V_Receive_final.m (Configure the information receiving port)

  3. PGPS_interaction_p1ad1_2476.m (Information interaction simulation)

  4. step4Velocity2Time_PGPS.m (Speed-time analysis of the actor)

  5. RouteOutput_PGPS.m & RouteOutputIndividually_PGPS.m (Path trajectory analysis of the actor)

This project is released under the Apache 2.0 license.