LLMs, Agents, AI Apps, and Team—In One Place, Ready to Use and Expand

Weam is an open source platform that helps teams adopt AI systematically. It includes a complete, production-ready stack with a Next.js frontend and a Node.js/Python backend, ready to deploy and use immediately.

System Requirements: CPU with 4+ cores, 8GB+ RAM. Professional installation support available for non-technical teams.

Getting Started: Simply download or fork the repository and self-host it on your infrastructure.

Why Weam?

Modern teams need easy access to the latest AI models. Weam connects to all major LLM providers out of the box - just add your API keys and you're ready to go. Think of it as ChatGPT built specifically for teams.

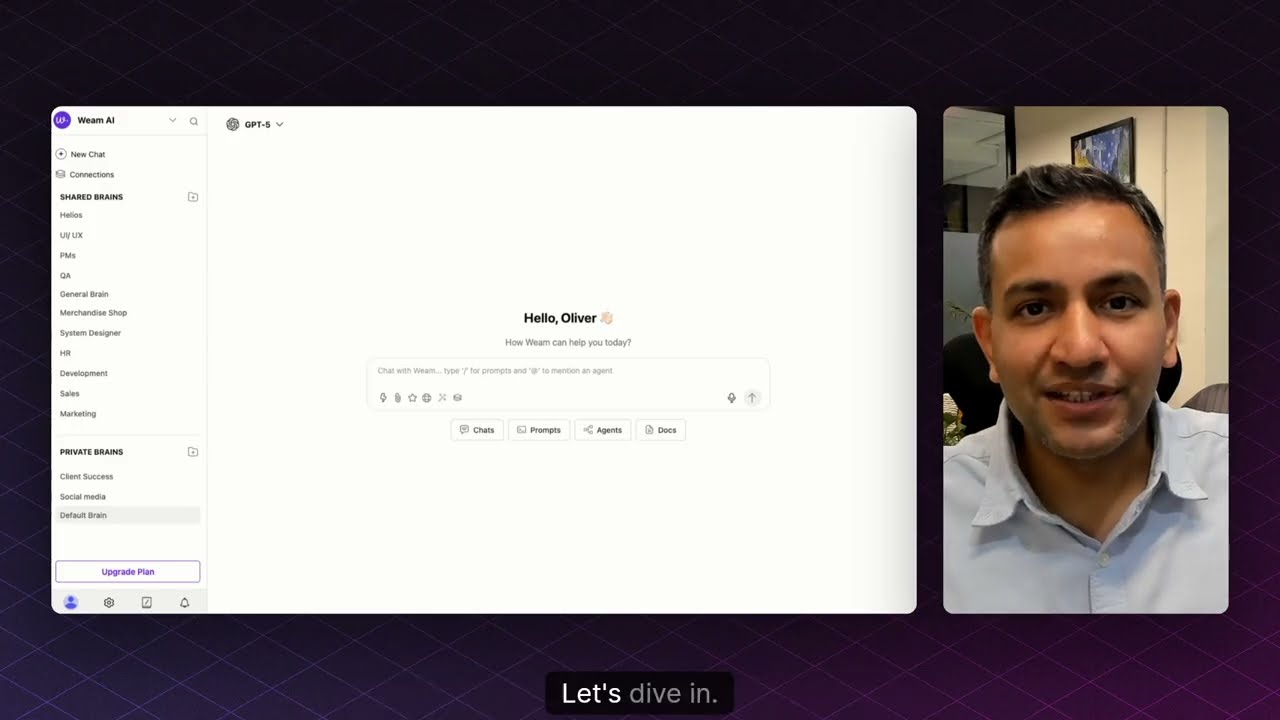

All your organization's AI interactions live in one centralized platform, organized into "Brains" (intelligent folders). These Brains contain not just chat histories, but also your custom prompts and AI agents. You can structure Brains around your departments - Marketing, Sales, Engineering, Support - whatever fits your organization.

But we didn't stop at chat. Weam is built to grow with your needs. Customize existing features or add entirely new AI applications. With the rise of AI-powered development tools like Lovable and Cursor, your team can rapidly build custom AI apps. Instead of scattering those deployments, bring these apps into Weam, where your entire team already works. We're building a library of open source AI apps you can use as-is, customize, or use as inspiration for your own.

Bottom line: You get a production-grade AI platform that's ready to deploy today and can expand as your needs grow. Completely free and open source.

Visit our comprehensive Documentation for:

- Step-by-step installation guides

- Video tutorials for all features

- Technical architecture details

- API references and more

- Multi-LLM Support: OpenAI, Anthropic, Gemini, Llama, Perplexity, DeepSeek, OpenRouter, Hugging Face, Grok, and more

- Intelligent Context: Full conversation history maintains coherent, context-aware discussions

- Local Model Support: Connect to self-hosted models (coming soon)

- Conversation Management: Fork chats to explore different directions

- Voice Input: Speak instead of type with built-in voice-to-text

- Web Scraping: Extract content from any URL to provide context to your AI

- Web Search: Real-time information retrieval during conversations

- Team Collaboration: Multiple team members can work together in the same chat

- Threaded Comments: Slack-style commenting for organized discussions

- Team Management: Add or remove team members from specific chats

- Public Sharing: Generate shareable links for external collaboration

- Custom Prompts: Create and save prompts for repeated use

- Team Library: Build a shared repository of proven prompts

- Template Collection: Access pre-built prompts for common tasks

- Smart Generation: Auto-create prompts by scraping websites

- Organization: Tag and favorite prompts for quick access

- Quick Access: Type "/" in any chat to instantly access your prompts

- Prompt Enhancement: AI-powered feature that transforms basic queries into comprehensive prompts

- Real Example: Input a website URL and basic information → receive a complete prompt with website summary and context

- Custom Instructions: Define specific behaviors and knowledge for each agent

- Knowledge Base: Upload documents to create specialized agents

- Model Selection: Choose the optimal AI model for each agent's purpose

- MCP Integration: Model Context Protocol support coming soon

- Quick Deployment: Access any agent instantly by typing "@" in chat

- Persistent Sessions: Selected agents remain active throughout conversations

- Complete Pipeline: Built-in document processing system

- Smart Processing: Documents are automatically chunked, embedded, and vectorized

- Intelligent Retrieval: User queries trigger semantic search across document chunks

- Context Injection: Relevant information is seamlessly provided to the LLM

- Agent Integration: RAG pipeline automatically processes documents uploaded to agents

- Growing Integrations: Gmail, Slack, Google Drive, and more being added regularly

- Developer Friendly: Follow our documentation to add your own MCP connections

- Multi-Workspace: Create separate environments for different teams or projects

- Brain Organization: Shared folders for teams with private areas for individual work

- Access Control: Granular permissions for chats, prompts, and agents

- Usage Analytics: Admin dashboard showing team member activity

- Team Groups: Organize users for efficient permission management
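The document-processing steps listed above (chunking, embedding, semantic retrieval, and context injection) can be illustrated with a minimal sketch. This is not Weam's implementation: the function names (`chunk_text`, `embed`, `retrieve`, `build_prompt`) are hypothetical, and a toy bag-of-words vector stands in for a real embedding model and vector store.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 40) -> list[str]:
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding: term counts. A real pipeline would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, index: list[tuple[str, Counter]], top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


def build_prompt(query: str, context_chunks: list[str]) -> str:
    """Inject the retrieved chunks into the prompt sent to the LLM."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return f"Use the context to answer.\n\nContext:\n{context}\n\nQuestion: {query}"


# Hypothetical sample documents, used only for illustration.
documents = [
    "Refunds are processed within five business days of the request.",
    "The support team is available on weekdays from nine to five.",
    "Enterprise plans include single sign-on and audit logs.",
]

# Index every chunk alongside its vector.
index = [(chunk, embed(chunk)) for doc in documents for chunk in chunk_text(doc)]

question = "How long do refunds take?"
top_chunks = retrieve(question, index, top_k=1)
prompt = build_prompt(question, top_chunks)
print(prompt)
```

In a production setup, the term-count vectors would typically be replaced by dense embeddings stored in a vector database, but the flow of chunk, embed, retrieve, and inject stays the same.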

Ready-to-use automation workflows powered by specialized APIs:

- QA Agent: Comprehensive 70-point website analysis including Google PageSpeed Insights

- Video Analyzer: Process Loom recordings by analyzing both video frames and audio to generate actionable task lists

- Sales Call Analyzer: Transform Fathom recordings into detailed call insights with customizable analysis prompts

- Proposal Generator: Input project basics and receive professionally formatted, editable multi-page proposals

- SEO Content Writer: Advanced blog creation pipeline powered by DataForSEO:

  - Start with any URL for context

  - Auto-generates business summary

  - Suggests relevant keywords based on competition analysis

  - Researches top-ranking content for each topic

  - Checks sitemap to avoid duplicate content

  - Produces comprehensive, Google-optimized articles using sophisticated 2-page prompts

- Build your own

Coming Soon:

- AI Presentation Maker: Create stunning presentations like Gamma

- AI Document Editor: Intelligent document creation and editing

- Voice Agent: AI that makes calls on your behalf

- Build Your Own: Integrate custom AI apps built with modern tools directly into Weam, creating a unified AI workspace for your entire organization.

git clone https://github.com/weam-ai/weam.git

cd weam

cp .env.example .env

bash build.sh (for macOS/Linux)

or

bash winbuild.sh (for Windows)

docker-compose up -d

- Docs: Quickstart Guide

- From Source / Cloud: Coming soon

- Setup Video: Uploading soon

- Install with Docker (2 minutes)

- Add your LLM API keys (OpenAI, Claude, etc.)

- Create your first Workspace

- Add a Brain with files or data

- Start chatting with AI

- Invite your team and scale collaboration

- Discord

- Docs

- GitHub Issues

- Email: hello@weam.ai

We welcome contributions of all kinds:

- Star this repo to show your support

- Report issues you encounter

- Suggest features on our roadmap

- Submit pull requests for improvements

- Improve documentation

- Design UI/UX improvements

- Contributor Guide: CONTRIBUTING.md

- Self-hosted: Your data never leaves your infrastructure

- Role-based access: Granular permissions and workspace isolation

- Audit logs: Complete activity tracking

- API security: OAuth 2.0 and JWT authentication
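To make the JWT part of the list above concrete, here is a minimal sketch of how an HS256-signed JSON Web Token is issued and verified, using only the Python standard library. It is an illustration under stated assumptions, not Weam's code: the helper names (`sign_jwt`, `verify_jwt`), the secret, and the claims are hypothetical, and Weam's actual algorithm and token contents are not described in this README.

```python
import base64
import hashlib
import hmac
import json
import time


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    """Decode base64url, restoring the stripped padding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_jwt(payload: dict, secret: bytes) -> str:
    """Build header.payload.signature with an HMAC-SHA256 signature."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{b64url(signature)}"


def verify_jwt(token: str, secret: bytes) -> dict:
    """Return the claims if the signature and expiry are valid, else raise ValueError."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    header = json.loads(b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    payload = json.loads(b64url_decode(payload_b64))
    if payload.get("exp", float("inf")) < time.time():
        raise ValueError("token expired")
    return payload


# Hypothetical secret and claims, for illustration only.
SECRET = b"demo-secret"
token = sign_jwt({"sub": "user-1", "role": "admin", "exp": int(time.time()) + 3600}, SECRET)
claims = verify_jwt(token, SECRET)
print(claims["sub"], claims["role"])
```

A real deployment would normally rely on a maintained JWT library and load the secret or key pair from configuration rather than hard-coding it.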

Weam AI is licensed under a modified Apache License 2.0 with additional terms to:

- Protect fair use and encourage contributions

- Ensure commercial use guidelines

- Maintain open source principles

See LICENSE for full terms.

SaaS-style deployment of our open source project requires a commercial license. Learn more about commercial licensing and pricing.

Need help setting up?

Installation Support — Get Weam’s production-ready AI platform running fast with our professional installation services, so your team can focus on transforming workflows with AI.

- Smart Brain Memory: Turn your Brain's chat history into organizational memory that LLMs can optionally access for smarter, context-aware responses.

- Expanded MCP Ecosystem: More MCP integrations coming soon, plus the ability to connect any MCP service directly to your agents.

- Advanced Agent Capabilities: More ready-to-use Pro agents and AI apps.

Built with ❤️ by the Weam AI community

The open source AI platform that brings teams and AI together.

⭐ Star us on GitHub • Join our Discord • Visit our Website