Open-source framework for developing real-time multimodal conversational AI agents.

The AI Agent SDK is a Python framework built on top of the VideoSDK Python SDK that enables AI-powered agents to join VideoSDK rooms as participants. This SDK serves as a real-time bridge between AI models (like OpenAI and Gemini) and your users, facilitating seamless voice and media interactions.

| # | Feature | Description |
|---|---|---|
| 1 | 🎤 Real-time Communication (Audio/Video) | Agents can listen, speak, and interact live in meetings. |
| 2 | 📞 SIP & Telephony Integration | Seamlessly connect agents to phone systems via SIP for call handling, routing, and PSTN access. |
| 3 | 🧍 Virtual Avatars | Add lifelike avatars to enhance interaction and presence using Simli. |
| 4 | 🤖 Multi-Model Support | Integrate with OpenAI, Gemini, AWS NovaSonic, and more. |
| 5 | 🧩 Cascading Pipeline | Integrates with different providers of STT, LLM, and TTS seamlessly. |
| 6 | 🧠 Conversational Flow | Manages turn detection and VAD for smooth interactions. |
| 7 | 🛠️ Function Tools | Extend agent capabilities with event scheduling, expense tracking, and more. |
| 8 | 🌐 MCP Integration | Connect agents to external data sources and tools using Model Context Protocol. |
| 9 | 🔗 A2A Protocol | Enable agent-to-agent interactions for complex workflows. |

> [!IMPORTANT]
> Star VideoSDK Repositories ⭐️
>
> Get instant notifications for new releases and updates. Your support helps us grow and improve VideoSDK!

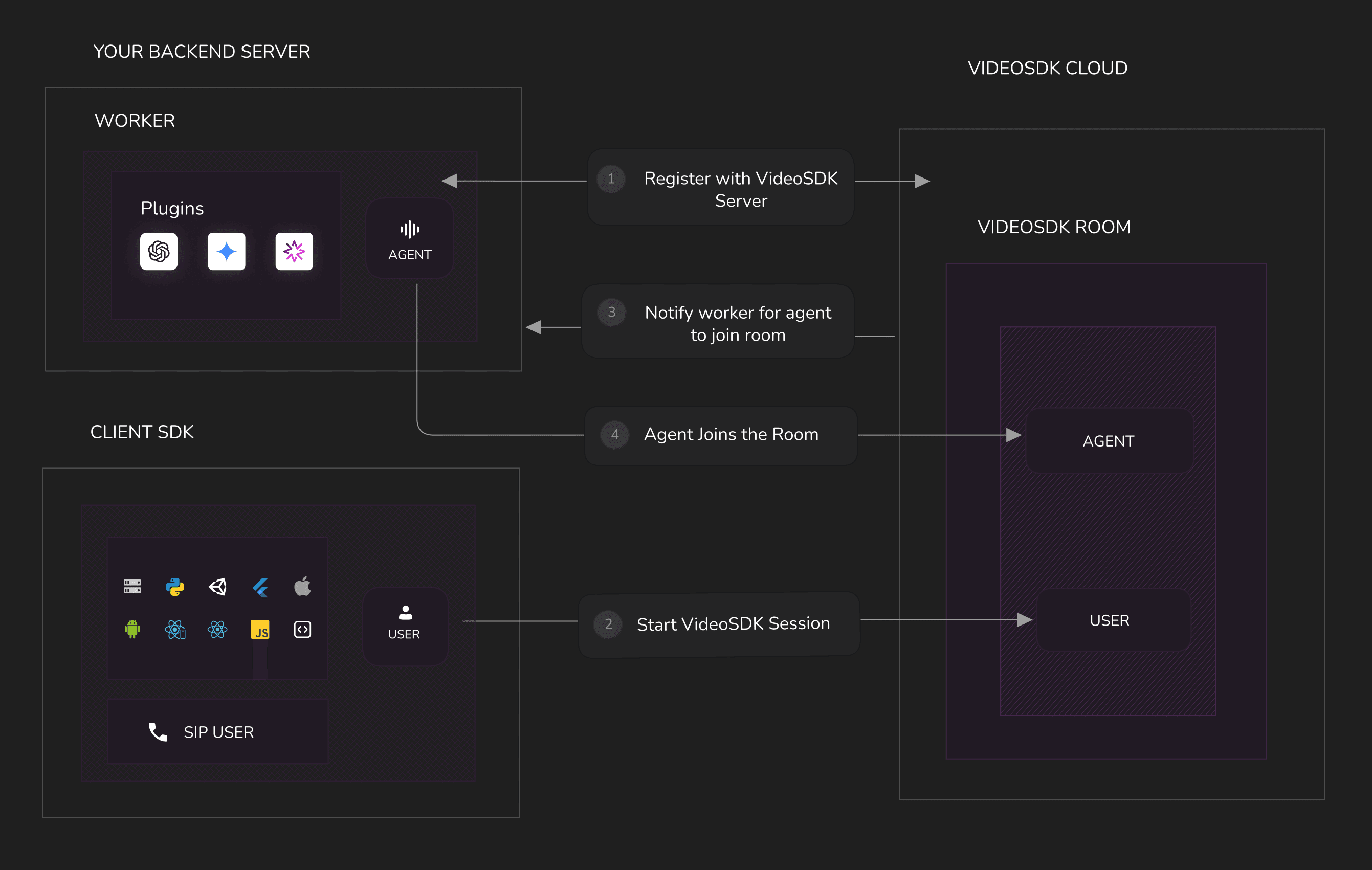

This architecture shows how AI voice agents connect to VideoSDK meetings. The system links your backend with VideoSDK's platform, allowing AI assistants to interact with users in real-time.

Before you begin, ensure you have:

- A VideoSDK authentication token (generate one from app.videosdk.live)
- A VideoSDK meeting ID (you can generate one using the Create Room API or through the VideoSDK dashboard)
- Python 3.12 or higher
- Third-party API keys:
  - API keys for the services you intend to use (e.g., OpenAI for LLM/STT/TTS, ElevenLabs for TTS, Google for Gemini, etc.)
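The Python requirement is easy to check before creating the virtual environment. The sketch below uses only the standard library; `python_version_ok` is a hypothetical helper name, and the `(3, 12)` minimum comes from the prerequisites above.

```python
import sys

# Minimum interpreter version stated in the prerequisites.
MIN_PYTHON = (3, 12)


def python_version_ok(version_info=sys.version_info, minimum=MIN_PYTHON) -> bool:
    """Return True if the (major, minor) part of version_info meets the minimum."""
    return tuple(version_info[:2]) >= minimum


if __name__ == "__main__":
    current = sys.version.split()[0]
    if python_version_ok():
        print(f"Python {current} meets the 3.12+ requirement.")
    else:
        print(f"Python 3.12+ is required, but this interpreter is {current}.")
```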

1. Create and activate a virtual environment with Python 3.12 or higher.

   macOS / Linux

   ```bash
   python3 -m venv venv
   source venv/bin/activate
   ```

   Windows

   ```powershell
   python -m venv venv
   venv\Scripts\activate
   ```

2. Install the core VideoSDK AI Agent package:

   ```bash
   pip install videosdk-agents
   ```

3. Install optional plugins. Plugins integrate different providers for Realtime, STT, LLM, TTS, and more. Install what your use case needs:

   ```bash
   # Example: Install the Turn Detector plugin
   pip install videosdk-plugins-turn-detector
   ```

   👉 Supported plugins (Realtime, LLM, STT, TTS, VAD, Avatar, SIP) are listed in the Supported Libraries section below.
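To confirm which packages actually landed in the active virtual environment, you can query installed distributions with the standard library's `importlib.metadata`. This is a sketch: `installed_versions` is a hypothetical helper, and the `PACKAGES` list only contains the two distribution names from the pip commands above, so extend it with whatever plugins you installed.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names from the pip commands above; add any other plugins you installed.
PACKAGES = ["videosdk-agents", "videosdk-plugins-turn-detector"]


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if it is missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    for name, ver in installed_versions(PACKAGES).items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```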

Before your AI agent can join a meeting, you'll need a meeting ID. You can generate one using the VideoSDK Create Room API:

```bash
curl -X POST https://api.videosdk.live/v2/rooms \
  -H "Authorization: YOUR_JWT_TOKEN_HERE" \
  -H "Content-Type: application/json"
```

For more details on the Create Room API, refer to the VideoSDK documentation.
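If you prefer to create the room from Python, the sketch below sends the same POST request as the curl command using only the standard library. It assumes the JSON response carries the new ID in a `roomId` field and that your token is stored in a `VIDEOSDK_AUTH_TOKEN` environment variable; check the Create Room API reference before relying on either. `build_create_room_request`, `parse_room_id`, and `create_room` are illustrative names, not part of the SDK.

```python
import json
import os
import urllib.request

CREATE_ROOM_URL = "https://api.videosdk.live/v2/rooms"


def build_create_room_request(token: str) -> urllib.request.Request:
    """Build the same POST request as the curl command (no request body)."""
    return urllib.request.Request(
        CREATE_ROOM_URL,
        method="POST",
        headers={"Authorization": token, "Content-Type": "application/json"},
    )


def parse_room_id(body: bytes) -> str:
    """Extract the room ID, assuming the response JSON has a "roomId" field."""
    return json.loads(body)["roomId"]


def create_room(token: str) -> str:
    """Call the Create Room API and return the new room ID."""
    with urllib.request.urlopen(build_create_room_request(token), timeout=10) as resp:
        return parse_room_id(resp.read())


if __name__ == "__main__":
    token = os.environ.get("VIDEOSDK_AUTH_TOKEN")
    if token:
        print(create_room(token))
    else:
        print("Set VIDEOSDK_AUTH_TOKEN before running this script.")
```

The returned ID is what you would pass as `room_id` when configuring the agent.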

Now that you've installed the necessary packages, you're ready to build!

First, let's create a custom voice agent by inheriting from the base Agent class:

```python
from videosdk.agents import Agent, function_tool

# External tool: `get_weather(latitude, longitude)` is defined as a standalone
# function (see the Function Tools step below) and registered via `tools=[...]`.

class VoiceAgent(Agent):
    def __init__(self):
        super().__init__(
            instructions="You are a helpful voice assistant that can answer questions and help with tasks.",
            tools=[get_weather],  # Register any external tool defined outside this class
        )

    async def on_enter(self) -> None:
        """Called when the agent first joins the meeting."""
        await self.session.say("Hi there! How can I help you today?")

    async def on_exit(self) -> None:
        """Called when the agent exits the meeting."""
        await self.session.say("Goodbye!")
```

This code defines a basic voice agent with:

- Custom instructions that define the agent's personality and capabilities
- An entry message when joining a meeting
- An exit message when leaving the meeting
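Because both hooks only delegate to `self.session.say`, their logic can be exercised without joining a room by swapping in a stand-in session. The sketch below is a plain-Python test double under that assumption: `FakeSession`, `GreeterHooks`, and `run_lifecycle` are hypothetical names, `GreeterHooks` copies the hook bodies rather than subclassing the SDK's `Agent`, and the enter-then-exit order is assumed from the docstrings above.

```python
import asyncio


class FakeSession:
    """Hypothetical stand-in for the agent session that records spoken text."""

    def __init__(self):
        self.spoken = []

    async def say(self, text: str) -> None:
        self.spoken.append(text)


class GreeterHooks:
    """Mirrors VoiceAgent's on_enter/on_exit bodies without the SDK base class."""

    def __init__(self, session):
        self.session = session

    async def on_enter(self) -> None:
        await self.session.say("Hi there! How can I help you today?")

    async def on_exit(self) -> None:
        await self.session.say("Goodbye!")


async def run_lifecycle(agent) -> None:
    # Assumed order: on_enter when joining, on_exit when leaving.
    await agent.on_enter()
    await agent.on_exit()


if __name__ == "__main__":
    session = FakeSession()
    asyncio.run(run_lifecycle(GreeterHooks(session)))
    print(session.spoken)
```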

Function tools allow your agent to perform actions beyond conversation. There are two ways to define tools:

- External Tools: Defined as standalone functions outside the agent class and registered via the `tools` argument in the agent's constructor.
- Internal Tools: Defined as methods inside the agent class and decorated with `@function_tool`.

Below is an example of both:

```python
import aiohttp
from videosdk.agents import Agent, function_tool

# External function tool
@function_tool
async def get_weather(latitude: str, longitude: str):
    """Get the current temperature (Celsius) for the given coordinates."""
    print(f"Getting weather for {latitude}, {longitude}")
    url = f"https://api.open-meteo.com/v1/forecast?latitude={latitude}&longitude={longitude}&current=temperature_2m"
    async with aiohttp.ClientSession() as session:
        async with session.get(url) as response:
            if response.status == 200:
                data = await response.json()
                return {
                    "temperature": data["current"]["temperature_2m"],
                    "temperature_unit": "Celsius",
                }
            else:
                raise Exception(
                    f"Failed to get weather data, status code: {response.status}"
                )


class VoiceAgent(Agent):
    # ... previous code ...

    # Internal function tool
    @function_tool
    async def get_horoscope(self, sign: str) -> dict:
        """Return a short horoscope for the given zodiac sign."""
        horoscopes = {
            "Aries": "Today is your lucky day!",
            "Taurus": "Focus on your goals today.",
            "Gemini": "Communication will be important today.",
        }
        return {
            "sign": sign,
            "horoscope": horoscopes.get(sign, "The stars are aligned for you today!"),
        }
```

- Use external tools for reusable, standalone functions (registered via `tools=[...]`).
- Use internal tools for agent-specific logic as class methods.
- Both must be decorated with `@function_tool` for the agent to recognize and use them.
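For a model to call a tool, it generally needs a JSON-Schema-style description of the tool's name and parameters. The sketch below shows one way such a description could be derived from a function signature with `inspect` and `typing.get_type_hints`. `describe_tool` is a hypothetical helper, not the SDK's `@function_tool` implementation, whose internals may differ; the type mapping is deliberately simplified.

```python
import inspect
import json
from typing import get_type_hints

# Simplified mapping from Python annotations to JSON Schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean", dict: "object", list: "array"}


def describe_tool(func) -> dict:
    """Build a JSON-Schema-style description of a function's parameters."""
    hints = get_type_hints(func)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        if name == "self":
            continue  # internal tools are methods; `self` is not a model-facing argument
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value, so the model must supply it
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


async def get_weather(latitude: str, longitude: str):
    """Get the current temperature for a location."""


if __name__ == "__main__":
    print(json.dumps(describe_tool(get_weather), indent=2))
```

This also suggests why type hints and docstrings on tools are worth writing carefully: they are the most natural source for the description a model sees.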

The pipeline connects your agent to an AI model. Here, we are using Google's Gemini for a Real-time Pipeline. You could also use a Cascading Pipeline.

```python
from videosdk.plugins.google import GeminiRealtime, GeminiLiveConfig
from videosdk.agents import RealTimePipeline, JobContext

async def start_session(context: JobContext):
    # Initialize the AI model
    model = GeminiRealtime(
        model="gemini-2.0-flash-live-001",
        # When GOOGLE_API_KEY is set in .env, DON'T pass the api_key parameter
        api_key="AKZSXXXXXXXXXXXXXXXXXXXX",
        config=GeminiLiveConfig(
            voice="Leda",  # Puck, Charon, Kore, Fenrir, Aoede, Leda, Orus, and Zephyr
            response_modalities=["AUDIO"],
        ),
    )

    pipeline = RealTimePipeline(model=model)

    # Continue to the next steps...
```

Now, let's put everything together and start the agent session:

```python
import asyncio

from videosdk.agents import AgentSession, WorkerJob, RoomOptions, JobContext

async def start_session(context: JobContext):
    # ... previous setup code ...

    # Create the agent session
    session = AgentSession(
        agent=VoiceAgent(),
        pipeline=pipeline,
    )

    try:
        await context.connect()
        # Start the session
        await session.start()
        # Keep the session running until manually terminated
        await asyncio.Event().wait()
    finally:
        # Clean up resources when done
        await session.close()
        await context.shutdown()


def make_context() -> JobContext:
    room_options = RoomOptions(
        room_id="<meeting_id>",  # Replace with your actual meeting ID
        auth_token="<VIDEOSDK_AUTH_TOKEN>",  # When VIDEOSDK_AUTH_TOKEN is set in .env, DON'T pass auth_token
        name="Test Agent",
        playground=True,
        # vision=True,  # Only available when using the Google Gemini Live API
    )
    return JobContext(room_options=room_options)


if __name__ == "__main__":
    job = WorkerJob(entrypoint=start_session, jobctx=make_context)
    job.start()
```

After setting up your AI agent, you'll need a client application to connect to it. You can use any of the VideoSDK quickstart examples to create a client that joins the same meeting.

When setting up your client application, make sure to use the same meeting ID that your AI Agent is using.

Once you have completed the setup, you can run your AI Voice Agent project using Python. Make sure your .env file is properly configured and all dependencies are installed.
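A quick preflight check can catch a missing key before the agent starts. The sketch below reads `.env` with a deliberately minimal `KEY=VALUE` parser (a simplified stand-in for a library such as python-dotenv; it ignores `export` prefixes and multi-line values) and lets real environment variables take precedence. The variable names come from the comments in the examples above; `load_dotenv_file` and `missing_vars` are hypothetical helpers, and you should adjust `REQUIRED_VARS` to the providers you actually use.

```python
import os
from pathlib import Path

# Variable names taken from the example comments; adjust for your providers.
REQUIRED_VARS = ["VIDEOSDK_AUTH_TOKEN", "GOOGLE_API_KEY"]


def load_dotenv_file(path: str = ".env") -> dict:
    """Minimal KEY=VALUE parser that skips blank lines and # comments."""
    values = {}
    env_path = Path(path)
    if not env_path.exists():
        return values
    for line in env_path.read_text().splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_vars(required, env) -> list:
    """Return the required names that are absent or empty in env."""
    return [name for name in required if not env.get(name)]


if __name__ == "__main__":
    env = {**load_dotenv_file(), **os.environ}  # real environment overrides .env
    missing = missing_vars(REQUIRED_VARS, env)
    if missing:
        print("Missing: " + ", ".join(missing))
    else:
        print("All required variables are set.")
```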

```bash
python main.py
```

- For detailed guides, tutorials, and API references, check out our official VideoSDK AI Agents Documentation.
- To see the framework in action, explore the code in the Examples directory. It is a great place to get started.

The framework supports integration with various AI models and tools, including:

| Category | Services |
|---|---|
| Real-time Models | OpenAI, Gemini, AWS NovaSonic |
| Speech-to-Text (STT) | OpenAI, Google, Sarvam AI, Deepgram, Cartesia |
| Language Models (LLM) | OpenAI, Google, Sarvam AI, Anthropic, Cerebras |
| Text-to-Speech (TTS) | OpenAI, Google, AWS Polly, Sarvam AI, ElevenLabs, Cartesia, Resemble AI, Smallest AI, Speechify, InWorld, Neuphonic, Rime AI, Hume AI, Groq, LMNT AI |
| Voice Activity Detection (VAD) | SileroVAD |
| Turn Detection Model | Turn Detector |
| Virtual Avatar | Simli |
| SIP Trunking | Twilio |

Explore the following examples to see the framework in action:

- Use case: Hospital appointment booking via a voice-enabled agent.
- Use case: Ask about available flights and hotels, and send an email with booking info.
- 👨🏫 AI Avatar. Use case: Answering queries about current weather conditions using an avatar.
- Use case: E-commerce scenario with turn detection when interrupting the voice agent.

The Agents framework is under active development in a rapidly evolving field. We welcome and appreciate contributions of any kind, be it feedback, bug fixes, features, new plugins and tools, or better documentation. You can file issues under this repo, open a PR, or chat with us in VideoSDK's Discord community.

When contributing, consider developing new plugins or enhancing existing ones to expand the framework's capabilities. Your contributions can help integrate more AI models and tools, making the framework even more versatile.

Want to create your own STT, LLM, or TTS plugin? Check out our comprehensive guide: BUILD YOUR OWN PLUGIN

This guide provides:

- Step-by-step instructions for creating custom plugins

- Directory structure and file requirements

- Implementation examples for STT, LLM, and TTS plugins

- Testing and submission guidelines

- Reference to existing plugin examples

We love our contributors! Here's how you can contribute:

- Open an issue if you believe you've encountered a bug.

- Follow the documentation guide to get your local dev environment set up.

- Make a pull request to add new features/make quality-of-life improvements/fix bugs.

- Create custom plugins following our plugin development guide.