This is the implementation of the paper "Image Matching and Localization Based on Fusion of Handcrafted and Deep Features".

- car_hynet: The code for training and validation.

- core: The core code of DeFusion.

Run `python main.py`. The default output of this command is shown below. To adapt the pipeline to your scene, adjust the parameters in `main.py` and `matcher.py`, and modify the preprocessing steps in `image_utils.py`.

Overall Architecture

The system consists of four parts: a preprocessing step that exploits specific scene characteristics, CAR-HyNet for deep feature extraction, a decision-level fusion (DLF) and matching method that combines handcrafted and deep features, and target localization based on the proposed image matching scheme.

Architecture of CA-SandGlass (CoordAtt SandGlass)
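The CA-SandGlass module builds on coordinate attention (CoordAtt). For readers unfamiliar with it, here is a minimal NumPy sketch of one CoordAtt forward pass on a single (C, H, W) feature map, following the general published design: pool along each spatial axis, apply a shared 1x1 transform, then split into per-row and per-column attention maps. It is not the repository's code. The weight names `w_reduce`, `w_h` and `w_w` are hypothetical, 1x1 convolutions are written as matrix products, ReLU stands in for the original nonlinearity, and batch normalization is omitted.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def coord_att(x, w_reduce, w_h, w_w):
    """Coordinate attention on one feature map (sketch, not the repo's code).

    x:        (C, H, W) feature map
    w_reduce: (C_mid, C) shared 1x1 "conv" applied to the pooled features
    w_h, w_w: (C, C_mid) 1x1 "convs" producing height/width attention
    """
    h = x.shape[1]
    # Directional average pooling: along width -> (C, H), along height -> (C, W)
    pool_h = x.mean(axis=2)
    pool_w = x.mean(axis=1)
    # Concatenate along the spatial axis and apply the shared 1x1 transform
    y = np.concatenate([pool_h, pool_w], axis=1)  # (C, H + W)
    y = np.maximum(w_reduce @ y, 0.0)             # (C_mid, H + W); ReLU assumed
    y_h, y_w = y[:, :h], y[:, h:]
    # Separate transforms + sigmoid give attention weights in (0, 1)
    a_h = sigmoid(w_h @ y_h)  # (C, H): one weight per channel and row
    a_w = sigmoid(w_w @ y_w)  # (C, W): one weight per channel and column
    # out[c, i, j] = x[c, i, j] * a_h[c, i] * a_w[c, j]
    return x * a_h[:, :, None] * a_w[:, None, :]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    C, H, W, C_mid = 8, 6, 5, 4
    x = rng.standard_normal((C, H, W))
    out = coord_att(
        x,
        rng.standard_normal((C_mid, C)),
        rng.standard_normal((C, C_mid)),
        rng.standard_normal((C, C_mid)),
    )
    print(out.shape)  # (8, 6, 5)
```

Because each output value is the input scaled by a row weight and a column weight, the module injects position along both axes while leaving the tensor shape unchanged, which is what allows it to be dropped into an existing block.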

Traditional convolution operations only model relationships within a local neighborhood and are limited in capturing positional relationships. To address this, we introduce a CoordAtt module that embeds positional information into channel attention, capturing long-range dependencies for a more accurate feature description. SandGlass, in turn, is a lightweight module that focuses on feature information at different scales. Because CoordAtt focuses on long-range dependencies, combining the two lets the network generate more comprehensive and discriminative feature representations. In addition, since the residual connection needs to be built on high-dimensional features, we integrate the two modules into a single CA-SandGlass module. To improve network performance, we add CoordAtt to the second and third FRN (Filter Response Normalization) layers; our experiments show that placing the CoordAtt module at these locations yields superior performance.

Architecture of CAR-HyNet (Coordinate Attention Residual Network)

The original HyNet shares the structure of L2-Net, which consists of six feature extraction layers and one output layer. CAR-HyNet adds two CA-SandGlass layers to increase nonlinearity and improve fitting ability. Another important change is that CAR-HyNet takes all three RGB channels as input. Compared with grayscale images, color images carry much richer information at negligible extra computational cost. Discarding color can cause incorrect matches, particularly in regions that have the same grayscale intensity and shape but different colors.

We extract handcrafted features with the RootSIFT algorithm on grayscale images. To achieve fine-grained control over feature point correspondence during fusion and to reduce the computational cost of deep learning, we use the handcrafted features as prior knowledge for deep feature extraction: patches at the RootSIFT feature points are fed into CAR-HyNet, which outputs 128-D deep descriptors. Using the RootSIFT feature points as prior knowledge in this way enhances rotation invariance and yields deep features that can be fused with the handcrafted ones.
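RootSIFT itself is a simple post-processing of SIFT descriptors: L1-normalize each descriptor, then take the element-wise square root, so that comparing the results with Euclidean distance is equivalent to comparing the originals with the Hellinger kernel. The NumPy sketch below is one way to write it; the function name `root_sift` and the `eps` guard are our own choices. In practice the input would be raw SIFT descriptors, for example from OpenCV's `cv2.SIFT_create().detectAndCompute(...)`.

```python
import numpy as np


def root_sift(desc, eps=1e-7):
    """Convert SIFT descriptors (N, 128) to RootSIFT descriptors (N, 128)."""
    desc = np.asarray(desc, dtype=np.float64)
    # L1-normalize each row (SIFT descriptor entries are non-negative)
    desc = desc / (desc.sum(axis=1, keepdims=True) + eps)
    # Element-wise square root; each row now has (approximately) unit L2 norm
    return np.sqrt(desc)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sift = rng.integers(0, 256, size=(4, 128)).astype(np.float32)
    rs = root_sift(sift)
    print(np.linalg.norm(rs, axis=1))  # each value is close to 1.0
```

Since the output rows have unit L2 norm, the squared Euclidean distance between two RootSIFT descriptors equals 2 minus twice their Hellinger similarity, so existing Euclidean nearest-neighbor matching can be reused unchanged.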

Architecture of DLF (Decision-Level Fusion)

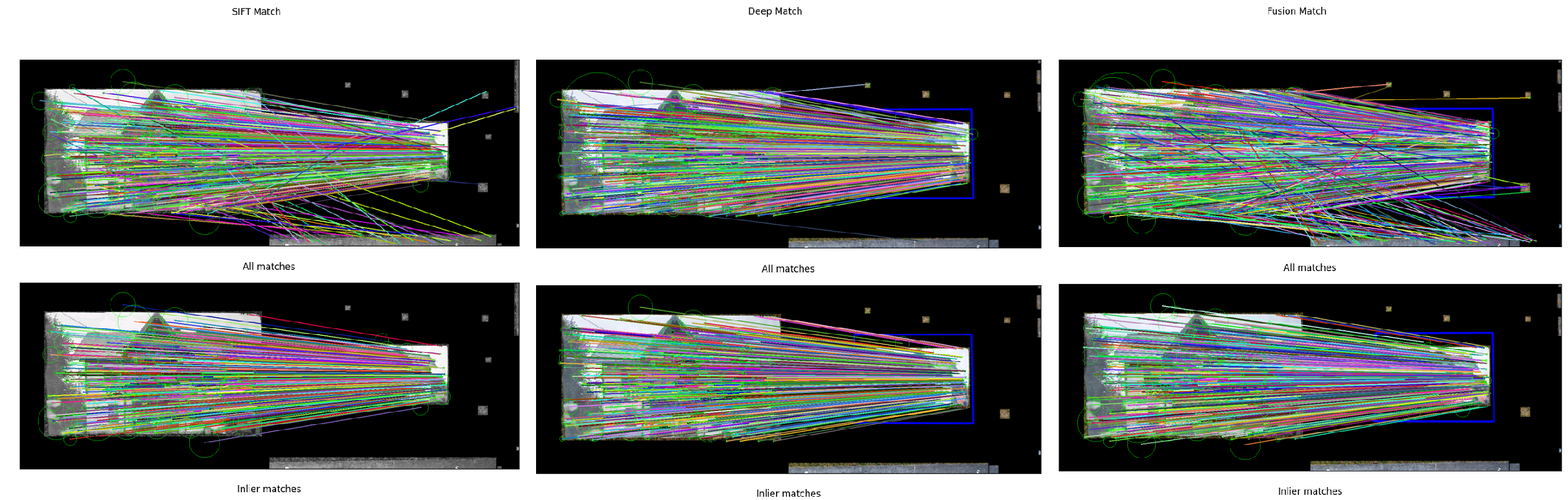
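The matching rule described in this section can be sketched in NumPy as follows. Because each CAR-HyNet descriptor belongs to the same keypoint as the RootSIFT descriptor with the same index, the two nearest neighbors found with RootSIFT can be looked up directly in the CAR-HyNet distance matrix. How the two NNDR decisions are combined is an assumption here: `require_both=True` accepts a match only when both descriptors pass, and `require_both=False` when either does. The function names, the ratio threshold of 0.8, and the brute-force distance computation are illustrative choices, not the repository's.

```python
import numpy as np


def pairwise_l2(a, b):
    """(N1, N2) matrix of Euclidean distances between rows of a and rows of b."""
    return np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)


def dlf_match(d1_rs, d2_rs, d1_car, d2_car, ratio=0.8, require_both=True):
    """Decision-level fusion of RootSIFT and CAR-HyNet NNDR tests (sketch).

    Row k of d*_rs and row k of d*_car must describe the same keypoint.
    Image2 needs at least two keypoints. Returns a list of (i, j) index pairs.
    """
    dist_rs = pairwise_l2(d1_rs, d2_rs)
    dist_car = pairwise_l2(d1_car, d2_car)
    matches = []
    for i in range(dist_rs.shape[0]):
        # First and second nearest neighbours in Image2 under RootSIFT (m = 1, 2)
        j1, j2 = np.argsort(dist_rs[i])[:2]
        pass_rs = dist_rs[i, j1] < ratio * dist_rs[i, j2]
        # Distances to the same two keypoints under CAR-HyNet
        pass_car = dist_car[i, j1] < ratio * dist_car[i, j2]
        accepted = (pass_rs and pass_car) if require_both else (pass_rs or pass_car)
        if accepted:
            matches.append((i, int(j1)))
    return matches


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    rs = rng.random((5, 128))
    car = rng.standard_normal((5, 128))
    # Image2 is an exact copy, so every keypoint should match itself
    print(dlf_match(rs, rs.copy(), car, car.copy()))
    # [(0, 0), (1, 1), (2, 2), (3, 3), (4, 4)]
```

Reusing the RootSIFT neighbor indices means only one nearest-neighbor search is needed; the CAR-HyNet side is a table lookup, which is what the one-to-one keypoint mapping makes possible.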

For two input images to be matched, Image1 and Image2, we first extract their RootSIFT descriptors $D_1^{\text{RootSIFT}}$ and $D_2^{\text{RootSIFT}}$, and then their CAR-HyNet descriptors $D_1^{\text{CAR-HyNet}}$ and $D_2^{\text{CAR-HyNet}}$, which are rotation and scale invariant. Because CAR-HyNet takes RootSIFT as its prior knowledge, the handcrafted and deep features form a one-to-one mapping. For each descriptor type, we compute the Euclidean distances between the descriptors of every feature point in Image1 and those in Image2. Each feature point $i$ thus obtains the distances $d^{m}_{i,\text{RootSIFT}}$ and $d^{m}_{i,\text{CAR-HyNet}}$ to its two nearest neighbors, where $m = 1, 2$ indexes the first and second nearest neighbor. Specifically, we traverse the RootSIFT feature points in turn and, for the two nearest neighbors of each point, look up the distances of the same two points among the CAR-HyNet descriptors. We then apply the nearest neighbor distance ratio (NNDR) test to the distances from both feature extraction algorithms to decide whether the match succeeds.

Inverse Perspective Transformation
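One common way to realize attitude-based IPT, assuming a pinhole camera with known intrinsic matrix K and treating the correction as a pure camera rotation R that cancels the measured tilt, is the rotation-only homography $H = K R K^{-1}$. The NumPy sketch below builds H for a tilt about the camera x-axis only; the helper names are hypothetical, the rotation sign depends on the attitude convention, and the repository may combine roll, pitch, and yaw differently. With OpenCV, the resulting H could then be applied to an image with `cv2.warpPerspective(image, H, (width, height))`.

```python
import numpy as np


def rot_x(theta):
    """Rotation matrix about the camera x-axis by theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def intrinsics(f, cx, cy):
    """Pinhole intrinsic matrix: focal length f (pixels), principal point (cx, cy)."""
    return np.array([[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]])


def ipt_homography(K, R):
    """Rotation-only homography H = K R K^-1 between tilted and corrected views."""
    return K @ R @ np.linalg.inv(K)


def warp_point(H, x, y):
    """Map pixel (x, y) through H using homogeneous coordinates."""
    p = H @ np.array([x, y, 1.0])
    return float(p[0] / p[2]), float(p[1] / p[2])


if __name__ == "__main__":
    K = intrinsics(f=800.0, cx=320.0, cy=240.0)
    H = ipt_homography(K, rot_x(np.deg2rad(10.0)))
    # Under this convention the principal point moves to (cx, cy - f * tan(theta))
    print(warp_point(H, 320.0, 240.0))
```

Since H depends only on the attitude and the intrinsics, it is computed once per image pair, consistent with performing the transformation and matching only once.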

Images to be matched usually differ by some degree of perspective transformation, especially in aerial scenes where the drone may be tilted. The two images can be related by a transformation matrix, and the attitude information of the UAV and camera is available. We therefore use attitude-based IPT to correct the oblique image to a bird's-eye view, improving both feature point extraction and the matching rate. More importantly, unlike methods that simulate many viewpoints, this approach performs the transformation and matching only once, so it does not incur high latency.

If you use this repository in your work, please cite our paper:

@ARTICLE{10225672,
  author={Song, Xianfeng and Zou, Yi and Shi, Zheng and Yang, Yanfeng},
  journal={IEEE Sensors Journal},
  title={Image Matching and Localization Based on Fusion of Handcrafted and Deep Features},
  year={2023},
  volume={},
  number={},
  pages={1-1},
  doi={10.1109/JSEN.2023.3305677}
}