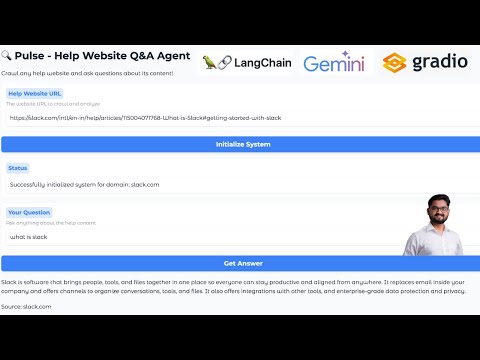

A documentation question-answering (QA) system that crawls help websites, processes their content, and answers questions about it using RAG (Retrieval-Augmented Generation) with Google's Gemini AI.

- Flow Diagram / Architecture

- Storage Structure

- Features

- Installation

- Usage

- Contributing

## Storage Structure

```
Pulse-WebQA_Agent/
├── notebooks/
├── text/                  # Raw crawled content
│   └── domain.com/
│       ├── page1.txt
│       └── page2.txt
├── processed/             # Processed content
│   └── scraped.csv
├── chroma_db/             # Vector database
│   ├── index/
│   └── embeddings/
├── src/
│   ├── app.py
│   ├── crawler.py
│   ├── processor.py
│   └── qa_system.py
├── requirements.txt
├── run.py
├── docker-compose.yml
├── Dockerfile
└── setup.py
```
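The layout above stores each crawled page as a text file under `text/<domain>/`. The helper below maps a URL to such a path and writes the page there. It is a minimal sketch: the names `url_to_text_path` and `save_page` are hypothetical, and the slug rule (URL path segments joined with `_`, with `index` for the site root) is an assumption, not the crawler's confirmed naming scheme.

```python
from pathlib import Path
from urllib.parse import urlparse


def url_to_text_path(url: str, root: str = "text") -> Path:
    """Map a crawled URL to text/<domain>/<slug>.txt (hypothetical naming rule)."""
    parsed = urlparse(url)
    # Flatten the URL path into one file-name-safe slug; the site root becomes "index".
    slug = parsed.path.strip("/").replace("/", "_") or "index"
    return Path(root) / parsed.netloc / f"{slug}.txt"


def save_page(url: str, text: str, root: str = "text") -> Path:
    """Write extracted page text to its mapped location, creating folders as needed."""
    path = url_to_text_path(url, root)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(text, encoding="utf-8")
    return path
```

For example, `https://domain.com/page1` maps to `text/domain.com/page1.txt`, which matches the tree above.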

## Features

### Web Crawling

- Configurable depth and page limits
- Intelligent URL filtering
- Progress tracking

### Content Processing

- Removes irrelevant elements (navigation, footers)
- Preserves document hierarchy
- Handles multiple content types

### Question Answering

- Google Gemini AI integration
- Semantic search
- Context-aware responses

### Vector Storage

- ChromaDB vector database
- Supports appending new content
- Efficient retrieval
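A crawl with configurable depth and page limits can be pictured as a breadth-first search that stops expanding links past `max_depth` and stops visiting pages after `max_pages`. The sketch below is illustrative, not the project's `crawler.py`. It assumes that "URL filtering" means staying on the starting domain, dropping `#fragment` suffixes, and skipping duplicates. `get_links` is a hypothetical hook: a real crawler would fetch the page there and parse its anchors, but here an in-memory site stands in so the example runs offline.

```python
from collections import deque
from typing import Callable, Iterable
from urllib.parse import urljoin, urlparse


def crawl(
    start_url: str,
    get_links: Callable[[str], Iterable[str]],
    max_depth: int = 2,
    max_pages: int = 50,
) -> list[str]:
    """Breadth-first crawl bounded by link depth and total page count (sketch)."""
    domain = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([(start_url, 0)])
    visited: list[str] = []
    while queue and len(visited) < max_pages:
        url, depth = queue.popleft()
        visited.append(url)
        if depth >= max_depth:
            continue  # page is kept, but its links are not followed
        for href in get_links(url):
            # Resolve relative links and drop "#fragment" parts.
            link = urljoin(url, href).split("#")[0]
            # Assumed filter: same domain only, each URL queued once.
            if urlparse(link).netloc == domain and link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return visited


# In-memory stand-in for a help site (hypothetical data).
site = {
    "https://domain.com/": ["/page1", "/page2", "https://other.com/x"],
    "https://domain.com/page1": ["/page3#top"],
    "https://domain.com/page2": [],
    "https://domain.com/page3": [],
}
pages = crawl("https://domain.com/", lambda u: site.get(u, []), max_depth=1)
```

With `max_depth=1`, the crawler visits the start page and its two same-domain links but does not reach `page3`. The off-site link is never queued.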
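Removing navigation and footers usually means dropping the text inside those elements before indexing. The stdlib sketch below does this with `html.parser`. The skipped tag set (`nav`, `footer`, plus `script` and `style`, whose contents would otherwise leak in as text) is an assumption. The project may use a different parser or different rules, and this sketch does not attempt the hierarchy preservation listed above.

```python
from html.parser import HTMLParser


class ContentExtractor(HTMLParser):
    """Collect visible text, skipping navigation, footers, scripts and styles."""

    SKIP_TAGS = {"nav", "footer", "script", "style"}  # assumed set of irrelevant elements

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0  # greater than 0 while inside a skipped element
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self.skip_depth:
            self.parts.append(text)


def extract_text(html: str) -> str:
    parser = ContentExtractor()
    parser.feed(html)
    parser.close()  # flush any buffered text
    return "\n".join(parser.parts)


html = (
    "<nav><a href='/'>Home</a></nav><h1>Reset password</h1>"
    "<p>Open Settings.</p><footer>Copyright</footer><script>var x = 1;</script>"
)
cleaned = extract_text(html)
```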
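The question-answering flow follows the RAG pattern. It embeds the question, retrieves the most similar stored chunks, and places them in the prompt sent to the model. The sketch below shows only that retrieve-then-prompt shape. It uses a toy bag-of-words vector with cosine similarity in place of real embeddings, and it leaves out both the ChromaDB query and the Gemini call. The prompt wording and `k` are illustrative assumptions.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Ground the model in the retrieved documentation only."""
    joined = "\n\n".join(context)
    return (
        "Answer the question using only the documentation below.\n\n"
        f"Documentation:\n{joined}\n\nQuestion: {question}\nAnswer:"
    )


chunks = [
    "To reset your password, open Settings and choose Security.",
    "Invoices can be downloaded from the Billing page.",
    "Two-factor authentication is configured under Security.",
]
question = "How do I reset my password?"
context = retrieve(question, chunks, k=1)
prompt = build_prompt(question, context)  # this string would then go to Gemini
```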

## Installation

- Clone the repository:

  ```bash
  git clone https://github.com/rajeshmore1/Pulse-WebQA_Agent.git
  cd Pulse-WebQA_Agent
  ```

- Create and activate a virtual environment:

  ```bash
  python -m venv venv
  source venv/bin/activate  # On Windows: venv\Scripts\activate
  ```

- Install the dependencies:

  ```bash
  pip install -r requirements.txt
  ```

- Set up the environment variables by storing your Google API key in `.env`:

  ```bash
  echo "GOOGLE_API_KEY=your_api_key_here" > .env
  ```
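This README does not say how the application reads `GOOGLE_API_KEY` from `.env`. Projects like this often use a library such as python-dotenv, but that is an assumption. The stdlib sketch below shows the general idea: parse `KEY=VALUE` lines, ignore blank lines and comments, strip surrounding quotes, and leave variables that are already set in the real environment unchanged. `load_env` is a hypothetical name.

```python
import os
from pathlib import Path


def load_env(path: str = ".env") -> None:
    """Minimal .env loader (sketch): KEY=VALUE lines, '#' comments ignored."""
    env_file = Path(path)
    if not env_file.exists():
        return
    for line in env_file.read_text(encoding="utf-8").splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Strip optional quotes; never overwrite a variable that is already set.
        os.environ.setdefault(key.strip(), value.strip().strip('"').strip("'"))


# Typical use at start-up:
# load_env()
# api_key = os.environ.get("GOOGLE_API_KEY")
```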

## Usage

### Starting the Application

```bash
python app.py
```

### Running Unit Tests

```bash
python -m unittest test_crawler.py -v
```

### Running with Docker

Build the Docker image:

```bash
docker build -t pulse-qa .
```

Run using Docker Compose:

```bash
# Create a .env file with your API key
echo "GOOGLE_API_KEY=your_api_key_here" > .env

# Start the application
docker-compose up
```

## Contributing

- Fork the repository
- Create a feature branch
- Commit your changes
- Open a pull request