Intuitive, Powerful, and Hassle-Free RL Training & Monitoring – All in One Place.

⭐️ If you find this project useful, please consider giving it a star! It really helps!

📚 Full Documentation: https://docs.reinforceui-studio.com

🎬 Video Demo: YouTube Tutorial

ReinforceUI Studio is a Python-based application designed to simplify Reinforcement Learning (RL) workflows through a beautiful, intuitive GUI. No more memorizing commands, no more juggling extra repos – just train, monitor, and evaluate in a few clicks!

The easiest way to get started is to install ReinforceUI Studio directly from PyPI, which requires no extra configuration.

Follow these simple steps:

- Install ReinforceUI Studio from PyPI: `pip install reinforceui-studio`

- Run the application: `reinforceui-studio`

That's it! You’re ready to start training and monitoring your Reinforcement Learning agents through an intuitive GUI.

✅ Tip: If you encounter any issues, check out the Installation Guide in the full documentation.
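If the `reinforceui-studio` command is not found after installation, it can help to confirm that the package actually landed in the active Python environment. The snippet below is a minimal sketch using only the standard library; the helper name `installed_version` is made up for this example and is not part of ReinforceUI Studio.

```python
from importlib import metadata


def installed_version(package: str = "reinforceui-studio"):
    """Return the installed version of `package`, or None if it is not installed.

    `installed_version` is a hypothetical helper for this example only.
    """
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version()
    if version is None:
        print("reinforceui-studio not found; try: pip install reinforceui-studio")
    else:
        print(f"reinforceui-studio {version} is installed")
```

Run it with the same interpreter you used for `pip install`, since a mismatch between environments is a common reason for a missing command.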

- 🚀 Instant RL Training: Configure environments, select algorithms, set hyperparameters – all in seconds.

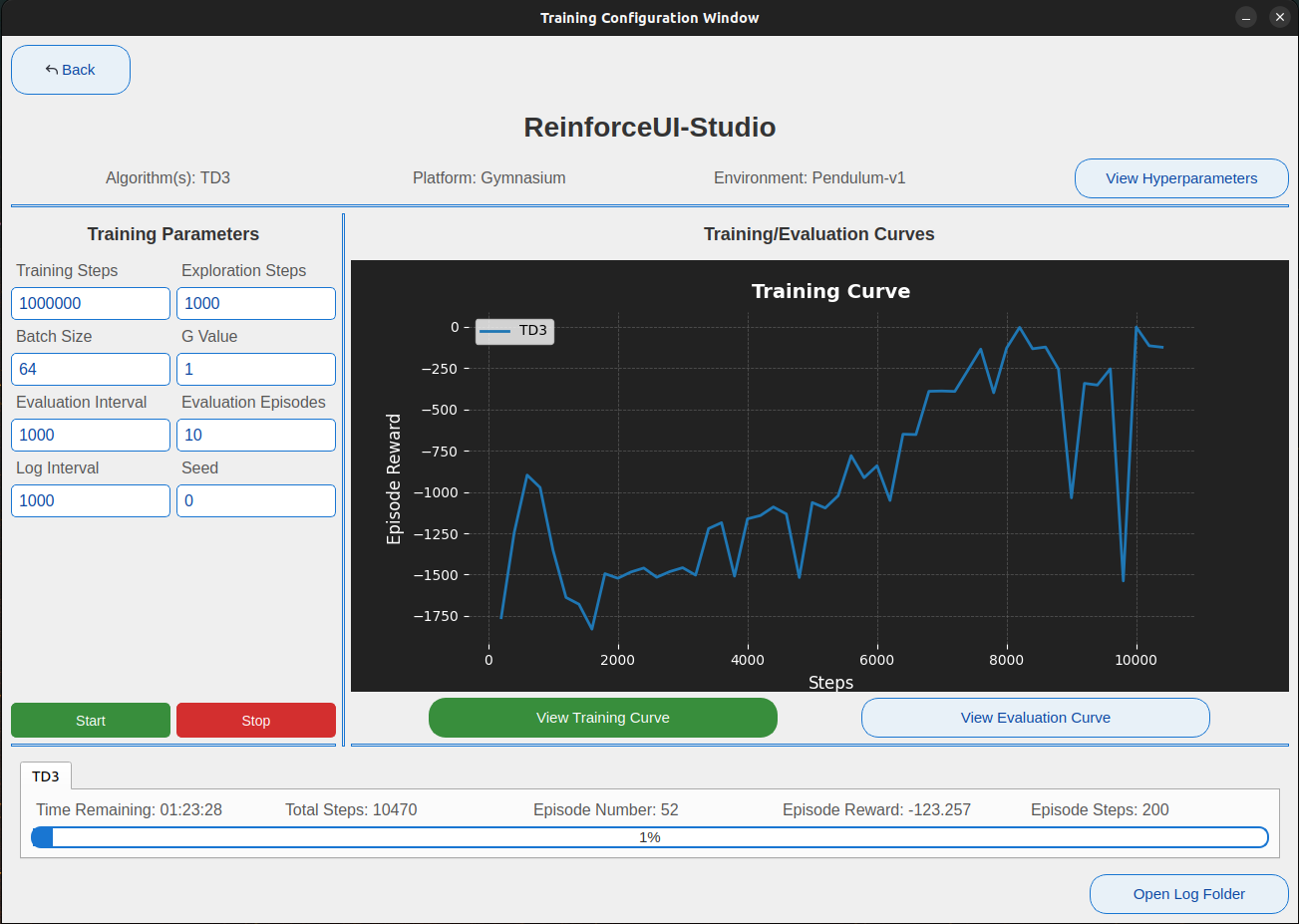

- 🖥️ Real-Time Dashboard: Watch your agents learn with live performance curves and metrics.

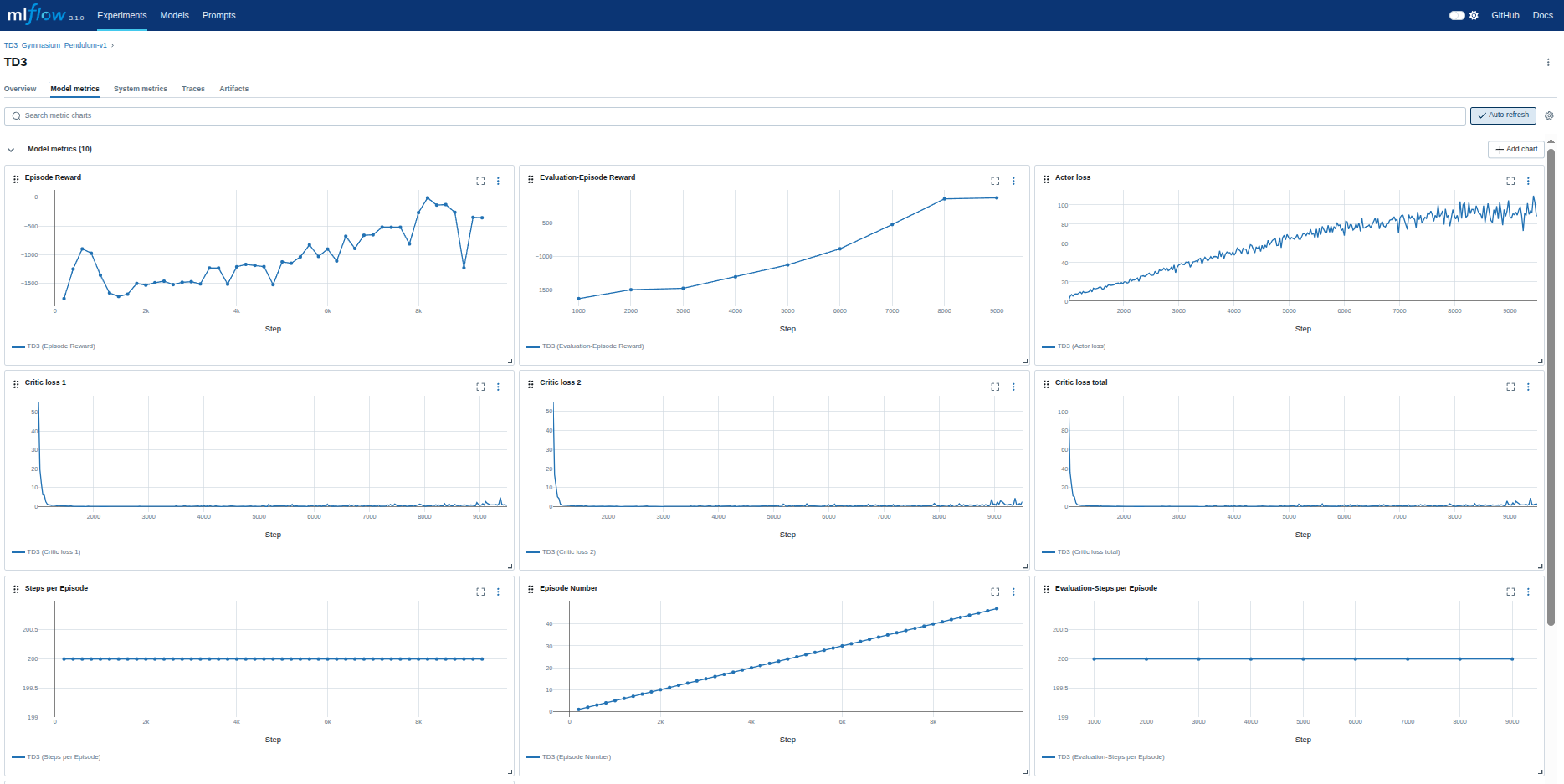

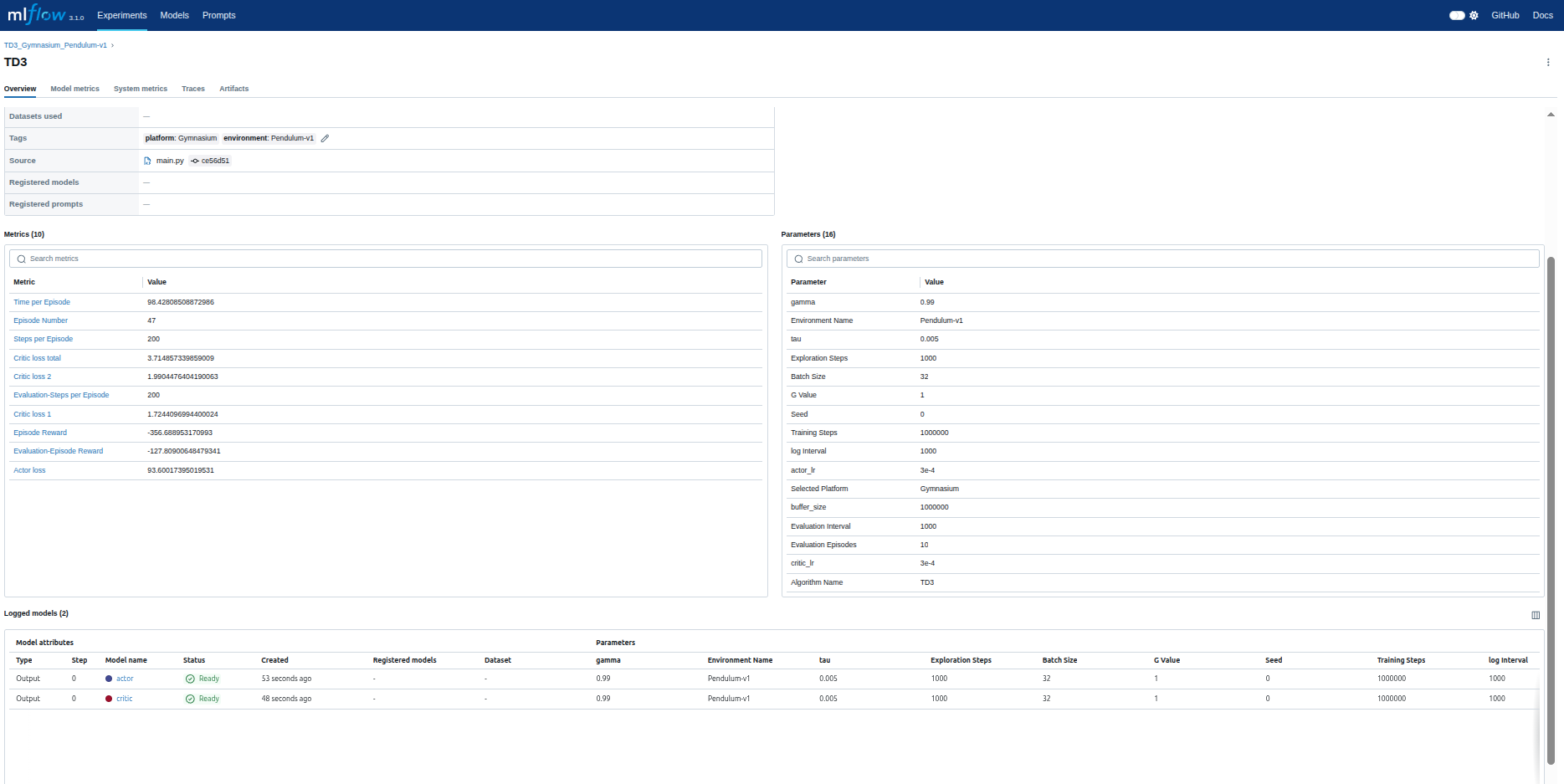

- 📊 MLflow Integration: Automatically log and visualize your training runs with MLflow.

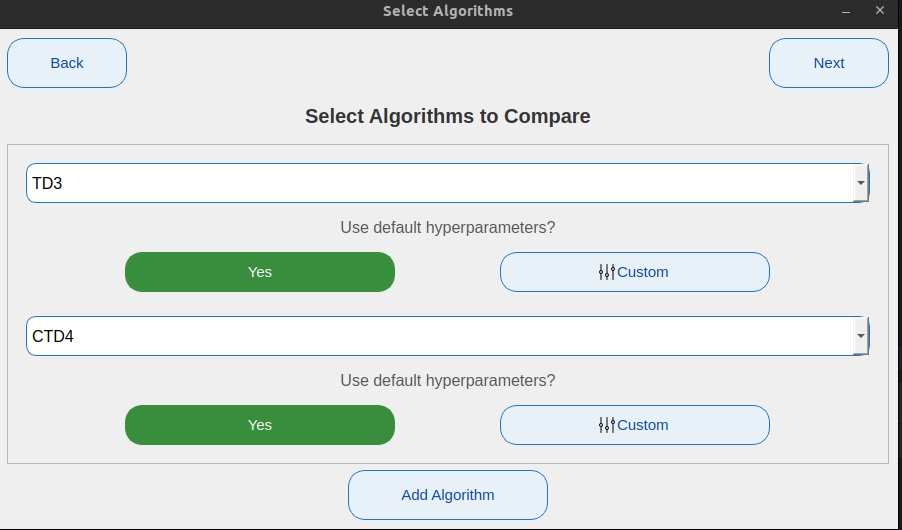

- 🧠 Multi-Algorithm Support: Train and compare multiple algorithms simultaneously.

- 📦 Full Logging: Automatically save models, plots, evaluations, videos, and training stats.

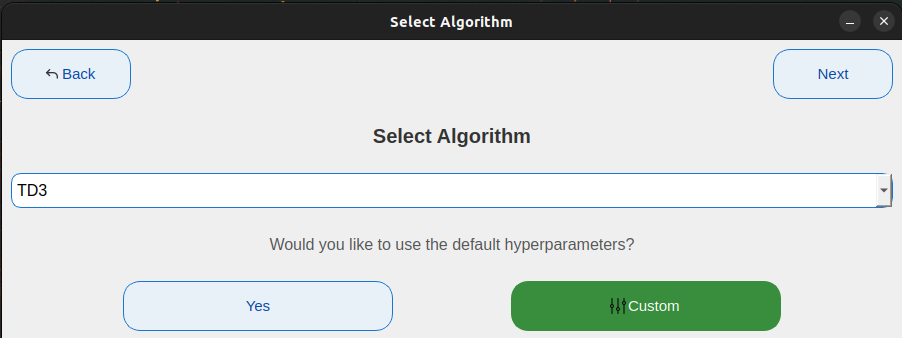

- 🔧 Easy Customization: Adjust hyperparameters or load optimized defaults.

- 🧩 Environment Support: Works with MuJoCo, OpenAI Gymnasium, and DeepMind Control Suite.

- 📊 Final Comparison Plots: Auto-generate publishable comparison graphs for your reports or papers.

-

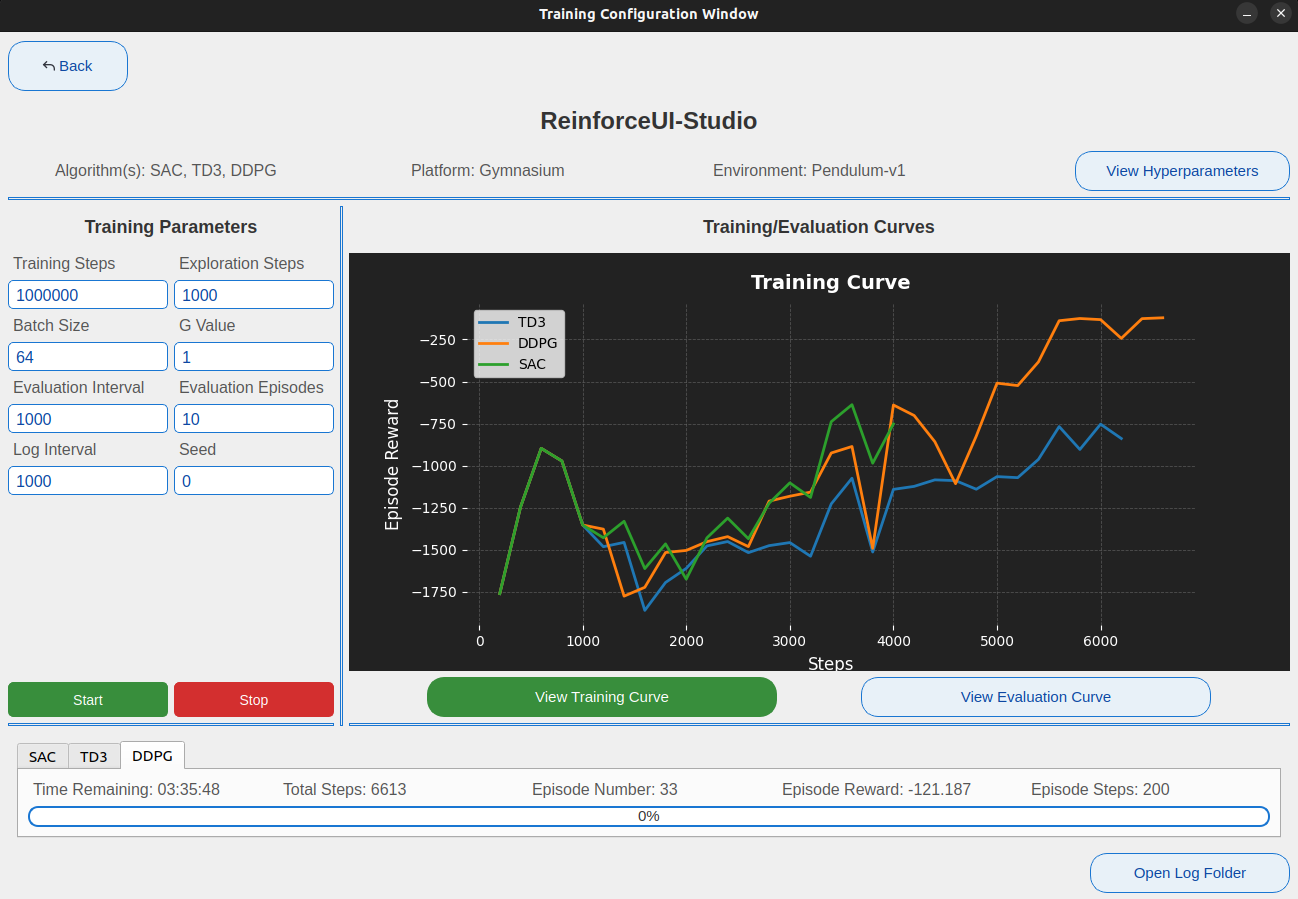

Single Training: Choose an algorithm, tweak parameters, train & visualize.

-

Multi-Training: Select several algorithms, run them simultaneously, and compare performances side-by-side.

[Screenshots: Selection Window, Main Window Display]
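How ReinforceUI Studio schedules multi-training runs internally is not described here. As a rough mental model only, running several algorithms side by side and comparing them can be sketched with the standard library as below. Everything in this block is hypothetical: `train` is a placeholder that returns random numbers instead of training an agent, and `run_multi_training` and `rank_by_mean_reward` are illustrative names, not ReinforceUI Studio APIs.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def train(algorithm: str, episodes: int = 5) -> dict:
    """Hypothetical stand-in for one training run.

    It produces random placeholder rewards (seeded by the algorithm name so
    results are repeatable); no real agent is trained here.
    """
    rng = random.Random(algorithm)
    rewards = [rng.uniform(0.0, 1.0) for _ in range(episodes)]
    return {"algorithm": algorithm, "rewards": rewards}


def run_multi_training(algorithms, episodes: int = 5) -> dict:
    """Run one placeholder job per algorithm concurrently; return results by name."""
    workers = max(1, len(algorithms))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {name: pool.submit(train, name, episodes) for name in algorithms}
        return {name: future.result() for name, future in futures.items()}


def rank_by_mean_reward(results: dict) -> list:
    """Return (algorithm, mean reward) pairs sorted from best to worst."""
    scores = [(name, mean(run["rewards"])) for name, run in results.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    results = run_multi_training(["SAC", "TD3", "PPO"])
    for name, score in rank_by_mean_reward(results):
        print(f"{name}: mean placeholder reward {score:.3f}")
```

The point of the sketch is the shape of the workflow: one job per selected algorithm, a shared collection step, and a single comparison at the end.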

[Screenshots: Example of MLflow Dashboard, Example of MLflow Metrics]

ReinforceUI Studio supports the following algorithms:

| Algorithm | Description |
|---|---|
| CTD4 | Continuous Distributional Actor-Critic Agent with a Kalman Fusion of Multiple Critics |
| DDPG | Deep Deterministic Policy Gradient |
| DQN | Deep Q-Network |
| PPO | Proximal Policy Optimization |
| SAC | Soft Actor-Critic |
| TD3 | Twin Delayed Deep Deterministic Policy Gradient |
| TQC | Controlling Overestimation Bias with Truncated Mixture of Continuous Distributional Quantile Critics |
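When picking an algorithm from the table above, a practical first filter is the environment's action space. The lookup below is a small sketch based on how these algorithms are generally described in the literature (for example, DQN targets discrete actions, while DDPG, TD3, and SAC target continuous ones). It is an assumption for illustration, not a statement about which combinations ReinforceUI Studio itself allows; `ACTION_SPACES` and `compatible_algorithms` are hypothetical names.

```python
# Action-space types each algorithm is generally designed for in the
# literature. Assumption for illustration; not taken from ReinforceUI Studio.
ACTION_SPACES = {
    "CTD4": {"continuous"},
    "DDPG": {"continuous"},
    "DQN": {"discrete"},
    "PPO": {"continuous", "discrete"},
    "SAC": {"continuous"},
    "TD3": {"continuous"},
    "TQC": {"continuous"},
}


def compatible_algorithms(action_space: str) -> list:
    """Return the algorithm names (sorted) usually suited to `action_space`."""
    if action_space not in ("continuous", "discrete"):
        raise ValueError("action_space must be 'continuous' or 'discrete'")
    return sorted(
        name for name, spaces in ACTION_SPACES.items() if action_space in spaces
    )


if __name__ == "__main__":
    print("discrete:", compatible_algorithms("discrete"))
    print("continuous:", compatible_algorithms("continuous"))
```

For example, a discrete-action task such as CartPole would narrow the choice to the entries returned for `"discrete"`.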

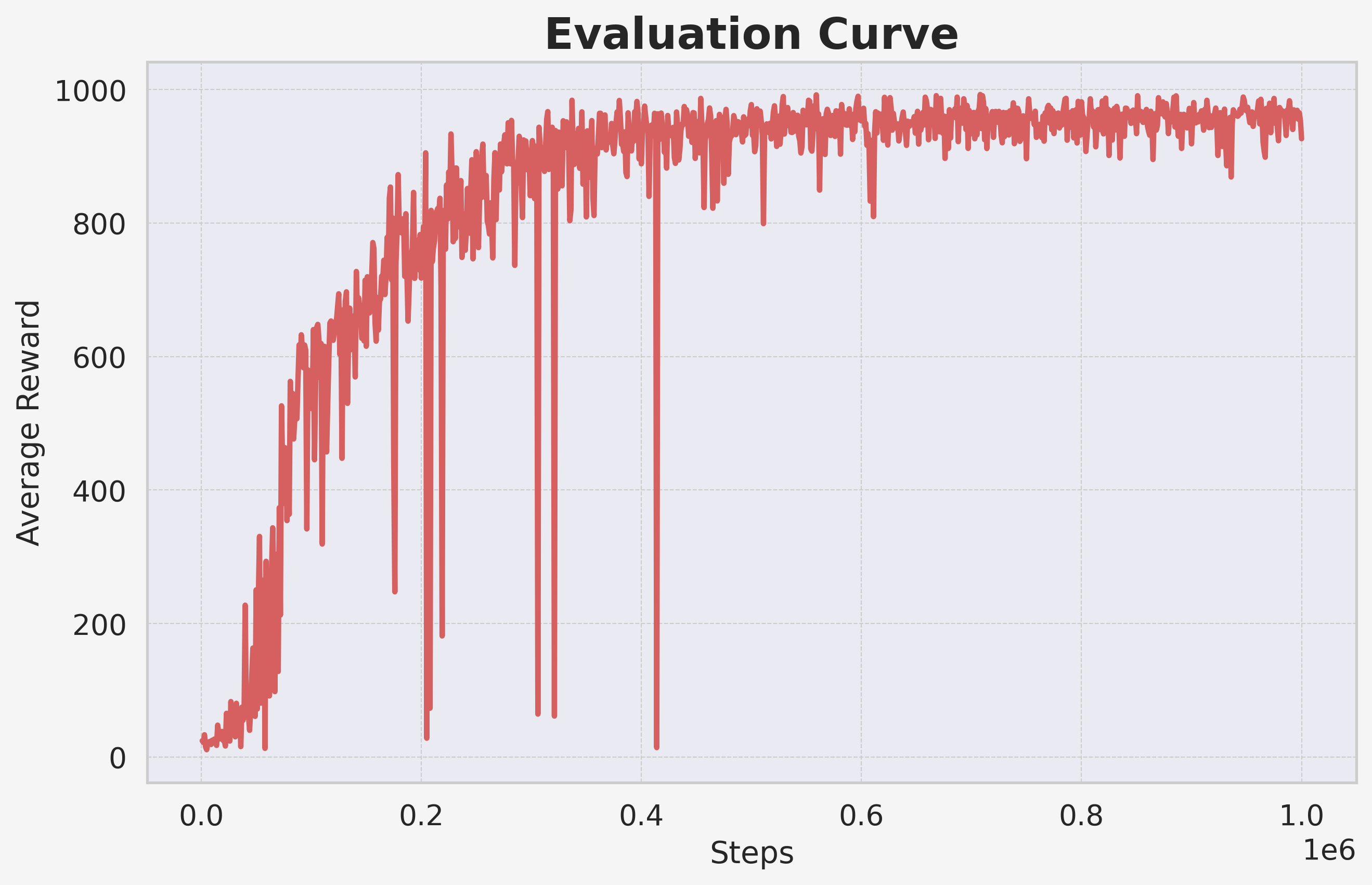

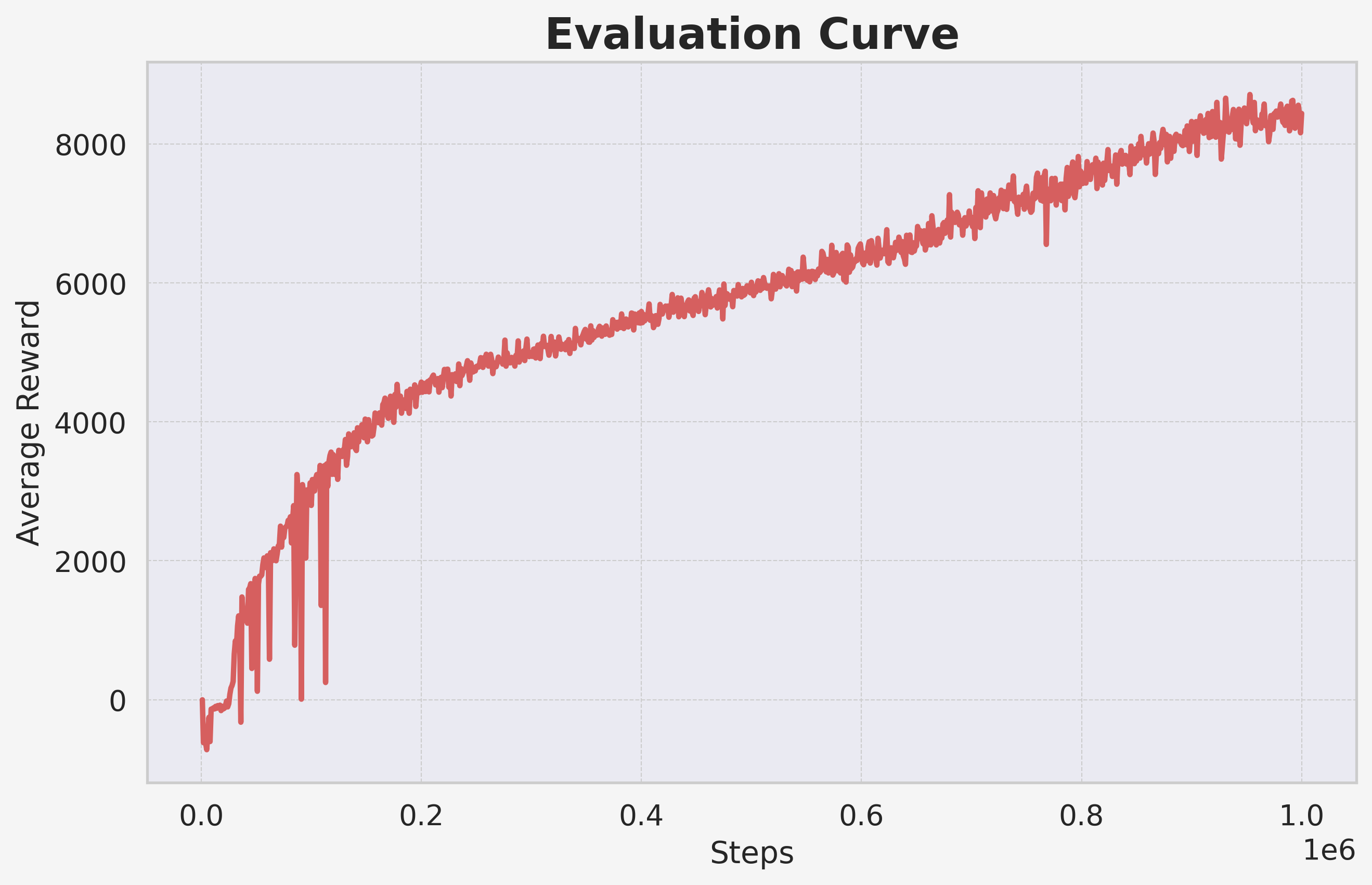

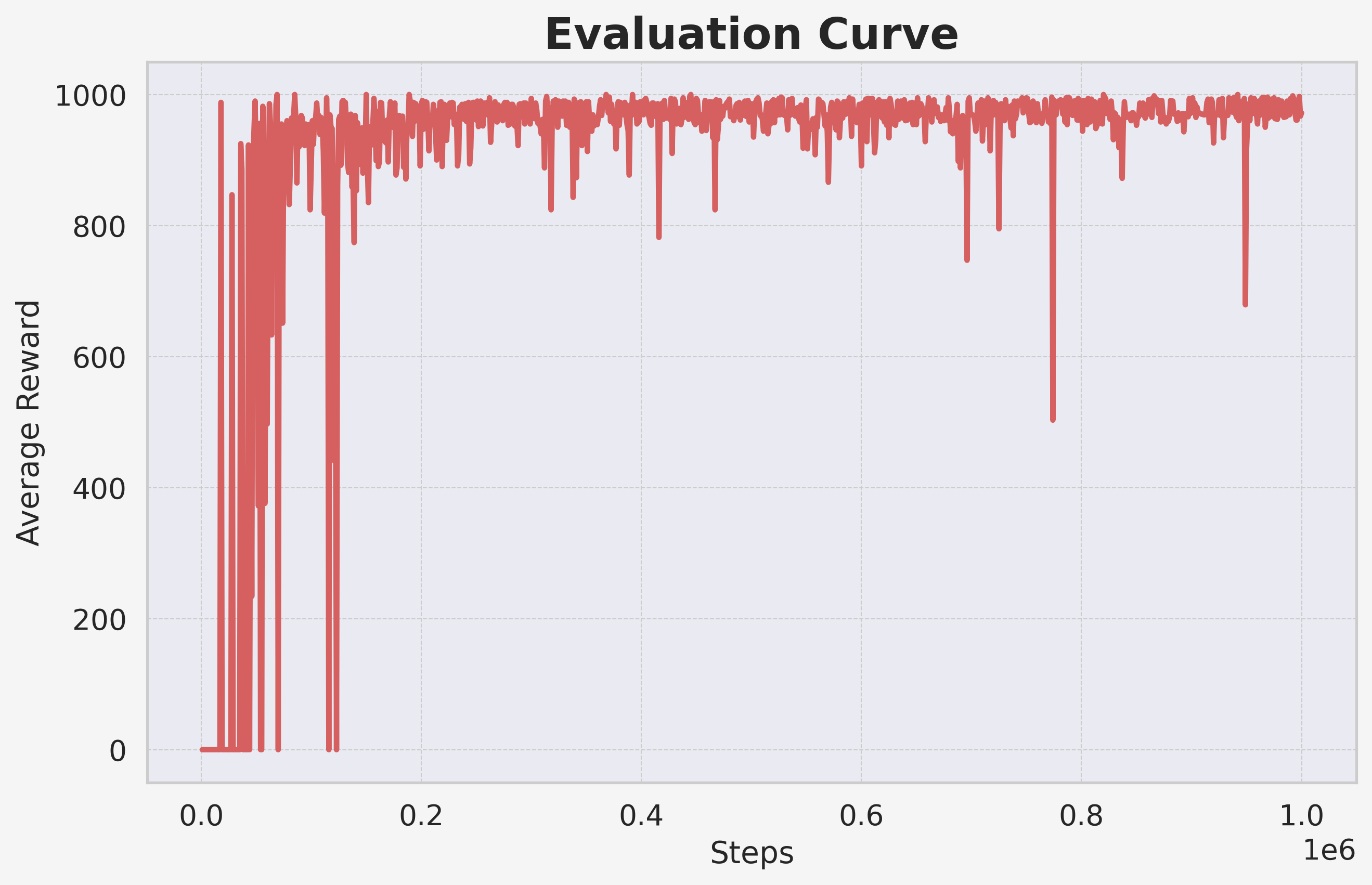

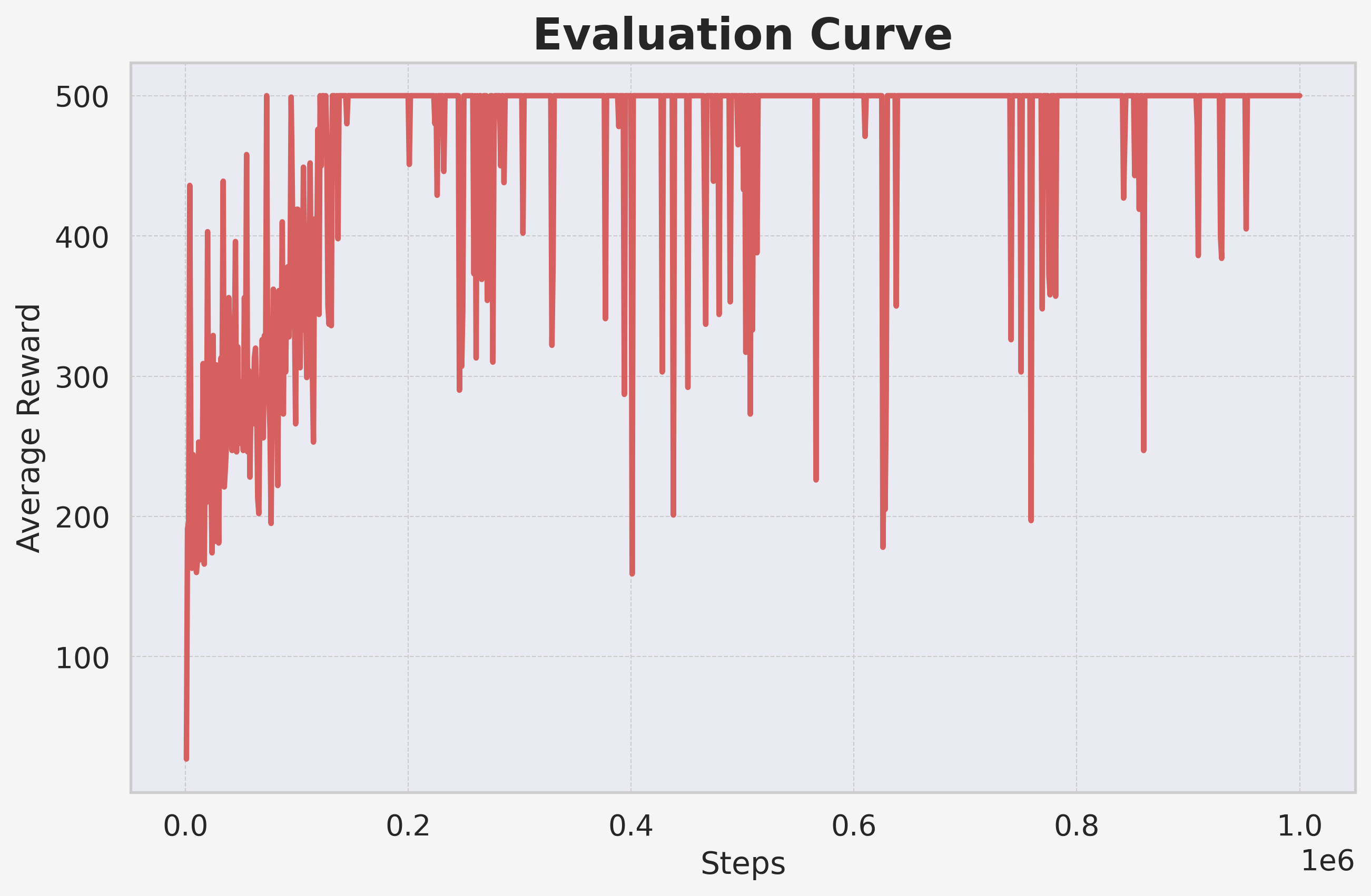

Below are some examples of results generated by ReinforceUI Studio, showcasing the evaluation curves along with snapshots of the policies in action.

| Algorithm | Platform | Environment | Curve | Video |
|---|---|---|---|---|
| SAC | DMCS | Walker Walk |  |  |
| TD3 | MuJoCo | HalfCheetah v5 |  |  |
| CTD4 | DMCS | Ball in cup catch |  |  |
| DQN | Gymnasium | CartPole v1 |  |  |

If you find ReinforceUI Studio useful for your research or project, please star this repo and cite it as follows:

    @misc{reinforce_ui_studio_2025,
      title = {ReinforceUI Studio: Simplifying Reinforcement Learning Training and Monitoring},
      author = {David Valencia Redrovan},
      year = {2025},
      publisher = {GitHub},
      url = {https://github.com/dvalenciar/ReinforceUI-Studio}
    }

Your support helps the project grow! If you like ReinforceUI Studio, please star ⭐ this repository and share it with friends, colleagues, and the RL community! Together, we can make Reinforcement Learning accessible to everyone!

ReinforceUI Studio is licensed under the MIT License. You are free to use, modify, and distribute this software, provided that the original copyright notice and license are included in any copies or substantial portions of the software.