Savannah E-commerce Analytics is a project designed to provide insightful analytics and reporting for e-commerce platforms. The project leverages data engineering and cloud technologies to streamline data processing and visualization, enabling businesses to make data-driven decisions efficiently.

Before setting up the environment, ensure you have the following:

- Python installed (version 3.6 or higher)

- A Google Cloud Platform (GCP) account

- Terraform installed
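The local prerequisites can be checked with a short script before going further. This is a minimal sketch, not part of the project: the function name `missing_prerequisites` is hypothetical, and it only covers what can be tested locally (the Python version and whether a `terraform` executable is on `PATH`), not the GCP account.

```python
import shutil
import sys

MIN_PYTHON = (3, 6)  # minimum version stated in the prerequisites


def missing_prerequisites(version_info=sys.version_info, which=shutil.which):
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if tuple(version_info[:2]) < MIN_PYTHON:
        problems.append("Python 3.6 or higher is required")
    # shutil.which returns None when the executable is not on PATH.
    if which("terraform") is None:
        problems.append("terraform was not found on PATH")
    return problems


for problem in missing_prerequisites():
    print("Missing prerequisite:", problem)
```

The `version_info` and `which` parameters exist only so the check can be exercised without changing the machine it runs on.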

On macOS or Linux:

- Open your terminal.

- Navigate to your project directory.

- Create a virtual environment by running:

python3 -m venv venv

- Activate the virtual environment:

source venv/bin/activate

On Windows:

- Open Command Prompt or PowerShell.

- Navigate to your project directory.

- Create a virtual environment by running:

python -m venv venv

- Activate the virtual environment:

venv\Scripts\activate
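To confirm that activation worked, Python itself can report whether it is running inside a virtual environment. A small sketch, assuming the environment was created with the standard `venv` module as above; the helper name `in_virtualenv` is made up for illustration.

```python
import sys


def in_virtualenv(prefix=None, base_prefix=None):
    """Return True when the interpreter is running inside a venv."""
    # Inside a venv, sys.prefix points at the environment directory while
    # sys.base_prefix still points at the base Python installation.
    prefix = sys.prefix if prefix is None else prefix
    base_prefix = sys.base_prefix if base_prefix is None else base_prefix
    return prefix != base_prefix


print("virtual environment active:", in_virtualenv())
```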

After activating your virtual environment, install the required Python modules by running:

pip install -r requirements.txt

To install Terraform, follow the instructions on the HashiCorp Terraform installation guide.

To install the Google Cloud SDK on Debian or Ubuntu:

- Update your package list:

sudo apt update

- Install required packages:

sudo apt install -y apt-transport-https ca-certificates gnupg

- Add the Google Cloud SDK distribution URI as a package source:

echo "deb [signed-by=/usr/share/keyrings/cloud.google.gpg] https://packages.cloud.google.com/apt cloud-sdk main" | sudo tee -a /etc/apt/sources.list.d/google-cloud-sdk.list

- Import the Google Cloud public key:

curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key --keyring /usr/share/keyrings/cloud.google.gpg add -

- Update and install the Google Cloud SDK:

sudo apt update && sudo apt install google-cloud-sdk

- Initialize the SDK (optional):

gcloud init

- Verify the installation:

gcloud version

To use your Google Cloud credentials, run the following command:

gcloud auth application-default login

Create a terraform.tfvars file with the following contents:

project_id = "your-google-cloud-project-id"

region = "us-central1"

admin_email = "your-admin-email@example.com"

Ensure the following Google Cloud APIs are enabled:

- Cloud Storage API

- BigQuery API

- Cloud Composer API
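The APIs can be enabled in the Cloud Console or from the command line with `gcloud services enable`. The sketch below only builds and prints that command. The service names are assumed to be the identifiers for the three APIs listed above, and `enable_services_command` is a hypothetical helper, so check both against your project before running the output.

```python
# Service identifiers assumed to correspond to the three APIs listed above.
REQUIRED_SERVICES = [
    "storage.googleapis.com",   # Cloud Storage API
    "bigquery.googleapis.com",  # BigQuery API
    "composer.googleapis.com",  # Cloud Composer API
]


def enable_services_command(project_id, services=REQUIRED_SERVICES):
    """Build the argument list for `gcloud services enable`."""
    return ["gcloud", "services", "enable"] + list(services) + ["--project", project_id]


# Print the command instead of running it so it can be reviewed first.
print(" ".join(enable_services_command("your-google-cloud-project-id")))
```

The returned list can be passed directly to `subprocess.run` once the project ID has been replaced with a real one.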

- Initialize Terraform:

terraform init

- Plan your Terraform configuration:

terraform plan

- Apply the Terraform configuration:

terraform apply
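The three Terraform steps can also be driven from Python, which is handy when the setup is scripted. This is a sketch under assumptions: `run_terraform` is a hypothetical wrapper, it must be started from the directory that holds the Terraform configuration, and `terraform apply` still asks for interactive confirmation.

```python
import subprocess

# The same three commands as above, in the order they must run.
TERRAFORM_STEPS = [
    ["terraform", "init"],
    ["terraform", "plan"],
    ["terraform", "apply"],
]


def run_terraform(run=subprocess.run, steps=TERRAFORM_STEPS):
    """Run each step in order and return the commands that completed.

    With check=True, subprocess.run raises CalledProcessError on a non-zero
    exit status, so a failed step stops the sequence before the next one.
    """
    completed = []
    for command in steps:
        run(command, check=True)
        completed.append(" ".join(command))
    return completed


# Usage (not executed here): run_terraform()
```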

Get the Cloud Composer bucket URL using:

terraform output composer_bucket

The bucket URL will be in the following format:

gs://us-central1-ecommerce-airfl-d927de92-bucket
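The later commands need only the bucket name, not the full URL. A minimal sketch of extracting it follows; `bucket_name` is a hypothetical helper, and it assumes the Terraform output may arrive wrapped in double quotes or followed by a path such as `/dags`.

```python
from urllib.parse import urlparse


def bucket_name(gs_url):
    """Extract the bucket name from a gs:// URL, ignoring any path after it."""
    # Drop surrounding whitespace and the quotes Terraform may print.
    cleaned = gs_url.strip().strip('"')
    parsed = urlparse(cleaned)
    if parsed.scheme != "gs" or not parsed.netloc:
        raise ValueError("expected a gs:// URL, got %r" % gs_url)
    return parsed.netloc


print(bucket_name("gs://us-central1-ecommerce-airfl-d927de92-bucket"))
```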

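Cloud Storage has no real directories, and gsutil has no mkdir command, so the scripts folder does not need to be created up front: the dags/scripts/ prefix appears as soon as the first file is copied into it. In the commands below, replace [BUCKET_NAME] with the name of your Composer bucket.

Copy the main DAG file to the root dags folder: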

gsutil cp scripts/savannah-dag.py gs://[BUCKET_NAME]/dags/

Upload each script to the scripts folder:

Extract files:

gsutil cp scripts/extract/api-data-extraction.py gs://[BUCKET_NAME]/dags/scripts/

Load files:

gsutil cp scripts/load/cart-bq-loader.py gs://[BUCKET_NAME]/dags/scripts/

gsutil cp scripts/load/product-bq-loader.py gs://[BUCKET_NAME]/dags/scripts/

gsutil cp scripts/load/user-bq-loader.py gs://[BUCKET_NAME]/dags/scripts/

Transform file:

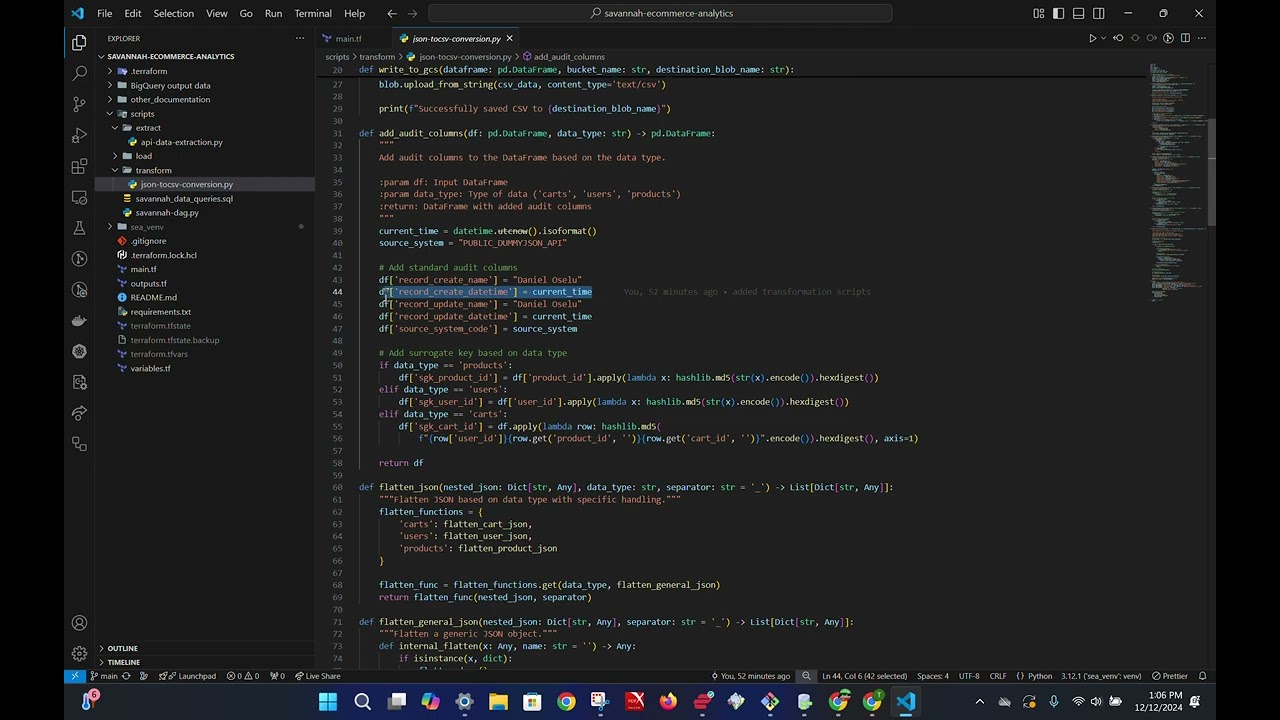

gsutil cp scripts/transform/json-tocsv-conversion.py gs://[BUCKET_NAME]/dags/scripts/

Verify the files are correctly placed:

gsutil ls gs://[BUCKET_NAME]/dags/

gsutil ls gs://[BUCKET_NAME]/dags/scripts/

Expected structure:

    dags/
    ├── savannah-dag.py
    └── scripts/
        ├── api-data-extraction.py
        ├── cart-bq-loader.py
        ├── json-tocsv-conversion.py
        ├── product-bq-loader.py
        └── user-bq-loader.py
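The verification step can be automated by comparing the `gsutil ls` output with the layout above. The following sketch assumes `gsutil ls` prints one full `gs://` URL per line. The helper names `expected_objects` and `missing_objects` are hypothetical, and the local paths are copied from the upload commands in this guide.

```python
# Local paths taken from the upload commands in this guide.
MAIN_DAG = "scripts/savannah-dag.py"
TASK_SCRIPTS = [
    "scripts/extract/api-data-extraction.py",
    "scripts/load/cart-bq-loader.py",
    "scripts/load/product-bq-loader.py",
    "scripts/load/user-bq-loader.py",
    "scripts/transform/json-tocsv-conversion.py",
]


def expected_objects(bucket):
    """Return the object URLs the bucket should contain after the uploads."""
    dags = "gs://%s/dags" % bucket
    urls = ["%s/%s" % (dags, MAIN_DAG.rsplit("/", 1)[-1])]
    # Every task script lands flat in dags/scripts/, whatever its local folder.
    urls += ["%s/scripts/%s" % (dags, path.rsplit("/", 1)[-1]) for path in TASK_SCRIPTS]
    return urls


def missing_objects(bucket, listing):
    """Return the expected URLs that do not appear in the listing lines."""
    present = {line.strip() for line in listing}
    return [url for url in expected_objects(bucket) if url not in present]
```

Pass the combined lines from both `gsutil ls` commands as `listing`; an empty result means every expected file is in place.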