This project is an automated proctoring system that monitors the user through the webcam and microphone. It is divided into two parts: vision-based and audio-based functionality.

The vision part currently has six functionalities:

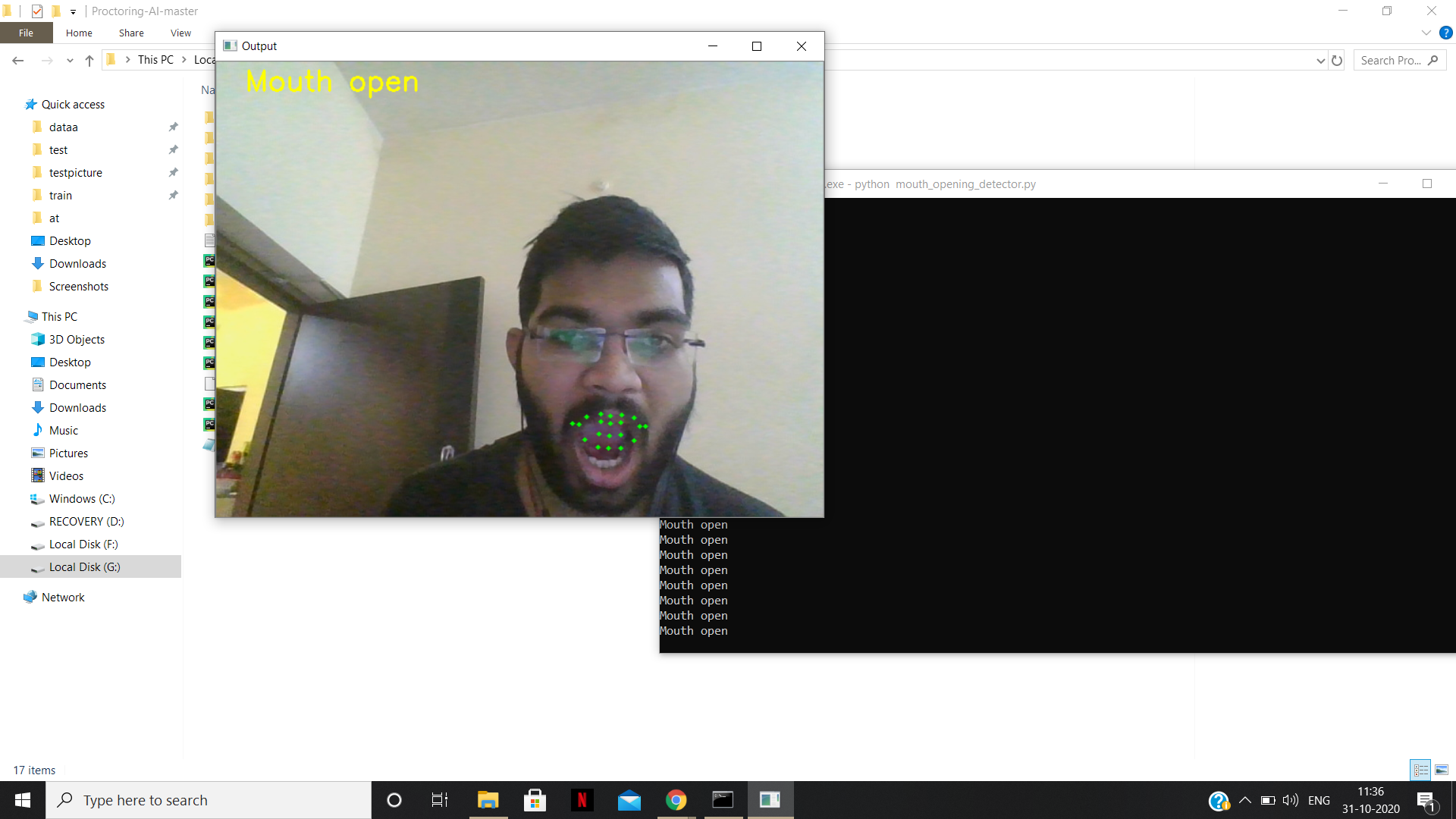

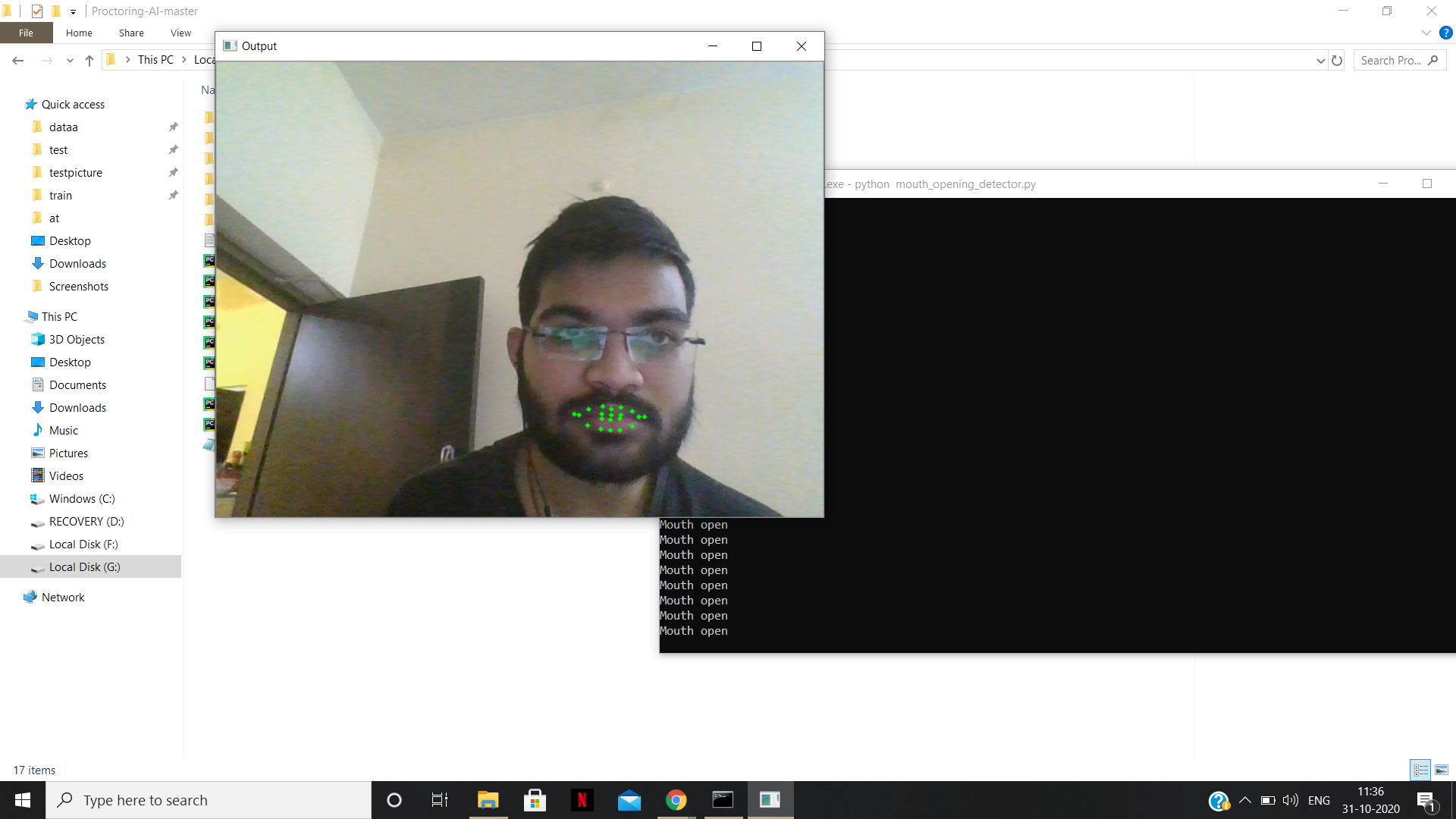

- Detect whether the candidate opens their mouth, by recording the distance between the lips at the start and comparing later frames against it.

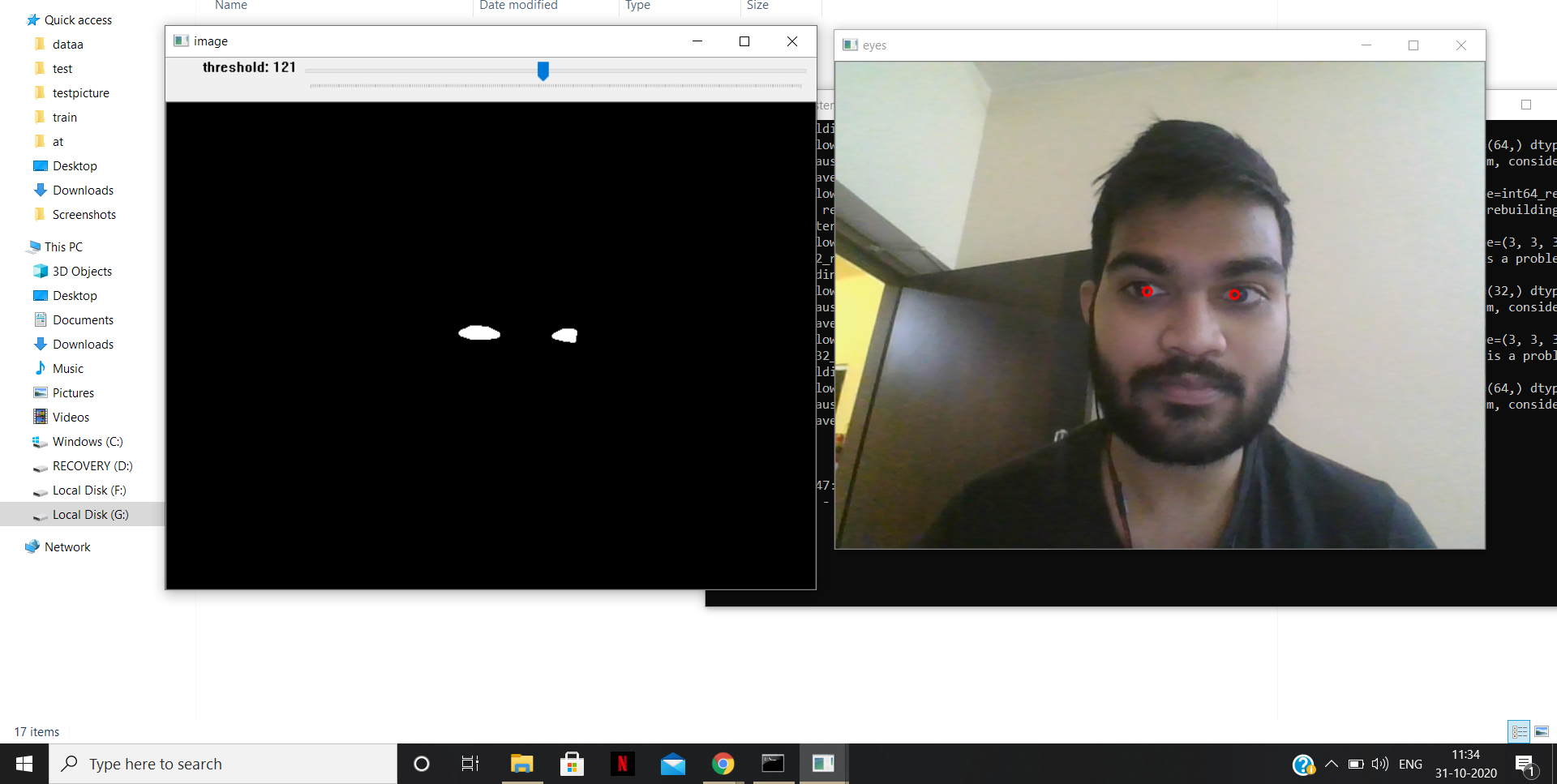

- Track the eyeballs and report if the candidate is looking left, right, or up.

- Detect people with YOLOv3 to count them, and report if no one or more than one person is detected.

- Detect and report any mobile phones in the frame.

- Estimate head pose to find where the person is looking.

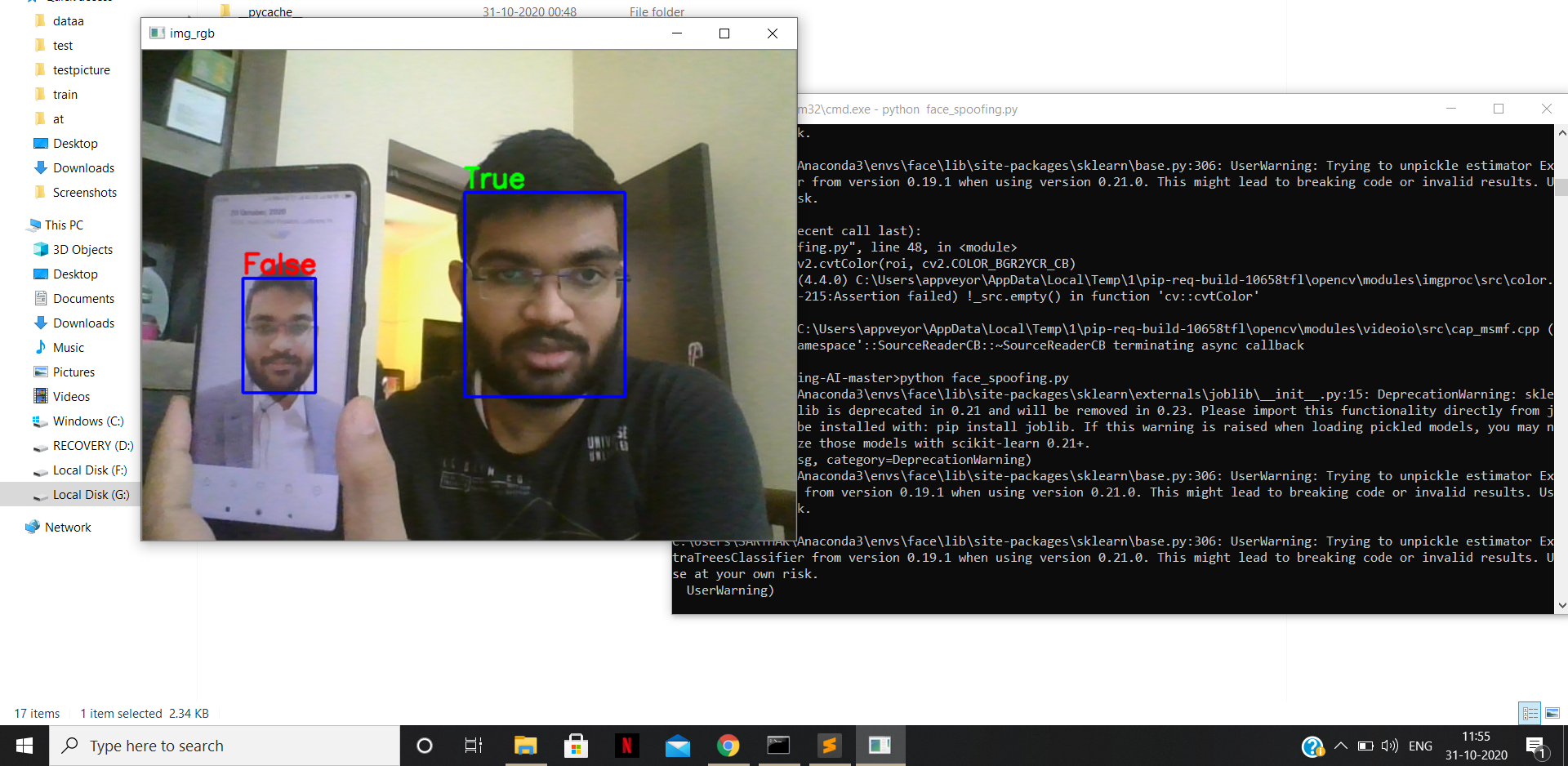

- Detect face spoofing, i.e. whether the face is real or a photograph.

Face detection is implemented in face detector.py and is used for eye tracking, mouth-opening detection, head pose estimation, and face spoofing detection.

An additional quantized model is also provided for the face detector.

Facial landmark detection is implemented in face landmarks.py and is used for eye tracking, mouth-opening detection, and head pose estimation.

eye detector.py tracks the eyes.
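The eye-tracking code itself is not shown in this README. As a minimal sketch, assuming the pupil center and the eye's bounding box have already been located (for example from the facial landmarks), gaze can be classified by where the pupil sits inside the box. The function name `gaze_direction` and both thresholds are hypothetical, not taken from eye detector.py.

```python
from typing import Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def gaze_direction(pupil: Point, eye_box: Box,
                   side_ratio: float = 0.35, up_ratio: float = 0.3) -> str:
    """Classify gaze from the pupil's position inside the eye's bounding box.

    Image coordinates are assumed, so y grows downwards. "left"/"right" refer
    to the image; whether that is the candidate's left depends on whether the
    frame is mirrored. The ratios are hypothetical defaults that need tuning.
    """
    x_min, y_min, x_max, y_max = eye_box
    x_ratio = (pupil[0] - x_min) / (x_max - x_min)  # 0 = left edge, 1 = right edge
    y_ratio = (pupil[1] - y_min) / (y_max - y_min)  # 0 = top edge, 1 = bottom edge
    if y_ratio < up_ratio:
        return "up"
    if x_ratio < side_ratio:
        return "left"
    if x_ratio > 1 - side_ratio:
        return "right"
    return "center"
```

In practice the result would be computed for both eyes and reported only when they agree, which reduces false alerts from one poorly segmented eye.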

mouth detector.py checks whether the candidate opens their mouth during the exam, relative to the lip distance recorded at the start.
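The calibrate-then-compare idea can be sketched as follows, assuming the upper-lip and lower-lip landmark points are already available as paired (x, y) pixel coordinates. `lip_distance`, `MouthOpenDetector`, and the 3-pixel margin are hypothetical names and values, not mouth detector.py's actual code.

```python
import math
from statistics import mean
from typing import Iterable, Optional, Sequence, Tuple

Point = Tuple[float, float]


def lip_distance(upper: Sequence[Point], lower: Sequence[Point]) -> float:
    """Mean distance between paired upper-lip and lower-lip landmark points."""
    return mean(math.dist(u, l) for u, l in zip(upper, lower))


class MouthOpenDetector:
    """Flags an open mouth relative to a baseline recorded at the start."""

    def __init__(self, margin: float = 3.0) -> None:
        # Hypothetical margin (pixels) above the baseline that counts as "open".
        self.margin = margin
        self.baseline: Optional[float] = None

    def calibrate(self, distances: Iterable[float]) -> None:
        """Record the resting lip distance from the first few frames."""
        self.baseline = mean(distances)

    def is_open(self, distance: float) -> bool:
        """Return True if the current lip distance exceeds baseline + margin."""
        if self.baseline is None:
            raise RuntimeError("call calibrate() before is_open()")
        return distance > self.baseline + self.margin
```

Averaging several calibration frames rather than using one makes the baseline less sensitive to a single noisy landmark estimate.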

face on phone.py counts people and detects mobile phones, using YOLOv3 implemented in TensorFlow 2.
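Once YOLOv3 has run on a frame, the person-count and phone alerts reduce to simple post-processing. This sketch assumes the detections have already been decoded into (class name, confidence) pairs using the COCO label names `person` and `cell phone`; the function name `check_frame` and the 0.5 confidence threshold are assumptions.

```python
from typing import Iterable, List, Tuple

Detection = Tuple[str, float]  # (COCO class name, confidence score)


def check_frame(detections: Iterable[Detection],
                min_score: float = 0.5) -> Tuple[int, List[str]]:
    """Count confident person detections and look for phones in one frame."""
    detections = list(detections)
    persons = sum(1 for label, score in detections
                  if label == "person" and score >= min_score)
    phone_seen = any(label == "cell phone" and score >= min_score
                     for label, score in detections)
    alerts: List[str] = []
    if persons == 0:
        alerts.append("No person detected")
    elif persons > 1:
        alerts.append("More than one person detected")
    if phone_seen:
        alerts.append("Mobile phone detected")
    return persons, alerts
```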

head pose detect.py estimates which direction the head is facing.
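The README does not say how the head direction is computed. One common approach, assumed here, is to fit a generic 3D face model to 2D landmarks with OpenCV's `cv2.solvePnP`, convert the resulting rotation vector to a matrix with `cv2.Rodrigues`, and read off Euler angles. The sketch below covers only that last step, in plain Python; the ZYX angle convention, the 20° limit, and the mapping of angle signs to "left"/"right"/"up"/"down" are assumptions that depend on the face model's axes.

```python
import math
from typing import Sequence, Tuple

Matrix = Sequence[Sequence[float]]  # 3x3 rotation matrix, row-major


def euler_angles(R: Matrix) -> Tuple[float, float, float]:
    """Return (pitch, yaw, roll) in degrees for R = Rz(roll) @ Ry(yaw) @ Rx(pitch).

    Gimbal lock (yaw near +/-90 degrees) is not handled in this sketch.
    """
    pitch = math.atan2(R[2][1], R[2][2])
    yaw = math.atan2(-R[2][0], math.hypot(R[2][1], R[2][2]))
    roll = math.atan2(R[1][0], R[0][0])
    return math.degrees(pitch), math.degrees(yaw), math.degrees(roll)


def head_direction(R: Matrix, limit: float = 20.0) -> str:
    """Map yaw/pitch to a coarse direction; the sign-to-label mapping is assumed."""
    pitch, yaw, _ = euler_angles(R)
    if yaw > limit:
        return "right"
    if yaw < -limit:
        return "left"
    if pitch > limit:
        return "down"
    if pitch < -limit:
        return "up"
    return "forward"
```

Checking the labels against a few recorded frames of a known head turn is the quickest way to confirm the sign convention for a given face model.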

fake face detection.py determines whether the face is real or a photograph or other image.
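The README does not describe the classifier used in fake face detection.py. Whatever per-frame real/fake prediction it produces, one simple way to avoid raising an alert on a single misclassified frame is a sliding-window majority vote, sketched here as an assumption rather than the project's method. `SpoofVote` and the 15-frame window (about 0.6 s at 25 FPS) are hypothetical.

```python
from collections import deque
from typing import Deque


class SpoofVote:
    """Sliding-window majority vote over per-frame "is this a real face?" results."""

    def __init__(self, window: int = 15) -> None:
        # Only the most recent `window` predictions are kept.
        self.history: Deque[bool] = deque(maxlen=window)

    def update(self, frame_is_real: bool) -> bool:
        """Add one prediction; return True if most recent frames look spoofed."""
        self.history.append(frame_is_real)
        fake_frames = self.history.count(False)
        return fake_frames > len(self.history) / 2
```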

The system is designed to be robust, scalable, and cross-platform, and to work in both strong and weak lighting. It runs at 25 FPS on an Intel Core i5 CPU.

The audio-based functionality is divided into two parts:

- Audio from the microphone is recorded and converted to text using Google's speech recognition API. The API is called from a separate thread so that recording is not interrupted; this thread processes the most recent recording, appends the transcribed text to a text file, and deletes the recording.

- Using NLTK, stopwords are removed from that text file. Stopwords are also removed from the question paper (in .txt format), and the two are compared. Finally, the common words and their counts are presented to the proctor.

Challenges and future work:

- Getting the entire product ready in such a short time was a major challenge.

- Speech-to-text conversion may not work well for all languages. NLP will also be used in later stages.
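The recording/transcription split in the first audio step is a producer-consumer pattern. The sketch below assumes each recording is saved as a chunk file whose path is handed to a worker thread through a queue; the worker transcribes it, appends the text to a transcript file, and deletes the chunk. `transcribe` is a placeholder: with the SpeechRecognition package it could open the chunk with `sr.AudioFile`, read it with `Recognizer.record`, and call `Recognizer.recognize_google`, but that wiring is an assumption and is omitted so the sketch runs offline. Unlike the description above, which processes the latest recording, this sketch handles chunks in arrival order. The function names are hypothetical.

```python
import os
import queue
import threading
from typing import Callable, Iterable, Optional


def transcription_worker(chunks: "queue.Queue[Optional[str]]",
                         transcribe: Callable[[str], str],
                         transcript_path: str) -> None:
    """Consume recorded audio chunk paths until a None sentinel arrives."""
    while True:
        chunk_path = chunks.get()
        if chunk_path is None:  # sentinel: recording has stopped
            break
        text = transcribe(chunk_path)
        # Append so that text from earlier chunks is kept.
        with open(transcript_path, "a", encoding="utf-8") as out:
            out.write(text + "\n")
        os.remove(chunk_path)  # the chunk is no longer needed


def run_pipeline(chunk_paths: Iterable[str],
                 transcribe: Callable[[str], str],
                 transcript_path: str) -> None:
    """Hand finished chunks to a worker thread, as the recorder would."""
    chunks: "queue.Queue[Optional[str]]" = queue.Queue()
    worker = threading.Thread(target=transcription_worker,
                              args=(chunks, transcribe, transcript_path))
    worker.start()
    for path in chunk_paths:
        chunks.put(path)  # in the real system, each chunk is queued as soon as it is saved
    chunks.put(None)
    worker.join()
```

Because the main thread only enqueues a path, a slow network call to the speech API delays the transcript but not the recording itself.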
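The comparison in the second audio step can be sketched with a `Counter`. In the project the stopword list comes from NLTK (for example `set(nltk.corpus.stopwords.words("english"))` after `nltk.download("stopwords")`); here it is passed in as a parameter, and tokenization uses a simple regular expression instead of an NLTK tokenizer. The function names are hypothetical, and "count" is assumed to mean how many times each common word was spoken.

```python
import re
from collections import Counter
from typing import Dict, Iterable


def content_words(text: str, stop_words: Iterable[str]) -> Counter:
    """Lower-case the text, split it into letter/apostrophe tokens, drop stopwords."""
    stops = {w.lower() for w in stop_words}
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(t for t in tokens if t not in stops)


def common_words(transcript: str, question_paper: str,
                 stop_words: Iterable[str]) -> Dict[str, int]:
    """Spoken words that also appear in the question paper, with spoken counts."""
    stops = set(stop_words)  # materialize once so it can be reused below
    spoken = content_words(transcript, stops)
    paper = content_words(question_paper, stops)
    return {word: count for word, count in spoken.items() if word in paper}
```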