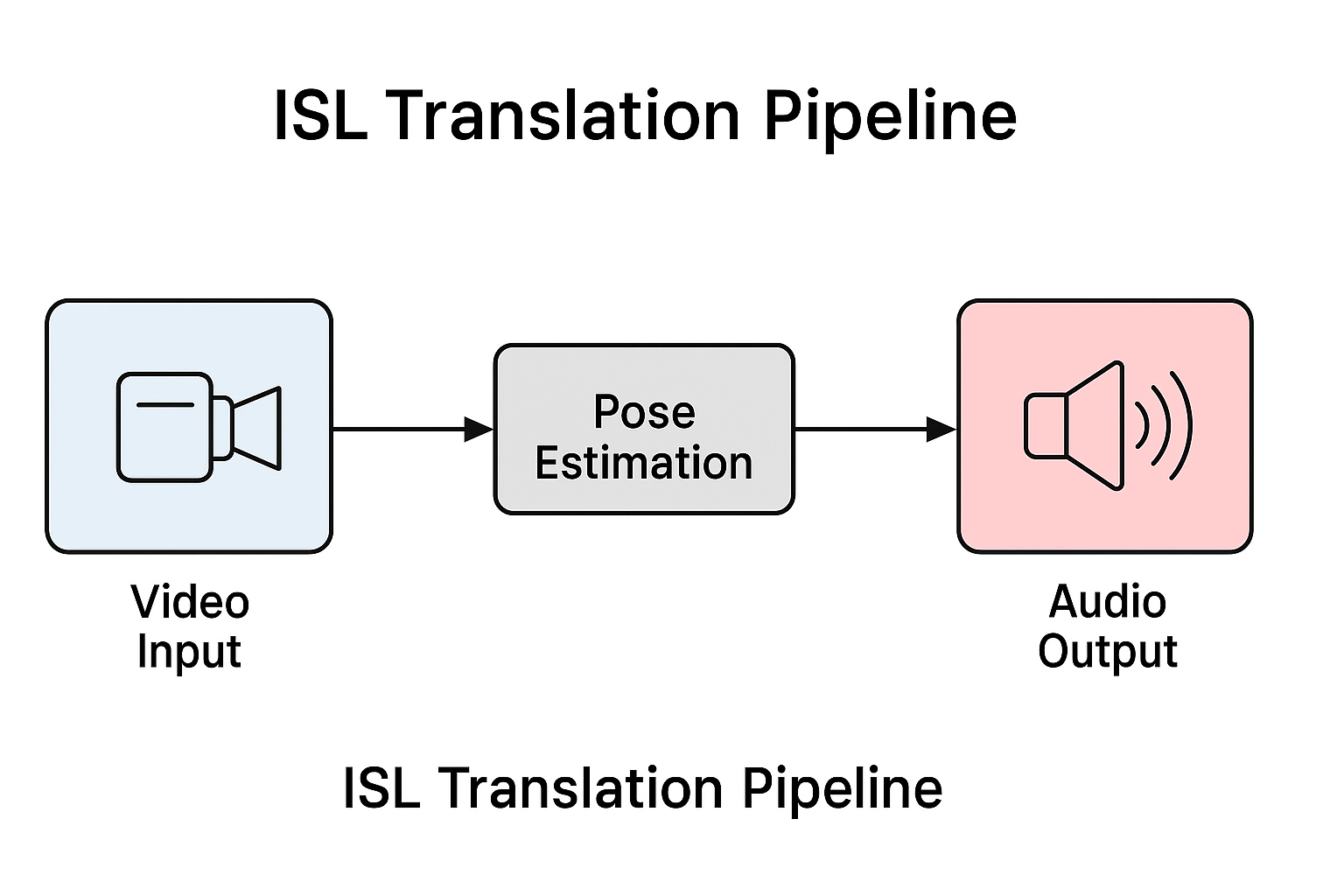

A real-time, deep learning-based system that translates Indian Sign Language (ISL) gestures into text and speech using MediaPipe, PyTorch, and TTS engines such as Coqui-TTS and pyttsx3. It is designed to improve communication accessibility and bridge the gap between signers and non-signers.

- 🎥 Real-time Keypoint Extraction using MediaPipe (Hands + Pose + Face)

- 🤖 Deep Learning Model for gesture-to-text mapping

- 🔊 Text-to-Speech Integration for verbal output

- 🖥️ Supports both Webcam and Video File inputs

- 🔁 Smoothing and confidence filtering for stable prediction

- 🧠 Multi-task loss design for better gesture understanding

- 💡 Modular, production-ready pipeline with clear APIs

- 🌐 Designed for research, healthcare, education, and accessibility

| Sector | Use Case |
|---|---|
| 🏥 Healthcare | Doctor-patient communication in silent wards or speech-impaired cases |
| 🎓 Education | Teaching and learning sign language via feedback and automation |
| 🧏 Accessibility | Public service counters, train stations, airports for ISL speakers |
| 🛒 Retail | Gesture-based customer service kiosks or checkout counters |
| 🏠 Smart Homes | Hands-free control using sign commands |
| 🔬 Research | Building datasets, evaluating sign language learning, HCI studies |

Clone the repository and set up the environment:

    git clone git@github.com:avinash064/ISL-Translator.git
    cd ISL-Translator
    conda create -n isl-translator python=3.10 -y
    conda activate isl-translator
    pip install -r requirements.txt

💡 Optionally, install Coqui-TTS:

    pip install TTS

Model architecture:

- 🔹 Input: 3D Keypoints (Pose + Hand + Face) → (T, D) shape

- 🔹 Encoder: BiLSTM/Transformer

- 🔹 Classifier: Fully Connected + Softmax

- 🔹 Loss: Custom Multi-task Loss combining:

  - CrossEntropyLoss

  - CTC Loss

  - Attention Alignment (optional)

  - Label Smoothing
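As a rough illustration of how the loss terms listed above could be combined, here is a minimal, framework-free sketch. It is not the code in `loss.py`: the function names, the weights, and the convention of passing `None` for a disabled optional term are all assumptions made for this example, and the CTC and attention values are placeholders rather than real computations.

```python
import math


def label_smoothed_cross_entropy(logits, target, smoothing=0.1):
    """Cross-entropy of one sample against a label-smoothed one-hot target."""
    num_classes = len(logits)
    # Numerically stable log-softmax.
    max_logit = max(logits)
    log_norm = max_logit + math.log(sum(math.exp(z - max_logit) for z in logits))
    log_probs = [z - log_norm for z in logits]
    loss = 0.0
    for k, log_p in enumerate(log_probs):
        # The true class keeps 1 - smoothing; the smoothing mass is spread
        # uniformly over all classes.
        q = smoothing / num_classes + ((1.0 - smoothing) if k == target else 0.0)
        loss -= q * log_p
    return loss


def combine_losses(terms, weights):
    """Weighted sum of the loss terms; a value of None marks a disabled term.

    Both dictionaries use hypothetical keys chosen for this sketch.
    """
    return sum(weights[name] * value
               for name, value in terms.items()
               if value is not None)


# Example: classification loss plus a placeholder CTC value, attention term off.
ce = label_smoothed_cross_entropy([2.0, 0.5, -1.0], target=0)
total = combine_losses(
    {"ce": ce, "ctc": 1.2, "attention": None},
    {"ce": 1.0, "ctc": 0.5, "attention": 0.1},
)
```

Keeping each term separate until the final weighted sum makes it easy to log the terms individually and to switch the optional attention-alignment term on or off.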

Train the model with:

    python -m models.Training \
        --keypoint_dir "/data/.../keypoints/ISL-CSLTR" \
        --batch_size 32 \
        --epochs 20 \
        --checkpoint "checkpoints/model.pt"

Key modules:

- `keypoint_extractor.py`: Recursively extracts `.npy` keypoints from videos

- `loss.py`: Multi-loss integration with flexibility

- `training_validation.py`: Robust training script with checkpointing and logging
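To make the `(T, D)` keypoint layout more concrete, the sketch below flattens per-frame landmarks into fixed-length vectors. It assumes MediaPipe's usual landmark counts (33 pose, 21 per hand, 468 face-mesh points without iris refinement) and three coordinates per landmark. The ordering of the parts, the zero-filling of undetected parts, and the names `flatten_frame` and `flatten_clip` are hypothetical; the layout actually written by `keypoint_extractor.py` may differ.

```python
# Landmark counts assumed for this sketch (pose, one hand, face mesh).
POSE, HAND, FACE = 33, 21, 468
COORDS = 3  # x, y, z
FEATURE_DIM = (POSE + 2 * HAND + FACE) * COORDS  # D = 1629 under these assumptions


def flatten_frame(pose=None, left_hand=None, right_hand=None, face=None):
    """Concatenate one frame's landmarks into a flat list of length FEATURE_DIM.

    Each argument is a list of (x, y, z) tuples, or None if that body part
    was not detected in the frame; missing parts are zero-filled so every
    frame has the same length.
    """
    vector = []
    parts = ((pose, POSE), (left_hand, HAND), (right_hand, HAND), (face, FACE))
    for points, count in parts:
        if points is None:
            vector.extend([0.0] * (count * COORDS))
            continue
        if len(points) != count:
            raise ValueError(f"expected {count} landmarks, got {len(points)}")
        for x, y, z in points:
            vector.extend((x, y, z))
    return vector


def flatten_clip(frames):
    """Turn a list of per-frame dicts into a T x D nested list."""
    return [flatten_frame(**frame) for frame in frames]


# Example: a two-frame clip where only the pose is detected in the first frame.
clip = flatten_clip([{"pose": [(0.1, 0.2, 0.3)] * POSE}, {}])
```

A fixed `D` per frame lets clips of different lengths `T` be padded and batched along the time axis only.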

Run real-time inference with:

    python -m inference.realtime_pipeline \
        --model_ckpt checkpoints/model.pt \
        --backend torch \
        --fps 15 \
        --smooth 5 \
        --tts en_XX

- Supports both live webcam and video input (`--video`)

- Smooths predictions with majority voting over a sliding window

- Converts output to speech using pyttsx3 or Coqui-TTS
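The smoothing step can be pictured with the following minimal sketch of windowed majority voting with a confidence filter. It is an illustration under assumptions, not the code in `realtime_pipeline.py`: the class name `PredictionSmoother`, the `min_confidence` threshold, and the idea that `--smooth 5` corresponds to a window of five frames are all hypothetical.

```python
from collections import Counter, deque


class PredictionSmoother:
    """Majority vote over the most recent confident frame-level predictions."""

    def __init__(self, window=5, min_confidence=0.6):
        self.history = deque(maxlen=window)   # oldest entries drop out automatically
        self.min_confidence = min_confidence  # hypothetical threshold

    def update(self, label, confidence):
        """Record one prediction and return the smoothed label, or None.

        Low-confidence predictions are ignored. A label is emitted only when
        it holds a strict majority of the full window size, so nothing is
        returned while the window is still filling up or the votes are split.
        """
        if confidence >= self.min_confidence:
            self.history.append(label)
        if not self.history:
            return None
        winner, votes = Counter(self.history).most_common(1)[0]
        return winner if votes > self.history.maxlen // 2 else None


# Example: a noisy stream of (label, confidence) pairs.
smoother = PredictionSmoother(window=5, min_confidence=0.6)
stream = [("hello", 0.9), ("hello", 0.8), ("thanks", 0.3),
          ("hello", 0.95), ("thanks", 0.7)]
outputs = [smoother.update(label, conf) for label, conf in stream]
```

Returning `None` until a label wins the vote means the text-to-speech stage can simply skip frames with no stable prediction, at the cost of a short delay before each new sign is spoken.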

Project structure:

    ISL-Translator/
    │
    ├── modules/                  # Key reusable modules
    │   ├── keypoint_extractor.py
    │   ├── keypoint_encoder.py
    │   ├── model.py
    │   └── text_decoder.py
    │
    ├── datasets/
    │   └── keypoint_loader.py
    │
    ├── models/
    │   ├── Training.py
    │   ├── loss.py
    │   └── training_validation.py
    │
    ├── inference/
    │   └── realtime_pipeline.py
    │
    ├── data/                     # Keypoint .npy files and dataset structure
    ├── checkpoints/
    ├── README.md
    └── requirements.txt

- Integrate NeRF/Gaussian Splatting for 3D hand mesh

- Add language modeling (BERT/LLM) for better output fluency

- Deploy on Jetson Nano / Raspberry Pi

- Build web app with Streamlit/FastAPI

- Support bi-directional translation (text → ISL)

Contributions, feedback, and ideas are welcome!

Please raise issues or open PRs.

MIT License © 2025 Avinash Kashyap

"Empowering communication, one sign at a time." ✨