Greetings and welcome to my GitHub profile! I am Anushka Satav, a dedicated robotics enthusiast currently pursuing a Master's in Robotics and Autonomous Systems (Artificial Intelligence) at Arizona State University, Tempe, USA.

My academic and professional journey is driven by a passion for research, design, prototyping, and developing innovative robotic systems. I have a keen interest in the following areas:

- Artificial Intelligence

- Autonomous Robots

- Aerial Robots

- Underwater Robots

- Control Systems

- ROS2-based software development

- Computer Vision

| Programming Languages | Robotics & Automation | CAD / FEA Software | AI & Vision |
|---|---|---|---|
| Python, C, C++ | ROS/ROS2, Gazebo, MATLAB | Fusion 360, MSC Apex, Abaqus, Ansys | OpenCV, YOLOv7/8, ML, DL |

📚 Education

Master's in Robotics and Autonomous Systems (Artificial Intelligence)

📅 August 2024 - Present

- NAMU Scholarship & General Graduate Fellowship Holder (Fall 2024)

- Semester-I: CSE-571 Artificial Intelligence, RAS-545 Robotic Systems, EGR-501 Linear Algebra

- Semester-II: RAS-546 Robotic Systems II, RAS-598 Experimentation and Deployment of Robots, RAS-598 Space Robotics and AI

Bachelor of Technology in Robotics and Automation

📅 August 2019 - August 2023

- Silver Medallist, Second Rank Holder

- Merit Scholarship Holder (2019-2022)

- Completed Biomechanics Course (National Student Exchange Program at SRM-IST, Kattankulathur)

💼 Experience

RoboCHAMPS, Pune, India

📅 November 2023 - January 2024

- Conducted online hands-on robotics sessions for children aged 7 to 15.

- Taught programming and robotics using Arduino, ESP32, micro:bit, and Scratch.

Void Robotics, Florida, USA

📅 May 2023 - November 2023

- Worked on Arduino libraries, the Nav2 navigation stack, and ROS2 development.

- Gained expertise in Linux, Git, and GitHub operations.

Hexagon Manufacturing Intelligence Pvt. Ltd, Pune, India

📅 February 2023 - September 2023

- Conducted static, linear, non-linear, and dynamic simulations of 10 different models, including custom non-linear material models, using MSC Apex, Nastran, and Dytran.

- Built various custom tools in MSC Apex with Python to automate model building for FEA.

- Created a tool that automates model creation, constraints, boundary conditions, and post-processing for non-linear Top Load Analysis in MSC Apex using Nastran, reducing model creation time from 20 minutes to under 2 minutes.

Techno-Societal 2022: 4th International Conference on Advanced Technologies for Societal Applications

International Journal on Interactive Design and Manufacturing (Q2 Journal)

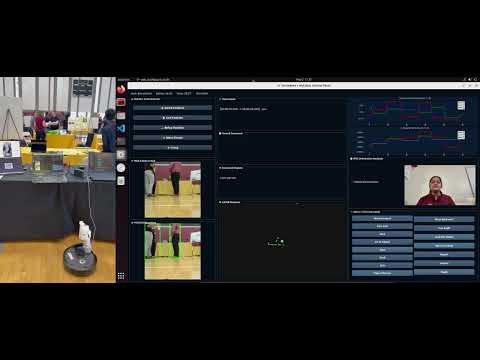

Our project, Intelligent Voice-Controlled Mobile Manipulator, transforms the TurtleBot4 mobile base and the MyCobot robotic arm into a unified, intelligent, multi-modal robotic system. Built entirely on ROS 2, the system fuses real-time perception (YOLOv8 object detection), voice interaction (Whisper speech-to-text), LiDAR-based obstacle mapping, and IMU-driven stability monitoring into a modular architecture. A custom PyQt5 GUI offers live visualization of all sensor inputs and inference feedback.

This 1-minute elevator pitch highlights our final project for the course RAS 598: Experimentation and Deployment of Robotic Systems at Arizona State University (Spring 2025).

Find out more on my project website.
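The stability-monitoring logic is not described in detail above. The sketch below is a minimal illustration. It assumes the monitor works from a static accelerometer reading, such as the `linear_acceleration` field of a ROS 2 `sensor_msgs/msg/Imu` message. Roll and pitch are estimated from the gravity vector and then compared against a limit. The function names and the 15° threshold are hypothetical and are not taken from the project.

```python
import math

# Hypothetical tilt limit; the project's actual threshold is not documented.
TILT_LIMIT_DEG = 15.0


def tilt_from_accel(ax: float, ay: float, az: float) -> tuple[float, float]:
    """Estimate (roll, pitch) in degrees from an accelerometer reading in m/s^2.

    Assumes the robot is roughly at rest, so the measured acceleration is gravity.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return math.degrees(roll), math.degrees(pitch)


def is_stable(ax: float, ay: float, az: float, limit_deg: float = TILT_LIMIT_DEG) -> bool:
    """Return True while both roll and pitch stay within the tilt limit."""
    roll, pitch = tilt_from_accel(ax, ay, az)
    return abs(roll) <= limit_deg and abs(pitch) <= limit_deg


if __name__ == "__main__":
    print(is_stable(0.0, 0.0, 9.81))  # level base -> True
    print(is_stable(0.0, 4.9, 8.5))   # about 30 degrees of roll -> False
```

This estimate assumes gravity is the only acceleration acting on the sensor, so it becomes noisy while the base is moving. Fusing gyroscope data, for example with a complementary filter, is a common refinement.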

Explore projects that target real-world aquatic challenges through robotics. These boats use intelligent onboard sensing and navigation for autonomous surface missions, including environmental cleanup.

- This project showcases the Heron Unmanned Surface Vessel (USV) system, developed for the ICRA 2025 Robots in the Wild Workshop.

- Combining ROS2, Gazebo simulation, and real-time YOLOv8 vision, this project demonstrates a modular framework for opportunistic trash collection during scientific surveys, prioritizing environmental data integrity.

- A lightweight color-based detection proxy was used in simulation (ROS Melodic) to validate perception-driven navigation logic, while real-world field tests on the R/V Karin Valentine confirmed YOLOv8’s ability to detect trash under natural lighting and water conditions.
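The exact color proxy used in simulation is not published here. The plain-Python sketch below shows the general idea under stated assumptions: threshold pixels in HSV space, take the centroid of the matching pixels, and turn its horizontal position into a steering cue. The red hue band, the saturation and value limits, the list-of-RGB-tuples image format, and all function names are hypothetical choices made for illustration.

```python
from __future__ import annotations

import colorsys

Pixel = tuple[int, int, int]  # (r, g, b), each 0-255


def is_red(pixel: Pixel, min_sat: float = 0.5, min_val: float = 0.3) -> bool:
    """Hypothetical red test in HSV space; red hue wraps around 0, so check both ends."""
    r, g, b = (c / 255.0 for c in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)  # h, s, v are all in [0, 1]
    return (h <= 0.05 or h >= 0.95) and s >= min_sat and v >= min_val


def detect_centroid(image: list[list[Pixel]]) -> tuple[float, float] | None:
    """Return the (row, col) centroid of red pixels, or None if none are found."""
    hits = [(r, c) for r, row in enumerate(image) for c, px in enumerate(row) if is_red(px)]
    if not hits:
        return None
    n = len(hits)
    return sum(r for r, _ in hits) / n, sum(c for _, c in hits) / n


def steering_offset(image: list[list[Pixel]]) -> float | None:
    """Horizontal target offset in [-1, 1]; negative means left of image center."""
    centroid = detect_centroid(image)
    if centroid is None:
        return None
    width = len(image[0])
    if width <= 1:
        return 0.0
    half = (width - 1) / 2
    return (centroid[1] - half) / half
```

Thresholding in HSV rather than RGB keeps the color test less sensitive to brightness changes. That matters for the lighting and reflection conditions mentioned above, although real water scenes would still need tuned limits.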

| Repository | Overview |
|---|---|
| Heron USV Project | - Developed for the IEEE ICRA 2025 Robots in the Wild Workshop<br>- Integrated ROS-Gazebo simulation with a Heron USV digital twin<br>- Validated detour logic and waypoint recovery using color-proxy detection<br>- Real-world detection tests on the R/V Karin Valentine confirmed trash detection under natural conditions; full control stack integration is pending |
| ICRA Poster and Presentation | 🌟 Highlight from the IEEE International Conference on Robotics and Automation (ICRA) 2025<br>Our paper “Towards Robotic Trash Removal with Autonomous Surface Vessels” was accepted and presented at the Robots in the Wild Workshop, ICRA 2025, at the Georgia World Congress Center, Atlanta, Georgia.<br>- ICRA is the flagship conference of the IEEE Robotics and Automation Society, showcasing the latest advances in robotics and automation.<br>- The project introduces a scalable, real-time detection and navigation framework that prioritizes scientific data integrity while enabling dynamic trash interception.<br>- Achieved 100% waypoint recovery, 108.2% area coverage, and a 54% simulated trash collection rate using color-based proxies. |
| Field Testing of Detection Module | - Preliminary field tests with a live onboard RGB camera confirmed the YOLOv8n model’s detection reliability under real-world lighting and water-reflection conditions.<br>- The control stack and full integration of perception, planning, and hardware-level autonomy (ROS2 + PX4) are planned for future work.<br>- Future deployments will include environmental sensors and adaptive navigation in challenging aquatic conditions. |

✨ This project embodies a balanced approach to robotics, prioritizing both environmental monitoring and responsible automation.
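The Heron USV work validated "detour logic and waypoint recovery," but the planner itself is not shown here. The sketch below is a minimal, hypothetical version of that pattern:

- The vessel follows survey waypoints.
- When trash is reported, it switches to a single detour goal.
- After the detour, it resumes at the same pending waypoint.

The class and method names are invented for illustration. The `recovery_rate` helper does not reproduce the reported metrics; it only shows one way such a figure could be computed.

```python
from __future__ import annotations

from dataclasses import dataclass, field

Point = tuple[float, float]  # (x, y) in a local map frame


@dataclass
class SurveyPlanner:
    """Hypothetical planner: follow survey waypoints, detour to trash, then resume."""

    waypoints: list[Point]
    next_index: int = 0
    detour_target: Point | None = None
    visited: list[Point] = field(default_factory=list)

    def report_trash(self, position: Point) -> None:
        # Accept one detour at a time. The survey index is left untouched,
        # so the mission can recover exactly where it left off.
        if self.detour_target is None:
            self.detour_target = position

    def current_goal(self) -> Point | None:
        if self.detour_target is not None:
            return self.detour_target
        if self.next_index < len(self.waypoints):
            return self.waypoints[self.next_index]
        return None  # survey complete

    def goal_reached(self) -> None:
        goal = self.current_goal()
        if goal is None:
            return
        self.visited.append(goal)
        if self.detour_target is not None:
            self.detour_target = None  # detour finished; resume the survey
        else:
            self.next_index += 1

    def recovery_rate(self) -> float:
        """Fraction of survey waypoints actually reached despite detours."""
        if not self.waypoints:
            return 1.0
        reached = sum(1 for wp in self.waypoints if wp in self.visited)
        return reached / len(self.waypoints)
```

Keeping the detour separate from the waypoint index is the design choice that makes recovery trivial. Interrupting the survey never advances or loses its position.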

| Project | Overview |
|---|---|
| Towards Robotic Trash Removal with Autonomous Surface Vessels | PX4-Based Robotic Boat for Lake Cleanup<br>- Built an autonomous boat platform using PX4 for real-time control<br>- Integrated object detection with mission-level autonomy<br>- Designed to detect and collect floating trash using vision and path planning |

- This series of mini projects showcases the advanced capabilities of the Parrot Mambo Minidrone as a research and learning platform for autonomous aerial robotics.

- Through the integration of MATLAB and Simulink, each project in this series explores key aspects of drone control—including real-time image processing, color-based object detection, autonomous navigation, and precision landing.

- From manual keyboard control to fully vision-driven flight, these projects demonstrate how a compact, classroom-grade drone can be transformed into an intelligent autonomous system suitable for real-world prototyping and experimental robotics research.

| Repository | Overview |
|---|---|
| Mini Project 1: Color-Based Object Detection | - Detected red, green, blue, and yellow blocks using the drone’s onboard camera<br>- Implemented color thresholding in Simulink using MATLAB Apps<br>- Built a modular image processing pipeline using the MATLAB vision toolbox<br>- First step toward integrating autonomous perception with drone navigation |
| Mini Project 2: Keyboard Navigation & Color-Based Landing | - Enabled manual navigation using keyboard inputs (‘w’, ‘a’, ‘s’, ‘d’)<br>- Triggered autonomous landing upon color detection<br>- Integrated MATLAB image processing logic into the Simulink-based flight controller<br>- Refined the descent strategy to ensure smooth landings |
| Mini Project 3: Autonomous Line Following and Precision Landing | - Fully autonomous: the drone takes off, tracks an R/G/B line, and lands based on symmetry detection<br>- Used MATLAB Simulink and Stateflow for visual servoing, decision-making, and trajectory generation<br>- Centroid-based logic and binary mask analysis guided navigation in real time<br>- Validated with smooth takeoff-to-landing transitions in simulation and real flights |
| Final Project: Vision-Guided Precision Landing on a Moving AGV | - Advanced extension of Mini Project 3 for dynamic UAV-UGV coordination<br>- Implements precision landing on a moving line-follower robot using real-time HSV-based image processing<br>- Robust to indoor lighting variations with tuned multi-condition color masks<br>- Mimics multi-agent cooperation scenarios for warehouse and logistics automation<br>- Fully completed and tested; check out the repo for code, models, and demo! |
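These projects implement alignment in MATLAB, Simulink, and Stateflow. The Python sketch below is only an illustration of one simple form of centroid-based alignment:

1. Normalize the target centroid's pixel offset from the image center.
2. Apply a proportional gain to get a correction command.
3. Allow descent only after the target has stayed centered for several consecutive frames.

The gain, tolerance, frame count, and class name are assumptions. The commands are expressed in image axes; mapping them to the drone's body axes depends on how the downward camera is mounted.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LandingController:
    """Hypothetical proportional aligner for landing on a visually tracked target."""

    image_width: int
    image_height: int
    gain: float = 0.5        # command per unit of normalized error (assumed)
    tolerance: float = 0.05  # normalized error treated as "centered" (assumed)
    frames_required: int = 5  # consecutive centered frames before descending (assumed)
    _centered_frames: int = field(default=0, init=False, repr=False)

    def update(self, cx: float, cy: float) -> tuple[float, float, bool]:
        """Map a target centroid (pixels) to image-axis commands and a land flag."""
        half_w, half_h = self.image_width / 2, self.image_height / 2
        # Normalized error in [-1, 1] relative to the image center.
        ex = (cx - half_w) / half_w
        ey = (cy - half_h) / half_h
        if abs(ex) <= self.tolerance and abs(ey) <= self.tolerance:
            self._centered_frames += 1
        else:
            self._centered_frames = 0  # any miss resets the streak
        land = self._centered_frames >= self.frames_required
        return self.gain * ex, self.gain * ey, land
```

A purely proportional term lags behind a moving target such as the AGV. Feeding forward an estimate of the AGV's velocity is one common way to reduce that lag.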

The Fetch Robot Series is designed to help beginners build a strong foundation in simulating and controlling the Fetch Mobile Manipulator using ROS Melodic, Gazebo, and MoveIt. Whether you're just getting started with simulation environments or working toward solving manipulation tasks, this series walks you through the full journey, from setting up the simulation environment to executing motion planning tasks with MoveIt.

These repositories provide practical guidance and solved exercises to familiarize users with robot simulation, kinematics, and motion execution—critical skills for research and development in service robotics, warehouse automation, and human-robot interaction.

| Repository | Overview |
|---|---|
| Getting Started with Fetch Simulation in ROS Melodic (ROS-Melodic-Fetch-Robot) | - Step-by-step setup guide for running the Fetch robot simulation in ROS Melodic on Ubuntu 18.04<br>- Launch files for the Gazebo world, Fetch robot URDF, and RViz<br>- Instructions for setting up fetch_gazebo, fetch_moveit_config, and move_group_interface<br>- Helps users build a reproducible and stable simulation workspace |
| Motion Planning and Control Tasks with Fetch Robot (fetch-ros-exercise) | - Series of solved robotics tasks using MoveIt and ROS Melodic<br>- Includes joint state publishing, gripper control, and planning to PoseGoal/JointGoal<br>- Demonstrates how to visualize and execute robot plans in RViz<br>- A practical extension for those who have completed the setup guide |

The TurtleBot4 ROS 2 Series explores autonomous navigation, voice-commanded control, and real-time object detection using TurtleBot4 and ROS 2 Humble. Whether you're working in simulation or on real hardware, these projects offer step-by-step demonstrations of core robotics concepts—from basic launch setups to full autonomy using perception and path planning.

This series is perfect for robotics enthusiasts, university students, or developers diving into ROS 2 and mobile robot systems.

| Repository | Overview |
|---|---|
| TurtleBot4-ROS2-Series | Voice-Guided Navigation and Object Detection with YOLOv8 in ROS 2<br>- Launches TurtleBot4 in Gazebo and RViz with integrated TF, laser scan, and navigation stack<br>- Integrates YOLOv8 for real-time object detection<br>- Accepts voice commands to guide the robot across mapped environments<br>- Combines Nav2, cmd_vel, and speech recognition pipelines for autonomy |
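The series turns spoken commands into navigation goals. How the repository parses transcripts is not described here. The sketch below shows one simple, hypothetical approach: keyword matching against a table of named locations. The location names, coordinates, and verb list are invented for illustration. In a ROS 2 system, the resulting (x, y) could be wrapped in a `geometry_msgs/PoseStamped` and sent to Nav2's `NavigateToPose` action.

```python
from __future__ import annotations

import re

# Hypothetical named locations in the map frame (x, y in meters); not from the project.
LOCATIONS: dict[str, tuple[float, float]] = {
    "kitchen": (2.0, 1.5),
    "lab": (-1.0, 3.0),
    "dock": (0.0, 0.0),
}

# Hypothetical phrases treated as navigation requests.
MOVE_VERBS = ("go to", "navigate to", "move to", "drive to")


def parse_command(transcript: str) -> tuple[str, tuple[float, float]] | None:
    """Map a speech-to-text transcript to a (name, (x, y)) goal, or None if not understood."""
    # Lowercase, replace punctuation with spaces, and collapse repeated whitespace.
    text = re.sub(r"[^a-z0-9 ]", " ", transcript.lower())
    text = " ".join(text.split())
    if not any(verb in text for verb in MOVE_VERBS):
        return None
    for name, xy in LOCATIONS.items():
        # Whole-word match, so "lab" does not match "laboratory".
        if re.search(rf"\b{re.escape(name)}\b", text):
            return name, xy
    return None
```

Returning `None` for anything unrecognized keeps a misheard transcript from sending the robot somewhere unintended.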

This series features projects centered around intelligent robotic arms—from academic research to AI-enhanced task execution. These systems explore advanced perception, planning, and manipulation in both industrial and experimental settings.

| Project | Overview |
|---|---|
| Maze Solving with MyCobot Pro 600 (M.S. RAS-545) | Solving a 4x4 Maze with AI Camera and Digital Twin<br>- Used a MyCobot Pro 600 and AI Camera Kit to solve maze paths<br>- Built a digital twin in MATLAB to simulate trajectory planning<br>- Integrated vision-based decision-making with robotic arm actuation |
| Robotic Arm for Waste Sorting (Final Year B.Tech) | 4-DOF Arduino-Based Waste Segregation Robot<br>- Designed and built a robotic arm that sorts waste by material type<br>- Used YOLOv7 to classify glass, paper, plastic, and metal items<br>- Focused on sustainable automation and smart recycling |
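The maze project does not state which path-finding algorithm it used. Breadth-first search is shown below as a swapped-in, plausible choice for a small grid such as the 4x4 maze, because it returns a shortest path in number of cells. The grid encoding ('#' for walls, '.' for free cells) and the function name are assumptions. In a setup like this, each cell center would still need to be mapped to arm workspace coordinates through a camera-to-robot calibration.

```python
from __future__ import annotations

from collections import deque

Cell = tuple[int, int]  # (row, col)


def solve_maze(grid: list[str], start: Cell, goal: Cell) -> list[Cell] | None:
    """Breadth-first search over a grid where '#' is a wall and '.' is free.

    Returns the shortest list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    came_from: dict[Cell, Cell | None] = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            node: Cell | None = cell
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):  # 4-connected moves
            nr, nc = r + dr, c + dc
            nxt = (nr, nc)
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#" and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None
```

For example, on the grid `["..#.", "#.#.", "....", ".##."]` the route from the top-left cell to the top-right cell detours down through the third row. Only one shortest route exists in that grid.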

Explore a curated selection of individual robotics projects that highlight simulation, perception, and control using ROS, MATLAB, and Python. These projects serve as compact demonstrations of specific concepts—perfect for quick learning or as building blocks for larger applications.

| Project | Overview |
|---|---|
| Digital Twins for Robot Manipulators (MATLAB) | Simulink-Based Digital Twin Modeling of a 2-DOF Robotic Arm<br>- Built a real-time digital twin using MATLAB and Simulink<br>- Implemented kinematic modeling and parameter synchronization between the physical and virtual twins<br>- Includes comparison plots and simulation validation |
| Mini Project – ROS2 Turtlesim: Catch Them All | Multi-Agent Turtlesim Task Using ROS2 and Python<br>- Implemented a search-and-capture game using multiple turtlesim nodes in ROS2<br>- Uses rclpy for control and for publishing targets<br>- Reinforces topics such as node creation, publisher/subscriber logic, and coordinate tracking |
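The Simulink model behind the 2-DOF digital twin is not reproduced here. As a reference point, the sketch below gives the standard forward kinematics of a planar arm with two revolute joints; treating the arm as planar with two revolute joints is an assumption. The end-effector position is x = l1·cos θ1 + l2·cos(θ1 + θ2) and y = l1·sin θ1 + l2·sin(θ1 + θ2). The `sync_error` helper is a hypothetical example of comparing a measured physical position with the twin's prediction.

```python
import math


def forward_kinematics(theta1: float, theta2: float, l1: float, l2: float) -> tuple[float, float]:
    """End-effector (x, y) of a planar 2-link arm; angles in radians, lengths in meters."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def sync_error(
    measured: tuple[float, float], theta1: float, theta2: float, l1: float, l2: float
) -> float:
    """Hypothetical twin check: distance between a measured position and the FK prediction."""
    px, py = forward_kinematics(theta1, theta2, l1, l2)
    return math.hypot(measured[0] - px, measured[1] - py)
```

A growing `sync_error` at matching joint angles would suggest that the twin's link lengths or joint offsets have drifted from the physical arm. That is the kind of mismatch parameter synchronization aims to correct.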

- Udemy:
  - ROS2 for Beginners (Foxy, Humble - 2024)
  - ROS2 for Beginners Level 2 (TF | URDF | RViz | Gazebo)
- NPTEL:
  - Introduction to Robotics
  - Introduction to Internet of Things
- Coursera:
  - Python for Everybody
  - Python Data Structures (University of Michigan)

“Success is not the key to happiness. Happiness is the key to success. If you love what you are doing, you will be successful.”

– Albert Schweitzer

Interests: 🎨 Sketching 🎮 Gaming 📸 Photography

If interested, check out my page at -