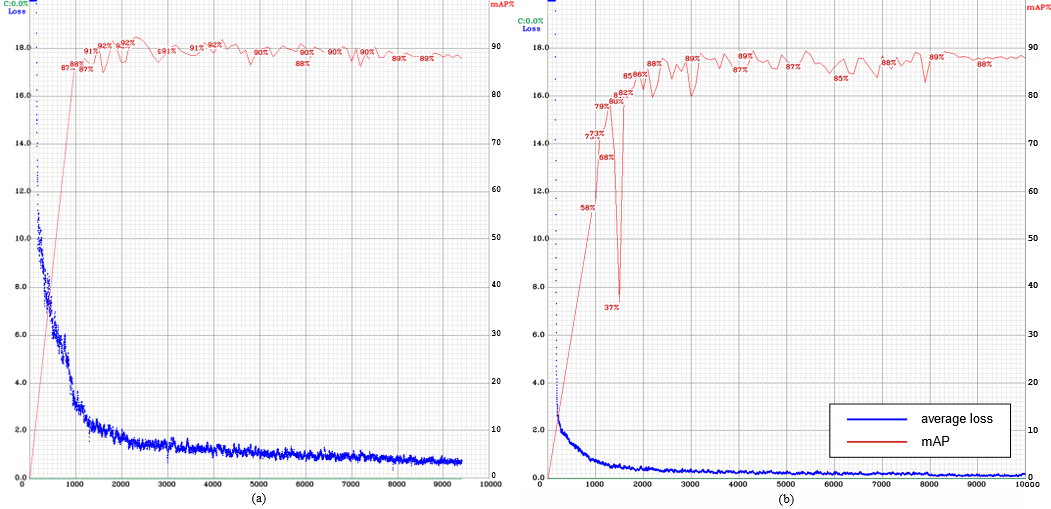

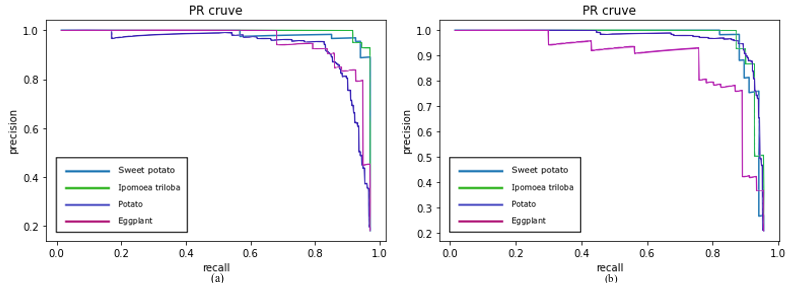

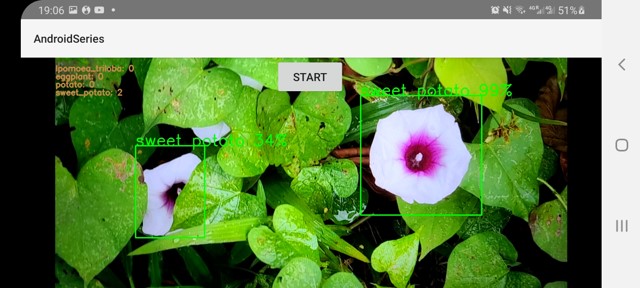

This work presents a mobile machine learning application for detecting the flowers of bioenergy crops. A custom dataset of 495 manually labelled images is constructed for training and testing, and the state-of-the-art object detection models YOLOv4 and YOLOv4-tiny are selected as the flower detection models. Other milestone object detection models, namely YOLOv3, YOLOv3-tiny, SSD and Faster R-CNN, serve as benchmarks for performance comparison. The comparative experiments show that the retrained YOLOv4 model achieves a high mean average precision (mAP = 91%) but a lower inference speed (frames per second, FPS) on a mobile device, while the retrained YOLOv4-tiny model has a lower mAP of 87% but reaches a higher speed of 9 FPS on a mobile device. Two mobile applications are then developed: one deploys the YOLOv4-tiny model directly in a mobile app, and the other deploys YOLOv4 behind a web API. The testing experiments show that both applications achieve real-time, accurate detection while also reducing the computational burden on mobile devices.
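
mAP is the headline accuracy metric above. As a rough illustration of what it measures, the sketch below computes a single-class average precision at an IoU threshold of 0.5 using VOC-style all-point interpolation. It is not the evaluation code behind the reported 91% and 87% figures; the `(xmin, ymin, xmax, ymax)` box format and the input structures are assumptions made for the example.

```python
from typing import Dict, List, Tuple

# Assumed box format for this sketch: (xmin, ymin, xmax, ymax) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(
    detections: List[Tuple[str, float, Box]],
    ground_truth: Dict[str, List[Box]],
    iou_threshold: float = 0.5,
) -> float:
    """All-point interpolated AP for one class.

    detections: (image_id, confidence, box) triples produced by a detector.
    ground_truth: image_id -> list of labelled boxes for that image.
    """
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    tp_flags: List[int] = []
    # Visit detections from most to least confident; each ground-truth box
    # may be matched once, and later duplicates count as false positives.
    for img, _conf, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_idx = 0.0, -1
        for idx, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx >= 0 and best_iou >= iou_threshold and not used[img][best_idx]:
            used[img][best_idx] = True
            tp_flags.append(1)
        else:
            tp_flags.append(0)

    # Cumulative recall and precision after each ranked detection.
    recalls, precisions = [], []
    cum_tp = 0
    for rank, is_tp in enumerate(tp_flags, start=1):
        cum_tp += is_tp
        recalls.append(cum_tp / n_gt)
        precisions.append(cum_tp / rank)

    # Make precision non-increasing in recall, then sum over recall steps.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))
```

If the dataset has a single "flower" class, mAP equals this AP; with several classes, mAP is the mean of the per-class values. The tool that produced the reported numbers may use a different interpolation scheme.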

The YOLO-series models are trained on Google Colab. The Colab notebook can be found in ./Training/YOLO_training_and_testing.ipynb
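
Training with AlexeyAB's Darknet is typically launched with `darknet detector train <data> <cfg> <pretrained weights>`. The helper below only assembles that argument list so it can be printed or passed to `subprocess.run` from a notebook cell; the file paths in the usage line (`data/obj.data`, `cfg/yolov4-obj.cfg`, `yolov4.conv.137`) are illustrative assumptions, not necessarily the ones used in the notebook.

```python
import shlex
from typing import List


def darknet_train_command(
    data_file: str,
    cfg_file: str,
    pretrained_weights: str,
    darknet_bin: str = "./darknet",
    compute_map: bool = True,
) -> List[str]:
    """Build the argument list for `darknet detector train` (sketch)."""
    cmd = [darknet_bin, "detector", "train", data_file, cfg_file, pretrained_weights]
    # -dont_show disables the loss-chart window; Colab has no display for it.
    cmd.append("-dont_show")
    if compute_map:
        # -map makes Darknet periodically evaluate mAP on the `valid` list in obj.data.
        cmd.append("-map")
    return cmd


if __name__ == "__main__":
    # Hypothetical paths, for illustration only.
    print(shlex.join(darknet_train_command("data/obj.data", "cfg/yolov4-obj.cfg", "yolov4.conv.137")))
```

Building a list rather than a single string avoids shell-quoting issues when paths contain spaces.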

Before training, create your own obj.data and obj.name files; the ones used in this project are in ./Models/YOLO_series/config_files/. The other required files, including the .cfg and .weights files, can be obtained from https://github.com/AlexeyAB/darknet
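
As a sketch of what these two files contain: the names file lists one class name per line, and obj.data is a short `key = value` file that Darknet reads for the class count, the training and validation image lists, the names file, and the directory for saved weights. The class name `flower` and all paths below are hypothetical; check them against the files in ./Models/YOLO_series/config_files/.

```python
from pathlib import Path
from typing import List


def render_names(class_names: List[str]) -> str:
    """Contents of the names file: one class name per line."""
    return "\n".join(class_names) + "\n"


def render_obj_data(
    num_classes: int,
    train_list: str,
    valid_list: str,
    names_path: str,
    backup_dir: str = "backup/",
) -> str:
    """Contents of obj.data in Darknet's `key = value` format."""
    lines = [
        f"classes = {num_classes}",
        f"train = {train_list}",    # text file listing training image paths
        f"valid = {valid_list}",    # text file listing validation image paths
        f"names = {names_path}",    # the class-names file
        f"backup = {backup_dir}",   # where Darknet writes intermediate weights
    ]
    return "\n".join(lines) + "\n"


def write_config(out_dir: Path, class_names: List[str], train_list: str, valid_list: str) -> Path:
    """Write obj.name and obj.data into out_dir and return the obj.data path."""
    out_dir.mkdir(parents=True, exist_ok=True)
    names_path = out_dir / "obj.name"
    names_path.write_text(render_names(class_names))
    data_path = out_dir / "obj.data"
    data_path.write_text(render_obj_data(len(class_names), train_list, valid_list, str(names_path)))
    return data_path
```

Generating both files from one class list keeps `classes` consistent with the number of names, which Darknet requires; the `classes` value in the .cfg file must match as well.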

The flower detection mobile app should work on Android 10 and above. The retrained YOLOv4-tiny model is deployed directly in the app, so inference runs on the mobile device itself. It achieves real-time detection with an average inference time of 110 milliseconds per frame (about 9 FPS). The source code of the mobile app is in the Mobile App folder; it should be built in the Android Studio IDE with the OpenCV SDK installed.
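
Per-frame latency and FPS are reciprocal views of the same measurement: 1000 ms divided by 110 ms per frame gives roughly 9 frames per second, matching the YOLOv4-tiny figure above. The Python sketch below shows that arithmetic together with a simple timing loop; it is only an illustration, not code from the Android app, and the `infer` callable stands in for whatever hypothetical inference call is being measured.

```python
import time
from statistics import mean
from typing import Callable, Iterable, List, Sequence


def time_inference(infer: Callable[[object], object], frames: Iterable[object]) -> List[float]:
    """Run `infer` on each frame and return per-frame wall-clock times in ms."""
    timings = []
    for frame in frames:
        start = time.perf_counter()
        infer(frame)
        timings.append((time.perf_counter() - start) * 1000.0)
    return timings


def summarize_latency(latencies_ms: Sequence[float]) -> dict:
    """Average latency in ms and the equivalent frames per second."""
    if not latencies_ms:
        raise ValueError("need at least one timing")
    avg_ms = mean(latencies_ms)
    return {"avg_ms": avg_ms, "fps": 1000.0 / avg_ms}
```

For example, `summarize_latency([100.0, 120.0])` averages to 110 ms, or about 9.09 FPS. Averaging the latencies first and then inverting is not the same as averaging per-frame FPS values, which would overweight fast frames.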

The flower detection web API is built on the Flask framework and a YOLOv4 dynamic-link library (DLL).

The overall architecture of the API is as follows:

It can be divided into two parts: a frontend and a backend.
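
The frontend/backend split can be pictured as an upload-and-respond exchange: the browser posts an image and the Flask backend returns detections as JSON. The minimal sketch below assumes an endpoint named `/detect`, a form field named `image`, and a placeholder `detect_flowers` function standing in for the YOLOv4 DLL call; none of these names are taken from ./Web API/flask_app.py.

```python
from typing import Dict, List

from flask import Flask, jsonify, request

app = Flask(__name__)


def detect_flowers(image_bytes: bytes) -> List[Dict]:
    """Hypothetical placeholder for running YOLOv4 through the compiled DLL.

    A real backend would decode the image and call into Darknet here;
    this sketch returns an empty list so the request flow can be exercised.
    """
    return []


@app.route("/detect", methods=["POST"])
def detect():
    # The frontend is assumed to send the image as multipart form data.
    upload = request.files.get("image")
    if upload is None:
        return jsonify({"error": "no file field named 'image'"}), 400
    detections = detect_flowers(upload.read())
    return jsonify({"detections": detections})


if __name__ == "__main__":
    app.run(host="0.0.0.0", port=5000)
```

Keeping the model call behind one function lets the HTTP layer stay unchanged whether detection runs through the DLL or another backend.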

The frontend is built with HTML, CSS and JavaScript. Its source code is in ./Web API/frontend

The backend consists of a Flask server and the YOLO DLL, which can be compiled from the Darknet framework. The code of the Flask server is in ./Web API/flask_app.py
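
Darknet represents each box by its center point plus width and height, while a browser frontend usually draws rectangles from corner coordinates. The sketch below shows one way the backend could convert raw detections before returning them as JSON. It assumes detections arrive as `(label, confidence, (x_center, y_center, width, height))` tuples with confidence in [0, 1]; that tuple shape and the 0.25 default threshold are assumptions for illustration, not the project's actual interface.

```python
from typing import Dict, Iterable, List, Tuple

# Assumed raw box format: (x_center, y_center, width, height) in pixels.
CenterBox = Tuple[float, float, float, float]


def to_corners(box: CenterBox) -> Tuple[int, int, int, int]:
    """Convert a center-format box to integer (xmin, ymin, xmax, ymax)."""
    x, y, w, h = box
    return (
        int(round(x - w / 2)),
        int(round(y - h / 2)),
        int(round(x + w / 2)),
        int(round(y + h / 2)),
    )


def format_detections(
    raw: Iterable[Tuple[str, float, CenterBox]],
    conf_threshold: float = 0.25,
) -> List[Dict]:
    """Drop low-confidence detections and reshape the rest into JSON-ready dicts."""
    results = []
    for label, confidence, box in raw:
        if confidence < conf_threshold:
            continue
        xmin, ymin, xmax, ymax = to_corners(box)
        results.append({
            "label": label,
            "confidence": round(confidence, 3),
            "box": [xmin, ymin, xmax, ymax],
        })
    return results
```

For example, a detection centered at (50, 50) with width 20 and height 10 becomes the corner box [40, 45, 60, 55].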