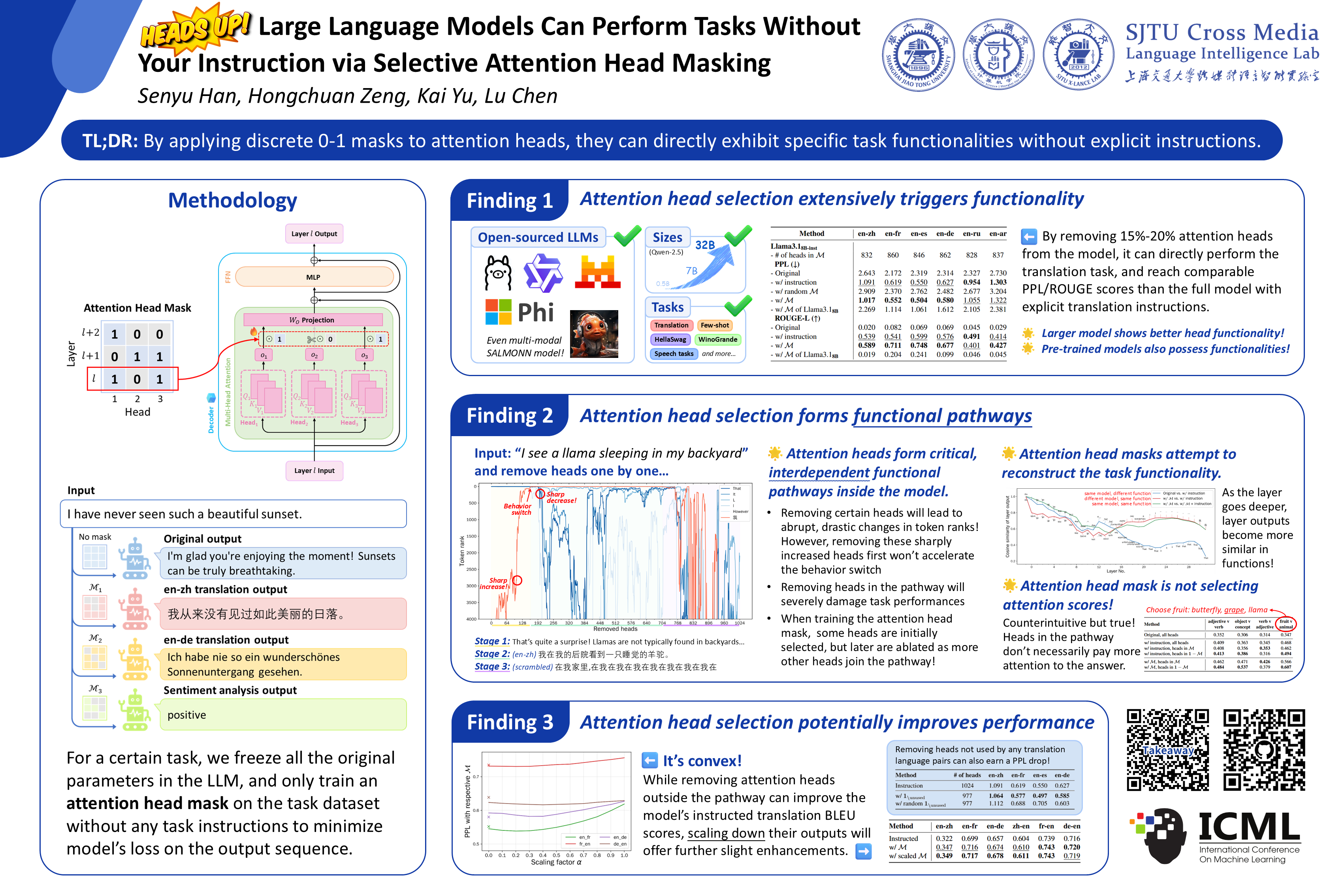

Heads up! Large Language Models Can Perform Tasks Without Your Instruction via Selective Attention Head Masking

This repository provides code for training attention head masks and for plotting some of the figures presented in our paper Heads up! Large Language Models Can Perform Tasks Without Your Instruction via Selective Attention Head Masking (ICML'25).
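For readers new to the idea, the snippet below is a minimal sketch of what applying an attention head mask can mean: each head's output is scaled by one mask value before the heads are merged. The function name `apply_head_mask`, the list-of-vectors layout, and the 0/1 mask values are illustrative assumptions, not the implementation in this repository.

```python
from typing import List

Vector = List[float]


def apply_head_mask(head_outputs: List[Vector], mask: List[float]) -> Vector:
    """Scale each head's output by its mask value, then concatenate.

    head_outputs: one output vector per attention head (hypothetical layout).
    mask: one value per head; 1.0 keeps the head, 0.0 silences it.
    """
    if len(head_outputs) != len(mask):
        raise ValueError("need exactly one mask value per head")
    merged: Vector = []
    for out, m in zip(head_outputs, mask):
        # A masked head contributes zeros to the merged representation.
        merged.extend(m * x for x in out)
    return merged


# Toy example: three heads with two-dimensional outputs, middle head masked.
heads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
masked = apply_head_mask(heads, [1.0, 0.0, 1.0])
```

In this toy setting the second head's slice of `masked` is zero while the other two pass through unchanged.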

    conda create -n headsup python=3.10 -y
    conda activate headsup
    pip install -r requirements.txt

We use FlashAttention for efficient training. Install it if you need it, or disable FlashAttention in train_mask.py.
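If you are unsure whether FlashAttention is installed, a small helper like the one below can choose a fallback. This is a sketch under assumptions: the helper name `pick_attn_implementation` is hypothetical, and it assumes the model is loaded through Hugging Face Transformers, whose `from_pretrained` accepts an `attn_implementation` argument (`"flash_attention_2"`, `"sdpa"`, or `"eager"`). How train_mask.py actually configures attention may differ, so check the script before wiring this in.

```python
import importlib.util


def pick_attn_implementation(prefer_flash: bool = True) -> str:
    """Return an attention backend name based on what is installed."""
    # find_spec returns None when the flash_attn package is not importable.
    has_flash = importlib.util.find_spec("flash_attn") is not None
    if prefer_flash and has_flash:
        return "flash_attention_2"
    # "sdpa" selects PyTorch's built-in scaled dot-product attention.
    return "sdpa"
```

The returned string could then be passed as `attn_implementation=pick_attn_implementation()` when loading the model.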

Trained head masks for Meta-Llama-3.1-8B-Instruct on the XNLI and FV datasets are available here (Google Drive). Place the output folder in this directory; you can then run all cells in eval.ipynb and some of the cells in playground.ipynb directly.

We provide training scripts in the scripts/ directory. You can modify them to match your own training settings.

    bash scripts/llama_xnli.sh # Train llama-3.1 on XNLI dataset

BibTeX entry for the paper:

    @inproceedings{han2025heads,
      title={Heads up! Large Language Models Can Perform Tasks Without Your Instruction via Selective Attention Head Masking},
      author={Senyu Han and Hongchuan Zeng and Kai Yu and Lu Chen},
      booktitle={Forty-second International Conference on Machine Learning},
      year={2025},
      url={https://openreview.net/forum?id=x2Dw9aNbvw}
    }