Letian Huang1, Dongwei Ye1, Jialin Dan1, Chengzhi Tao1, Huiwen Liu2, Kun Zhou3,4, Bo Ren2, Yuanqi Li1, Yanwen Guo1, Jie Guo*1

1State Key Lab for Novel Software Technology, Nanjing University

2TMCC, College of Computer Science, Nankai University

3State Key Lab of CAD&CG, Zhejiang University

4Institute of Hangzhou Holographic Intelligent Technology

[2025.08.04] 🎈 We release the code.

[2025.07.23] Birthday of the repository.

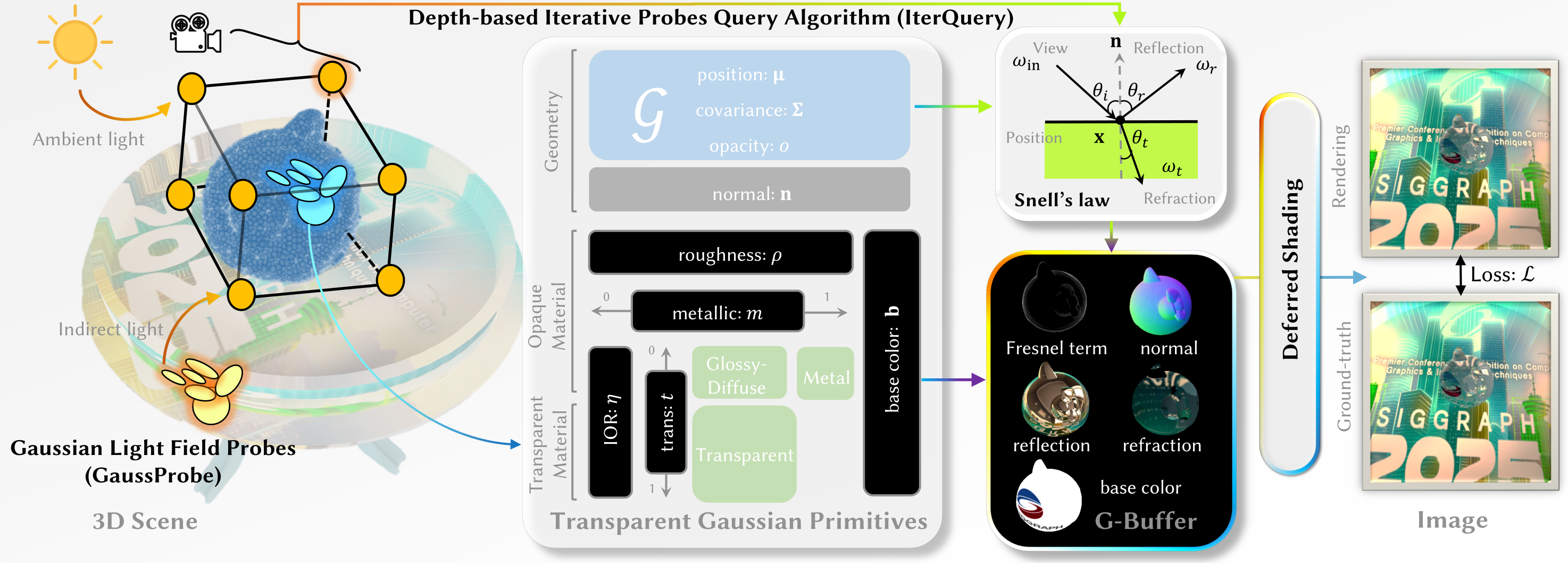

We propose TransparentGS, a fast inverse rendering pipeline for transparent objects based on 3D-GS. The main contributions are three-fold: efficient transparent Gaussian primitives for specular refraction, GaussProbe to encode ambient light and nearby contents, and the IterQuery algorithm to reduce parallax errors in our probe-based framework.

An overview of the TransparentGS pipeline. Each 3D scene is first separated into transparent objects and an opaque environment using SAM2 [Ravi et al. 2024] guided by GroundingDINO [Liu et al. 2024]. For transparent objects, we propose transparent Gaussian primitives, which explicitly encode both geometric and material properties within 3D Gaussians. These properties are rasterized into maps for subsequent deferred shading. For the opaque environment, we recover it with the original 3D-GS and bake it into GaussProbe surrounding the transparent object. The probes are then queried through our IterQuery algorithm to compute reflection and refraction.

If you find this work useful in your research, please cite:

@article{transparentgs,

author = {Huang, Letian and Ye, Dongwei and Dan, Jialin and Tao, Chengzhi and Liu, Huiwen and Zhou, Kun and Ren, Bo and Li, Yuanqi and Guo, Yanwen and Guo, Jie},

title = {TransparentGS: Fast Inverse Rendering of Transparent Objects with Gaussians},

journal = {ACM Transactions on Graphics (TOG)},

number = {4},

volume = {44},

month = {July},

year = {2025},

pages = {1--17},

url = {https://doi.org/10.1145/3730892},

publisher = {ACM New York, NY, USA}

}

- Real-time rendering and navigation of scenes that integrate traditional 3DGS, triangle meshes and reconstructed meshes (highly robust to complex occlusions).

- Secondary light effects (e.g., reflection and refraction).

- Rendering with non-pinhole camera models (e.g., fisheye or panorama).

- Material editing (e.g., IOR and base color).

Clone the repository and create an anaconda environment using

git clone git@github.com:LetianHuang/transparentgs.git --recursive

cd transparentgs

SET DISTUTILS_USE_SDK=1 # Windows only

conda env create --file environment.yml

conda activate transparentgs

The repository contains several submodules, so please install them with

pip install . # Thanks to https://github.com/ashawkey/raytracing

pip install submodules/diff-gaussian-rasterization

pip install submodules/simple-knn

pip install submodules/diff-gaussian-rasterization-fisheye

pip install submodules/diff-gaussian-rasterization-panorama

pip install submodules/nvdiffrast

Or choose a faster version (1. integrated with Speedy-Splat, using SnugBox and AccuTile; 2. using CUDA scripting to accelerate computation for 64 probes):

pip install .

pip install submodules-speedy/diff-gaussian-rasterization

pip install submodules/simple-knn

pip install submodules-speedy/diff-gaussian-rasterization-fisheye

pip install submodules-speedy/diff-gaussian-rasterization-panorama

pip install submodules-speedy/compute-trilinear-weights

pip install submodules/nvdiffrast

First, create a models folder inside the project path by

mkdir models

The data structure will be organised as follows:

transparentgs/

│── models/

│ ├── 3dgs/

│ │ ├── drjohnson.ply

│ │ ├── playroom_lego_hotdog_mouse.ply

│ │ ├── Matterport3D_h1zeeAwLh9Z_3.ply

│ │ ├── ...

│ ├── mesh/

│ │ ├── ball.ply

│ │ ├── mouse.ply

│ │ ├── bunny.ply

│ │ ├── ...

│ ├── probes/

│ │ ├── playroom_lego_hotdog_mouse/

│ │ │ ├── probes/

│ │ │ │ ├── 000_depth.exr

│ │ │ │ ├── 000.exr

│ │ │ │ ├── 333_depth.exr

│ │ │ │ ├── 333.exr

│ │ │ │ ├── ...

│ │ │ ├── probe.json

│ │ ├── ...

│ ├── meshgs_proxy/

│ │ ├── mouse.ply

│ │ ├── ...

We release several ready-to-use scenes. Please download the assets from Google Drive and move the 3dgs and mesh folders into the models/ folder.

To create a custom scene, simply follow the provided instructions to set it up. Instructions on the above data structure are as follows:

- Scenes in the `3dgs` folder should be in `.ply` format and reconstructed using traditional 3DGS, op43dgs (for reconstruction from non-pinhole cameras) or Mip-Splatting (for anti-aliasing).

- Objects in the `mesh` folder can be in any triangle mesh format (e.g., `.obj`, `.ply` or `.glb`), including both traditional and reconstructed ones.

- Probes in the `probes` folder can be baked using `Step I: Bake GaussProbe` or similar formats. The `probes.json` file specifies the positions of the probes, while the `probes/` directory stores the corresponding RGB panorama and depth panorama in EXR format.

- The `meshgs_proxy` folder is a byproduct of `Step I: Bake GaussProbe`. It contains the object converted into 3DGS format and can be used as a proxy of the mesh in `mesh` to assemble a new scene (mesh + 3DGS). Note: modifying the files in `meshgs_proxy` does not affect the final rendering results (i.e., `Step II: Boot up the renderer`). To change the proxy configuration, you can adjust the scene's position under the `3dgs` directory and rerun `Step I: Bake GaussProbe`.
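Before baking or rendering, it can help to confirm that a scene follows the layout above. The following is a minimal sketch, not part of the released code: the function name `check_scene_layout` and its arguments are hypothetical, and it only checks the paths that appear in the tree above (the scene `.ply`, the mesh, and RGB/depth EXR pairs when a probes folder already exists).

```python
from pathlib import Path


def check_scene_layout(models_dir, scene, mesh_name):
    """Return a list of expected-but-missing paths for one scene (hypothetical helper)."""
    root = Path(models_dir)
    expected = [
        root / "3dgs" / f"{scene}.ply",   # environment reconstructed with 3DGS
        root / "mesh" / mesh_name,        # triangle mesh of the transparent object
    ]
    missing = [str(p) for p in expected if not p.is_file()]

    # Probes are optional before baking; if present, each RGB panorama
    # should have a matching depth panorama next to it.
    probes_dir = root / "probes" / scene / "probes"
    if probes_dir.is_dir():
        for rgb in sorted(probes_dir.glob("*.exr")):
            if rgb.stem.endswith("_depth"):
                continue
            depth = rgb.with_name(f"{rgb.stem}_depth.exr")
            if not depth.is_file():
                missing.append(str(depth))
    return missing


if __name__ == "__main__":
    for path in check_scene_layout("./models", "playroom_lego_hotdog_mouse", "mouse.ply"):
        print("missing:", path)
```

An empty result means the files this sketch knows about are in place; it does not validate file contents.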

The first step is to bake probes for the scene that has already been set up:

python probes_bake.py --W 800 --H 800 --gs_path ./models/3dgs/playroom_lego_hotdog_mouse.ply --probes_path ./models/probes/playroom_lego_hotdog_mouse --mesh ./models/mesh/mouse.ply --begin_id 0

Command Line Arguments for probes_bake.py

- `--gs_path`: path to the trained 3D Gaussians directory as the environment (used to bake GaussProbe).

- `--probes_path`: output path of GaussProbe to be baked.

- `--mesh`: path to the mesh.

- `--W`: width of the RGBD panorama.

- `--H`: height of the RGBD panorama.

- number of probes (1/8/64). In theory, any positive integer is allowed, but the released code only supports these three fixed values.

- `--begin_id`: only to prevent OOM (Out of Memory); when GPU memory is insufficient, the process can exit and resume baking from the specified ID.

- bounding box scale ratio for the mesh.

- `--meshproxy_pitch`: the voxel size (pitch), which determines the resolution of the mesh voxelization.
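The supported probe counts 1, 8 and 64 are the cubes of 1, 2 and 4, which suggests a regular grid inside the scaled bounding box of the mesh. The sketch below illustrates that reading only; the grid placement, the function name `probe_grid` and its parameters are assumptions, not the released implementation.

```python
def probe_grid(bbox_min, bbox_max, num_probes, scale=1.0):
    """Place 1, 8 or 64 probes on a regular grid in a scaled box (assumed layout)."""
    per_axis = {1: 1, 8: 2, 64: 4}[num_probes]  # only the three supported counts
    center = [(lo + hi) / 2 for lo, hi in zip(bbox_min, bbox_max)]
    half = [scale * (hi - lo) / 2 for lo, hi in zip(bbox_min, bbox_max)]

    def coord(axis, i):
        if per_axis == 1:
            return center[axis]              # a single probe sits at the box center
        t = i / (per_axis - 1)               # 0..1 along this axis
        return center[axis] + (2 * t - 1) * half[axis]

    return [
        (coord(0, i), coord(1, j), coord(2, k))
        for i in range(per_axis)
        for j in range(per_axis)
        for k in range(per_axis)
    ]


if __name__ == "__main__":
    for p in probe_grid((0.0, 0.0, 0.0), (1.0, 1.0, 1.0), 8, scale=2.0):
        print(p)
```

With `scale` greater than 1 the probes move outward from the mesh, which is one plausible role for a bounding box scale ratio.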

Next, boot the renderer to start rendering:

python renderer.py --W 960 --H 540 --gs_path ./models/3dgs/playroom_lego_hotdog_mouse.ply --probes_path ./models/probes/playroom_lego_hotdog_mouse --mesh ./models/mesh/mouse.ply --meshproxy_pitch 0.1

Command Line Arguments for renderer.py

- `--mesh`: path to the mesh.

- the original design supports either environment map or GaussProbe. However, since a single probe with zero iteration is equivalent to the environment map, this design has been deprecated.

- `--gs_path`: path to the trained 3D Gaussians directory as the environment (used to bake GaussProbe).

- `--W`: GUI width.

- `--H`: GUI height.

- default GUI camera radius from center.

- default GUI camera fovy (can be modified in the GUI).

- `--probes_path`: path of the baked GaussProbe.

- number of probes (1/8/64). In theory, any positive integer is allowed, but the released code only supports these three fixed values. (can be modified in the GUI)

- count of iterations (0-10). In theory, any non-negative integer is allowed, but the released code only supports these eleven fixed values. (can be modified in the GUI)

- `--meshproxy_pitch`: the voxel size (pitch), which determines the resolution of the mesh voxelization.
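The faster installation option includes a `compute-trilinear-weights` submodule, which suggests that results from neighbouring probes are blended trilinearly. As a rough illustration, assuming standard trilinear interpolation over the 8 corners of one grid cell, the weights could be computed as follows. The function name and the dictionary layout are hypothetical.

```python
def trilinear_weights(p, cell_min, cell_max):
    """Weights of the 8 cell corners for point p; the weights sum to 1."""
    t = []
    for x, lo, hi in zip(p, cell_min, cell_max):
        u = (x - lo) / (hi - lo)
        t.append(min(1.0, max(0.0, u)))      # clamp points that fall outside the cell

    weights = {}
    for i in (0, 1):
        for j in (0, 1):
            for k in (0, 1):
                wx = t[0] if i else 1.0 - t[0]
                wy = t[1] if j else 1.0 - t[1]
                wz = t[2] if k else 1.0 - t[2]
                weights[(i, j, k)] = wx * wy * wz   # corner (i, j, k): 0 = min side, 1 = max side
    return weights


if __name__ == "__main__":
    for corner, w in trilinear_weights((0.25, 0.5, 0.5), (0, 0, 0), (1, 1, 1)).items():
        print(corner, w)
```

A point at a probe position gives that probe weight 1, so the blend reduces to a single-probe lookup there.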

Additionally, we offer an optional all-in-one pipeline script that produces the same effect as executing Step I and Step II independently:

python full_render_pipeline.py --W 960 --H 540 --probesW 800 --probesH 800 --gs_path ./models/3dgs/playroom_lego_hotdog_mouse.ply --probes_path ./models/probes/playroom_lego_hotdog_mouse --mesh ./models/mesh/mouse.ply --meshproxy_pitch 0.1

# equal to

# 1. python probes_bake.py --W 800 --H 800 --gs_path ./models/3dgs/playroom_lego_hotdog_mouse.ply --probes_path ./models/probes/playroom_lego_hotdog_mouse --mesh ./models/mesh/mouse.ply --begin_id 0 --meshproxy_pitch 0.1

# 2. python renderer.py --W 960 --H 540 --gs_path ./models/3dgs/playroom_lego_hotdog_mouse.ply --probes_path ./models/probes/playroom_lego_hotdog_mouse --mesh ./models/mesh/mouse.ply --meshproxy_pitch 0.1

Command Line Arguments for full_render_pipeline.py

- `--mesh`: path to the mesh.

- the original design supports either environment map or GaussProbe. However, since a single probe with zero iteration is equivalent to the environment map, this design has been deprecated.

- `--gs_path`: path to the trained 3D Gaussians directory as the environment (used to bake GaussProbe).

- `--W`: GUI width.

- `--H`: GUI height.

- default GUI camera radius from center.

- default GUI camera fovy (can be modified in the GUI).

- `--probes_path`: path of the baked GaussProbe.

- number of probes (1/8/64). In theory, any positive integer is allowed, but the released code only supports these three fixed values. (can be modified in the GUI)

- count of iterations (0-10). In theory, any non-negative integer is allowed, but the released code only supports these eleven fixed values. (can be modified in the GUI)

- `--meshproxy_pitch`: the voxel size (pitch), which determines the resolution of the mesh voxelization.

- `--probesW`: width of the RGBD panorama.

- `--probesH`: height of the RGBD panorama.

- only to prevent OOM (Out of Memory); when GPU memory is insufficient, the process can exit and resume baking from the specified ID.

- bounding box scale ratio for the mesh.

- if using this argument, it will be equivalent to running renderer.py.
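For scripting several scenes, the two-step equivalent can be assembled programmatically. The sketch below only uses flags that appear in the example commands of this README; the function name `two_step_commands` and its default values are illustrative, and the commands are built but not executed.

```python
import shlex


def two_step_commands(scene, mesh, gui_w=960, gui_h=540,
                      probes_w=800, probes_h=800, pitch=0.1):
    """Build the Step I and Step II command lines for one scene (hypothetical helper)."""
    shared = [
        "--gs_path", f"./models/3dgs/{scene}.ply",
        "--probes_path", f"./models/probes/{scene}",
        "--mesh", f"./models/mesh/{mesh}",
    ]
    # Step I: bake GaussProbe, starting from probe ID 0.
    bake = ["python", "probes_bake.py", "--W", str(probes_w), "--H", str(probes_h),
            *shared, "--begin_id", "0", "--meshproxy_pitch", str(pitch)]
    # Step II: boot up the renderer with the baked probes.
    render = ["python", "renderer.py", "--W", str(gui_w), "--H", str(gui_h),
              *shared, "--meshproxy_pitch", str(pitch)]
    return bake, render


if __name__ == "__main__":
    for cmd in two_step_commands("playroom_lego_hotdog_mouse", "mouse.ply"):
        print(shlex.join(cmd))
```

Each list can be passed to `subprocess.run(cmd, check=True)` from the repository root once the environment is activated.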

The following GUI tutorial is based on the current release. We recommend following it alongside the video on the project homepage.

The camera controls resemble those of raytracing.

- drag rotate: drag with the left mouse button.

- drag translation: drag with the middle mouse button.

- move closer: scroll the mouse wheel.

- Options: the main ways to control, aside from moving the camera.

- Debug: display the camera pose.

Common G-buffers in typical renderers (depth, mask, normal, position), with special attention to:

- reflect: the reflection component (mesh).

- refract: the refraction component (mesh).

- render: the weighted sum of the reflection and refraction components using the Fresnel term (mesh).

- gs_render: the rendering result obtained using only traditional Gaussian primitives (3DGS).

- semantic: the result of hybrid rendering with Gaussians and meshes (mesh + 3DGS). Pixels belonging to the mesh are replaced with a uniform color that represents the same semantic label (e.g., purple).
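The `render` buffer is described above as a Fresnel-weighted sum of `reflect` and `refract`. As a rough illustration of such a blend, the sketch below uses Schlick's approximation of the Fresnel term. Schlick's formula is an assumption here, as are the function names; the README only states that a Fresnel term is used, and this sketch ignores total internal reflection.

```python
def schlick_fresnel(cos_theta, ior, ior_outside=1.0):
    """Schlick's approximation of the reflectance at a dielectric interface."""
    f0 = ((ior_outside - ior) / (ior_outside + ior)) ** 2   # reflectance at normal incidence
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def blend(reflect_rgb, refract_rgb, cos_theta, ior):
    """Weighted sum of the reflection and refraction colors for one pixel."""
    f = schlick_fresnel(cos_theta, ior)
    return tuple(f * r + (1.0 - f) * t for r, t in zip(reflect_rgb, refract_rgb))


if __name__ == "__main__":
    # Facing the surface head-on, a glass-like IOR of 1.5 reflects only a few percent.
    print(blend((1.0, 1.0, 1.0), (0.2, 0.4, 0.6), cos_theta=1.0, ior=1.5))
```

Here `cos_theta` is the cosine of the angle between the view direction and the surface normal, so grazing views (values near 0) are dominated by the reflection component.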

Select the camera model.

- pinhole: the regular camera model which 3DGS also supports.

- fisheye: It can support a field of view (FOV) of up to 180°.

- panorama: It can support a field of view (FOV) of 360°.
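For the panorama model, and for the RGB and depth panoramas stored by the probes, a direction has to be mapped to a pixel. A common choice is the equirectangular (latitude-longitude) mapping sketched below. The axis convention (+y up, longitude 0 along +z) and the function names are assumptions and may differ from the convention used in the released rasterizers.

```python
import math


def dir_to_equirect(d, width, height):
    """Map a 3D direction to continuous pixel coordinates (u, v) in a panorama."""
    x, y, z = d
    norm = math.sqrt(x * x + y * y + z * z)
    lon = math.atan2(x, z)                              # [-pi, pi], 0 looks along +z
    lat = math.asin(max(-1.0, min(1.0, y / norm)))      # [-pi/2, pi/2], +y is up
    u = (lon / (2.0 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v


def equirect_to_dir(u, v, width, height):
    """Inverse mapping: pixel coordinates back to a unit direction."""
    lon = (u / width - 0.5) * 2.0 * math.pi
    lat = (0.5 - v / height) * math.pi
    return (math.cos(lat) * math.sin(lon), math.sin(lat), math.cos(lat) * math.cos(lon))


if __name__ == "__main__":
    print(dir_to_equirect((0.0, 0.0, 1.0), 800, 800))   # center of the panorama
```

The full 360° horizontal range maps to the image width and the 180° vertical range to the image height.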

Select the background of the mesh.

- black: black color as the background

- white: white color as the background

- 3DGS: hybrid rendering of 3DGS and transparent objects (`reflect`, `refract`, `render`, `normal`).

Select whether to apply normal smoothing.

As changing the number of probes involves I/O overhead, it is not recommended to modify it through the GUI. It is advisable to configure it beforehand using terminal arguments. Additionally, increasing the number of probes demands more GPU memory.

Modify the count of iterations of IterQuery (0-10). In theory, any non-negative integer is allowed, but the released code only supports these eleven fixed values. Setting it to zero disables the iterative query, which makes the improvement from IterQuery easy to see by comparison.
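To give an intuition for why iterations reduce parallax, here is a generic fixed-point parallax correction for a single probe with a depth panorama. It is not the IterQuery algorithm from the paper, only a sketch under stated assumptions: `probe_depth` is a hypothetical callable returning the distance from the probe to the scene along a unit direction, and all names are illustrative. With zero iterations the lookup direction is the ray direction itself, which matches the environment-map behaviour mentioned above.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _normalize(a):
    n = math.sqrt(_dot(a, a))   # assumes a is not the zero vector
    return tuple(x / n for x in a)


def iter_query_direction(origin, direction, probe_pos, probe_depth, iterations):
    """Refine the probe lookup direction for a ray that does not start at the probe."""
    d = _normalize(direction)
    lookup = d                                   # zero iterations: environment-map lookup
    for _ in range(iterations):
        r = probe_depth(lookup)                  # probe-to-scene distance along `lookup`
        p = tuple(c + r * l for c, l in zip(probe_pos, lookup))
        t = max(0.0, _dot(_sub(p, origin), d))   # project that scene point onto the ray
        x = tuple(o + t * di for o, di in zip(origin, d))
        lookup = _normalize(_sub(x, probe_pos))  # look at the ray point from the probe
    return lookup


if __name__ == "__main__":
    room = lambda _dir: 10.0                     # toy scene: sphere of radius 10 around the probe
    for k in (0, 1, 5):
        print(k, iter_query_direction((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 0.0), room, k))
```

In this toy scene the lookup direction converges within a few iterations to the direction of the true hit point, while the zero-iteration result ignores the offset between the ray origin and the probe.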

Modify the field of view (FOV). For fisheye cameras in particular, it can reach up to 180°.

The IOR mainly affects the refract and render G-buffers. When the IOR is approximately 1, the result is almost identical to the background, demonstrating the high quality of IterQuery (especially with 64 probes).
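The reason an IOR close to 1 reproduces the background follows from Snell's law: the refracted direction then equals the incident direction. The sketch below shows the standard vector form of refraction for a ray entering the object from air; the function name is illustrative and this is not taken from the released code.

```python
import math


def refract(incident, normal, ior):
    """Refract a unit `incident` direction at a surface with unit `normal`.

    The ray travels from air (index 1) into a medium with index `ior`;
    `normal` points against the incident direction.
    """
    eta = 1.0 / ior
    cos_i = -sum(i * n for i, n in zip(incident, normal))
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None   # total internal reflection (only possible when ior < 1)
    return tuple(eta * i + (eta * cos_i - math.sqrt(k)) * n
                 for i, n in zip(incident, normal))


if __name__ == "__main__":
    s = math.sin(math.radians(45.0))
    c = math.cos(math.radians(45.0))
    print(refract((s, 0.0, -c), (0.0, 0.0, 1.0), 1.0))   # unchanged direction
    print(refract((s, 0.0, -c), (0.0, 0.0, 1.0), 1.5))   # bent towards the normal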

Note that only the scale of the 3dgs primitives is modified, not the overall scene scaling. Therefore, reducing the scale allows us to observe the gaps between Gaussians.

Control the sampling rate of mesh ray tracing.

Modify the color of the mesh.

- To release.

- Release the code.

- Release the code of `Standalone demo : segmentation`.

- Release the dataset of transparent objects that we captured ourselves.

- Code optimization.

This project is built upon 3DGS, GaussianShader, GlossyGS, op43dgs, raytracing, nvdiffrast, instant-ngp, SAM2, GroundingDINO, SAM, GroundedSAM, and so on. Please follow the licenses. We thank all the authors for their great work and repos. We sincerely thank our colleagues for their valuable contributions to this project.