- Add support for SAM2

- Add support for TensorRT

- Upload the technique report

This repository introduces new features and improvements to FoundationPose, focusing on enhancing 6D pose estimation and tracking capabilities. The key updates include:

- Pose Estimation and Tracking Using Accurate CAD Models Without Depth

- Pose Estimation and Tracking Using CAD Models With Unknown Scale

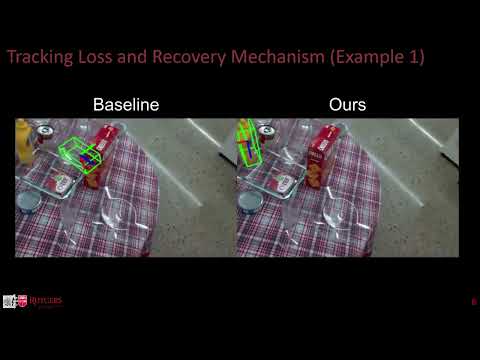

- Auto-Recovery Mechanism for Tracking Loss in Pose Tracking

To set up the enhanced FoundationPose environment:

- Follow the instructions in the original README to install FoundationPose.

- Install XMem or SAM2 for object tracking.

- Install Metric3D or ZoeDepth for monocular depth estimation.

The original FoundationPose requires depth input for both pose estimation and tracking. However, if a CAD model with accurate scale is available, depth input becomes unnecessary.

Below is the framework for pose estimation without depth. Download the demo dataset from the original FoundationPose repo then run:

python run_demo_without_depth.pydemo1.mp4

For another demo, download our custom data captured using D405 and place it in the demo_data folder. Then run

python run_demo_without_depth.py --mesh_file ./demo_data/d405_demo/mesh/model.obj --test_scene_dir ./demo_data/d405_demo/

d405.mp4

In the following demo, we scale the original CAD model. The original FoundationPose fails when the CAD model is of unkown scale. On the contrary, our scale recovery is able to recover the true scale using the first frame of depth.

python run_demo_unkown_scale.pyunkown_scale.mp4

Nowadays, many AIGC (AI-Generated Content) methods can generate 3D models from single-view or multi-view RGB images. Examples include Zero-1-to-3, One-2-3-45, One-2-3-45++, and others. These approaches can effectively create CAD models. However, the models generated by these methods lack true scale information, making them unsuitable for direct use in FoundationPose.

In this demo, we demonstrate how to combine One-2-3-45++, the monocular depth estimation model ZoeDepth, and FoundationPose to perform pose tracking and estimation using only RGB images. We first captured a video of the object using an iPhone, then generated a CAD model using One-2-3-45++. Next, we created a pseudo-depth map with ZoeDepth and used FoundationPose for pose tracking and estimation. The demo dataset can be downloaded from Demo_colacan, and the following command can be used to run the demo:

python run_demo_colacan.pyone2345.mp4

In the tracking process of FoundationPose, a refiner network and the pose from the previous frame are used to predict the object pose for the current frame. This approach is effective because the pose for the current frame is typically close to the previous frame, provided the object is not moving rapidly or remains in view. However, FoundationPose can easily lose track of an object when it is briefly obstructed by other objects. A common solution to this issue is to re-register the object's pose once it becomes visible again. This poses certain challenges.

-

Detecting Tracking Loss

- We used a pretrained network, XMem, to track the object and obtain its mask.

- If the mask of the object in the current frame is smaller than a predefined threshold, we consider the object tracking as lost.

- When the object reappears and the mask returned by XMem exceeds the threshold, we use the register network to re-evaluate the object's pose based on the mask.

-

Handling Pose Inaccuracy

- The object's pose output by the register network may still be inaccurate, especially if the object mask is small.

- To address this, we continue the re-evaluation process until the object returns to a well-tracked state.

- We use a heuristic: if the position predicted by the refiner network is sufficiently close to the coarse position estimated from the mask and depth, we terminate the re-evaluation process and switch back to the tracking state.

-

Optimizing for Real-Time Performance

- To improve real-time performance, we reduce the number of hypothesis poses by 80%, selecting only those close to the pose before tracking was lost.

- The final tracking frame rate of FoundationPose + XMem is approximately 22 FPS on an RTX 3090 under a resolution of 640 x 480, using default refining iteration parameters.

First download the ClearPose dataset.

Below is the command line to run a demo on set 8 scene 2 in the ClearPose dataset:

python run_demo_clearpose.pydemo_tracking.mp4

- Wen, B., Yang, W., Kautz, J., & Birchfield, S. (2024). Foundationpose: Unified 6d pose estimation and tracking of novel objects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 17868-17879).

- Cheng, H. K., & Schwing, A. G. (2022, October). Xmem: Long-term video object segmentation with an atkinson-shiffrin memory model. In European Conference on Computer Vision (pp. 640-658). Cham: Springer Nature Switzerland.