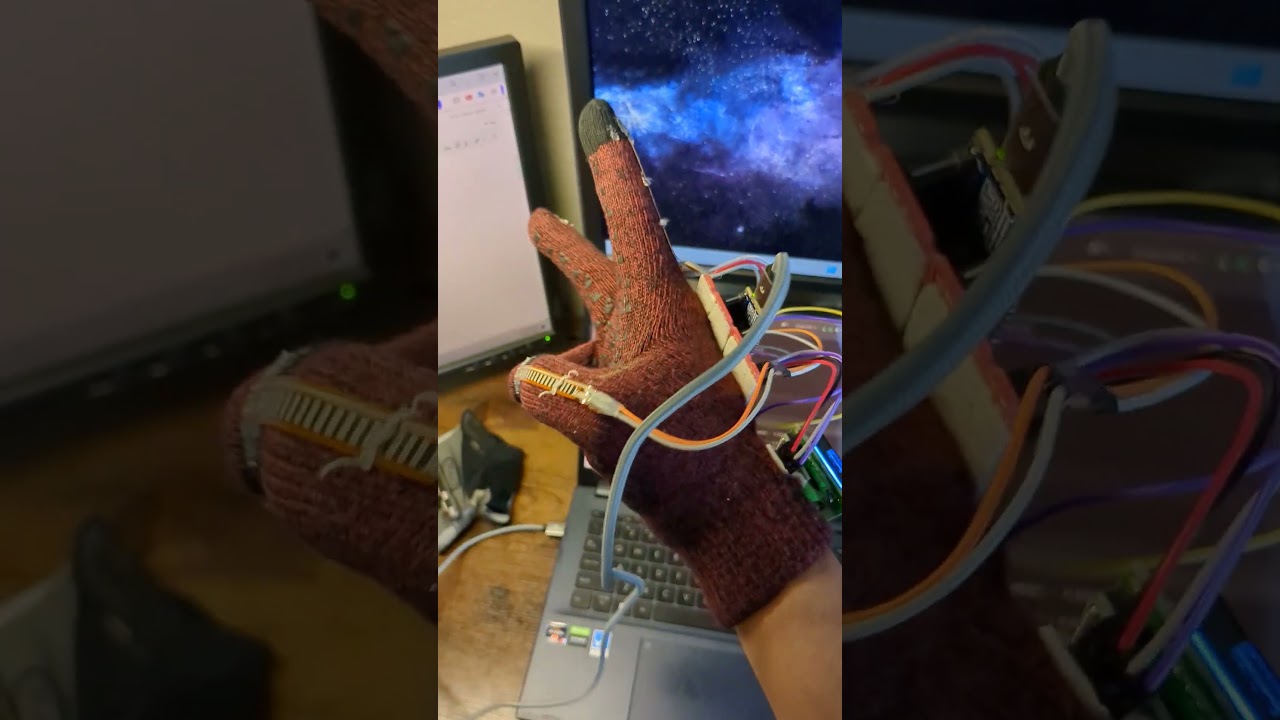

The ASL Glove is capable of translating physical hand gestures representing the ASL alphabet into text. We use the Arduino Nano 33 BLE Sense Rev2 to get data from flex sensors (resistors), an accelerometer, and a gyroscope. This data is sent through Serial to a computer and fed into a Python KNN algorithm to make a prediction for the current character. Finally, we show the text through an on-glove LCD screen and a custom Bluetooth Low Energy App. This repository aims to give a more in-depth analysis of our design choices, how the glove works, and how you can make your own.

As we can see, most of the characters in the ASL alphabet can be predicted by the five individual multisets containing the relative position of {joints and phalanges} for each finger. Unfortunately, such measurements would require a lot of expensive and very precise sensors. However, we can approximate these multisets as the individual "flexness" of each finger and add an extra "flexness" value for the back of the hand.

It is important to note that two different multisets of a finger can map to the same "flexness" value (the mapping is not injective), but we assume this to be (a) unlikely and (b) negligible once the other fingers' measurements are taken into account. For measuring this "flexness," we use Adafruit's short flex sensors.

Sadly, flex sensors alone are not enough to accurately predict all characters. Some letters share the same multiset of relative positions, but the fingers are (a) oriented differently, (b) in motion, or (c) spaced differently. We can fix (a) and (b) by using an accelerometer, which effectively gives us the equivalent of a position multiset with absolute orientation, but there is not much we can do about (c).

While it is true that any Arduino can perform analogReads (for flex sensors), we need something that is small and wearable. This limits our options mostly to Arduino Nanos. Considering that we also need an accelerometer, and that we want to show predictions on a custom app through Bluetooth, the Arduino Nano 33 BLE Sense Rev2 is a good choice. Additionally, while we initially did not have a preference for Low Energy over standard Bluetooth, this microcontroller uses BLE, is low power, and works on 3.3 V logic, which makes it a good fit for eventually implementing on-glove predictions and power without the need for a computer.

The easiest way to get data from the flex sensors is to map the resistance to a number by using analogRead. This function maps a [0, 3.3] voltage to a [0, 1023] value.

While Arduino cannot directly measure resistance, we can read the v_out from a voltage divider made up of a flex sensor and an extra resistance. The function we want to maximize is the span of analogRead values between the two extremes of the sensor:

$$f(R) = 1023\left(\frac{R}{R + R_-} - \frac{R}{R + R_+}\right)$$

Where:

- $f$ is the range of values we can get from analogRead
- $R_-$ and $R_+$ represent the lower and upper bounds of the flex sensor's resistance
- $R$ is the extra resistance we are looking for

Looking at the datasheet, we see an expected flat resistance of 25 kΩ and a maximum bent resistance of 125 kΩ. Double-checking with a multimeter, we found an actual range of [30, 130] kΩ in our case.

We could take the derivative with respect to R to find the roots of the function, but it is not necessary if using Desmos.
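For reference, the analytic route is short. The derivation below assumes the objective is the difference between the divider's output at the two sensor extremes, scaled to the 10-bit analogRead range, with $R_-$ and $R_+$ the lower and upper sensor resistances; this form is an assumption consistent with the definitions above.

```latex
f(R) = 1023\left(\frac{R}{R + R_-} - \frac{R}{R + R_+}\right)
     = \frac{1023\,(R_+ - R_-)\,R}{(R + R_-)(R + R_+)}

\frac{df}{dR} = \frac{1023\,(R_+ - R_-)\,(R_- R_+ - R^2)}{(R + R_-)^2\,(R + R_+)^2} = 0
\;\Longrightarrow\; R = \sqrt{R_- R_+}

R = \sqrt{30 \cdot 130}\ \mathrm{k\Omega} = \sqrt{3900}\ \mathrm{k\Omega} \approx 62.45\ \mathrm{k\Omega}
```

Under this assumption, the best fixed resistor is the geometric mean of the two sensor extremes.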

By inspection, we see that the optimal value of R is 62.45 kΩ. Since we did not have that specific resistance, we built an R_eq of 60 kΩ, very close to the target.
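As a quick numerical cross-check of that choice, the sketch below sweeps candidate resistors and evaluates the analogRead span for each. It assumes the objective is the difference between the divider's output at the two measured sensor extremes (30 kΩ and 130 kΩ), scaled to the 10-bit range. The names `adc_span` and `best_resistor` are illustrative and not taken from the repository.

```python
# Assumed objective: span of analogRead counts between the flat and fully
# bent sensor, for a voltage divider with a fixed resistor r_fixed (in kΩ).
ADC_MAX = 1023        # 10-bit analogRead range
R_FLAT_KOHM = 30.0    # measured flat resistance
R_BENT_KOHM = 130.0   # measured fully bent resistance


def adc_span(r_fixed: float) -> float:
    """Return the analogRead span (in counts) for a given fixed resistor."""
    low = r_fixed / (r_fixed + R_BENT_KOHM)
    high = r_fixed / (r_fixed + R_FLAT_KOHM)
    return ADC_MAX * (high - low)


def best_resistor(start: float = 1.0, stop: float = 200.0, step: float = 0.01) -> float:
    """Sweep candidate resistors and return the one with the largest span."""
    n = int(round((stop - start) / step)) + 1
    candidates = [start + i * step for i in range(n)]
    return max(candidates, key=adc_span)


if __name__ == "__main__":
    r_opt = best_resistor()
    print(f"optimal R ~ {r_opt:.2f} kΩ, span ~ {adc_span(r_opt):.1f} counts")
    print(f"span with 60 kΩ ~ {adc_span(60.0):.1f} counts")
```

Under these assumptions, the curve is very flat around its maximum, so the 60 kΩ equivalent resistance gives up well under one count of range compared with the optimum.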

We repeat this circuit for the back of the hand and each finger (6 sensors in total), which are read on pins A0, A1, A2, A3, A6, and A7 (A4 and A5 double as the board's I²C pins).

```cpp
short fingers[6];  // one reading per flex sensor

...

// A4 and A5 are skipped, so the last two sensors use A6 and A7.
fingers[0] = analogRead(A0);
fingers[1] = analogRead(A1);
fingers[2] = analogRead(A2);
fingers[3] = analogRead(A3);
fingers[4] = analogRead(A6);
fingers[5] = analogRead(A7);
```

The interesting thing about the accelerometer is that it does not measure coordinate acceleration but rather proper acceleration.

This means that, even when the accelerometer is in uniform motion (a = 0) it still measures the standard gravitational acceleration.

Knowing this, we can measure the fraction of earth's gravitational acceleration that projects onto a given axis (aka dot product).

Furthermore, if we take the inverse cosine cos⁻¹(a) of that fraction, we get the angle θ relative to the gravitational acceleration vector. In our case, we simply set a constant PARALEL_AXIS_THRESHOLD_G = 0.8, which is the minimum value of the projection onto that axis. This is equivalent to requiring the axis to lie within θ = cos⁻¹(0.8) ≈ 36.9° of the gravity vector.
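The relationship between the projection and the angle can be sketched in a few lines of Python. This is a minimal sketch, assuming the hand is at rest so that the measured acceleration has a magnitude of about 1 g. The helper names `angle_to_gravity_deg` and `is_parallel_to_gravity` are hypothetical and do not come from the repository.

```python
import math

PARALEL_AXIS_THRESHOLD_G = 0.8  # value from the text, in units of g


def angle_to_gravity_deg(projection_g: float) -> float:
    """Angle (degrees) between an axis and gravity, given its projection in g."""
    # Clamp to [-1, 1] so noise slightly above 1 g does not break acos.
    clamped = max(-1.0, min(1.0, projection_g))
    return math.degrees(math.acos(clamped))


def is_parallel_to_gravity(projection_g: float) -> bool:
    """True when the axis is close enough to vertical, in either direction."""
    return abs(projection_g) > PARALEL_AXIS_THRESHOLD_G


if __name__ == "__main__":
    print(round(angle_to_gravity_deg(PARALEL_AXIS_THRESHOLD_G), 2))
```

Comparing the raw projection against the threshold avoids calling the inverse cosine on every sample, which is presumably why a constant in g is used instead of an angle.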

What we can do is, for instance, since l, g, and q look pretty much the same to the flex sensors, train the KNN on only one of those three letters, let's say l, instead of all three.

Now, when we get a prediction saying the current character is l, we also check the values of the x and z axes to see whether it is actually an l, g, or q.

```python
match prediction:
    case 'l':
        # l, g, and q share a flex profile; orientation tells them apart
        if abs(arr[X_AXIS_G]) > PARALEL_AXIS_THRESHOLD_G:
            prediction = 'g'
        elif abs(arr[Z_AXIS_G]) > PARALEL_AXIS_THRESHOLD_G:
            prediction = 'q'
    case 'k':
        if not abs(arr[Y_AXIS_G]) > PARALEL_AXIS_THRESHOLD_G:
            prediction = 'p'
    case 'u' | 'v':
        if abs(arr[X_AXIS_G]) > PARALEL_AXIS_THRESHOLD_G:
            prediction = 'h'
```

We can do something similar for the letters that are the same but in motion, for instance, d and z. In this case we measure that the standard gravitational acceleration drops when we move the hand downwards.

Here LINEAR_THRESHOLD_G = -0.5.

```python
if np.max(linear_acc) > LINEAR_THRESHOLD_G:
    match prediction:
        case 'd':
            prediction = 'z'
        case 'i':
            prediction = 'j'
```

While showing a letter on the LCD/App of the current prediction is already great, we also want to be able to spell and form words/sentences.

So far our prediction is based on the instantaneous values of the flex sensors and accelerometer data. We need a way to tell Python when to poll a character.

That is, we need a way to know when to append a letter to a sentence. We might make mistakes when trying to spell too, so being able to delete single characters or an entire sentence would be useful as well.

Spaces would also be neat so that we can separate words. Fortunately, the Arduino Nano 33 BLE Sense Rev2 is also equipped with a gyroscope. This sensor measures the angular velocity ω = dθ/dt about each axis, in degrees per second (DPS).

What we can do is use this data to perform these commands. We chose angular motion in:

- x axis to append letters
- y axis to add a space
- z axis to delete a character (three deletions in a row delete the entire sentence)
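The gesture mapping above can be sketched as a small decoder. This is a minimal sketch, not the repository's code. It uses the threshold values given just below and the command characters from the message format described later in this README. It assumes that rotation in either direction counts (hence the absolute values), that deletion takes priority over the other gestures, and that a space is encoded as a space character. The `GestureDecoder` class is hypothetical.

```python
ADD_THRESHOLD_DPS = 220
DELETE_THRESHOLD_DPS = 300
SPACE_THRESHOLD_DPS = 220

DELETE_CHAR = "*"
DELETE_SENTENCE = "!"
SPACE = " "  # assumed encoding of the "add a space" command


class GestureDecoder:
    """Turn gyroscope readings (in DPS) into at most one command per sample."""

    def __init__(self) -> None:
        self.consecutive_deletes = 0

    def decode(self, gyro_xyz, prediction: str) -> str:
        gx, gy, gz = (abs(v) for v in gyro_xyz)
        if gz > DELETE_THRESHOLD_DPS:
            self.consecutive_deletes += 1
            if self.consecutive_deletes >= 3:
                # Third deletion in a row clears the whole sentence.
                self.consecutive_deletes = 0
                return DELETE_SENTENCE
            return DELETE_CHAR
        if gx > ADD_THRESHOLD_DPS:
            self.consecutive_deletes = 0
            return prediction  # command equal to prediction: append letter
        if gy > SPACE_THRESHOLD_DPS:
            self.consecutive_deletes = 0
            return SPACE
        return ""  # no gesture: only the live prediction is shown
```

A real implementation would also need some debouncing, since a single flick of the wrist can stay above the threshold for several consecutive samples.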

We can select appropriate values by inspection:

```python
ADD_THRESHOLD_DPS = 220
DELETE_THRESHOLD_DPS = 300
SPACE_THRESHOLD_DPS = 220
```

We have been talking about how we can use the values from the flex sensors, accelerometer, and gyroscope, but we have yet to talk about how the Arduino and the KNN communicate.

communicate.py gets this job done. The Arduino communicates with the computer via Serial, sending a comma-separated string of the flex sensor, accelerometer, and gyroscope values. We use pyserial for Python to read the data. We load the Arduino port and the KNN model path from a data.json file.

Inside our main while loop, we read the Arduino Serial data every 100 ms: we decode it, separate the values, and put them into a list. We then convert this list into an array and average the last 15 values of the flex sensors to send to the KNN model. After we get a prediction, a message is sent to the Arduino to represent the command/action we want it to take. The message has the following structure:
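The decoding and averaging steps of that loop can be sketched as follows. This is a minimal sketch under stated assumptions: each Serial line is taken to hold 12 values in the order flex (6), accelerometer (3), gyroscope (3), and the names `parse_line` and `FlexAverager` are hypothetical. The Serial port itself is left out so the example runs on its own; in the real script the raw bytes would come from pyserial.

```python
from collections import deque

NUM_FLEX = 6   # five fingers plus the back of the hand
WINDOW = 15    # number of recent flex readings to average


def parse_line(raw: bytes):
    """Decode one Serial line into (flex, accel, gyro) lists of floats."""
    values = [float(v) for v in raw.decode("utf-8").strip().split(",")]
    if len(values) != NUM_FLEX + 6:
        raise ValueError(f"expected {NUM_FLEX + 6} values, got {len(values)}")
    return values[:NUM_FLEX], values[NUM_FLEX:NUM_FLEX + 3], values[NUM_FLEX + 3:]


class FlexAverager:
    """Keep the last `window` flex readings and return their per-sensor mean."""

    def __init__(self, window: int = WINDOW) -> None:
        self.history = deque(maxlen=window)

    def update(self, flex):
        self.history.append(list(flex))
        n = len(self.history)
        return [sum(sample[i] for sample in self.history) / n for i in range(NUM_FLEX)]


if __name__ == "__main__":
    # Made-up example line, for illustration only.
    line = b"512,600,430,700,650,300,0.01,-0.02,0.98,1.5,-0.7,0.2\r\n"
    flex, accel, gyro = parse_line(line)
    print(FlexAverager().update(flex))
```

Averaging over a fixed-length window smooths out sensor noise while still letting the prediction follow the hand within a second or two at a 100 ms polling interval.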

```python
sendMsg = prediction + ',' + command
```

From here, five different things can happen:

- if command is an empty character, the Arduino shows the instantaneous prediction
- if command is the same as prediction, the letter is appended to the sentence
- if command is *, we delete a character
- if command is !, we delete the sentence
- if command is a space character, we add a space
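To make the protocol concrete, the cases above can be modeled in a few lines of Python. This is only an illustrative model: on the glove this handling runs on the Arduino, and the functions `parse_message` and `apply_command` are hypothetical. It assumes the space command is sent as a single space character.

```python
def parse_message(message: str):
    """Split 'prediction,command' on the first comma only."""
    prediction, _, command = message.partition(",")
    return prediction, command


def apply_command(sentence: str, prediction: str, command: str) -> str:
    """Return the updated sentence after handling one message."""
    if command == "":
        return sentence               # only the live prediction changes
    if command == "*":
        return sentence[:-1]          # delete the last character
    if command == "!":
        return ""                     # delete the whole sentence
    if command == " ":
        return sentence + " "         # add a space (assumed encoding)
    if command == prediction:
        return sentence + prediction  # append the predicted letter
    return sentence                   # unknown command: ignore it


if __name__ == "__main__":
    sentence = ""
    for msg in ["h,h", "i,i", "i, ", "a,*", "a,"]:
        sentence = apply_command(sentence, *parse_message(msg))
    print(repr(sentence))
```

Splitting on the first comma only keeps the parser simple, since both fields are single characters.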

BLE data is organized and shared through services and characteristics. As the ArduinoBLE library explains, BLE works kind of like a bulletin board. A peripheral device posts services, each of which is a collection of characteristics, while a characteristic holds the actual data value we want to share.

In our case, the peripheral device is the board, while the central device is the phone with the Android App. We have a single service, messageService, and two characteristics, predictionCharacteristic and sentenceCharacteristic, which send the instantaneous prediction and the sentence, respectively.

```cpp
BLEService messageService(BLE_UUID_MESSAGE_SERVICE);

// One character for the live prediction, up to COLS characters for the sentence.
BLEStringCharacteristic predictionCharacteristic(BLE_UUID_PREDICTION_CHARACTERISTIC, BLENotify, 1);
BLEStringCharacteristic sentenceCharacteristic(BLE_UUID_SENTENCE_CHARACTERISTIC, BLENotify, COLS);
```

We have added the BLENotify property to both characteristics, which means that, once our Android app is subscribed to those characteristics, it is automatically notified whenever the board modifies their value.

Now that we talked about what runs on the computer, let's talk about the embedded side. Arduino_ASL.ino is what runs on the board.

In setup, we configure our main peripherals, sensors, and communication protocols. These are (a) the built-in LED, (b) Serial, (c) the IMU (accelerometer and gyroscope), (d) the LCD, and (e) BLE.

As we loop through the code, the Arduino constantly sends data through the Serial port to the Python script. Specifically, we send comma-separated values representing the analogRead values of the flex sensors and the x, y, z values of the accelerometer and gyroscope. Lastly, we handle the prediction sent to the Arduino by the Python script, along with the command, if any. After this, we update the LCD to reflect the current sentence.

The k-nearest neighbors (KNN) algorithm is a non-parametric, supervised learning classifier that uses proximity to make classifications or predictions about the grouping of an individual data point. In our case, it takes the current reading of our 6 flex sensors and compares it to the data we have collected for each letter in a 6-dimensional space. It then matches our current set of values to the nearest "neighbour" in this 6-dimensional space, thus obtaining our predicted letter.
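The idea can be illustrated with a tiny pure-Python classifier. This is a minimal sketch, not the model used in the repository: the training readings are made up for illustration, the choice of k = 3 is an assumption, and `knn_predict` is a hypothetical helper.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    `train` is a list of (features, label) pairs, each with 6 flex readings.
    """
    neighbours = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Made-up readings for illustration only; real data comes from the glove.
TRAIN = [
    ([300, 700, 700, 700, 700, 500], "a"),
    ([310, 690, 710, 705, 695, 505], "a"),
    ([305, 705, 695, 700, 690, 495], "a"),
    ([600, 300, 300, 300, 300, 500], "b"),
    ([610, 310, 295, 305, 300, 510], "b"),
    ([605, 305, 300, 295, 310, 490], "b"),
]

if __name__ == "__main__":
    print(knn_predict(TRAIN, [302, 698, 702, 699, 701, 500]))
```

With k = 1 this reduces to picking the single closest recorded sample; larger values of k trade some sensitivity for robustness against noisy training readings.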

- Glove

- Arduino Nano 33 BLE Sense Rev2

- Short Flex Sensors (6)

- I²C LCD

- Extra resistor R

- Breadboard/protoboard

- Jumpers

- Computer (KNN, power)

Giovanni Bernal Ramirez (embedded, App)

So Hirota (KNN)

Dehao Lin (sewing, presentation)

Li-Pin Chang (App, presentation)