This repository contains the final project submitted during CV Week 2024, organized by Yandex Research and the Yandex School of Data Analysis (SDA). It provides a comprehensive implementation of Consistency Models and their variants for accelerating multi-step diffusion models. The notebook demonstrates how to distill a teacher diffusion model (Stable Diffusion 1.5) into a student model that generates high-quality images in significantly fewer steps.


Diffusion Models Overview:

- Explanation of forward and reverse diffusion processes.

- Introduction to DDIM solvers for efficient sampling.
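
The forward process and a deterministic DDIM update can be sketched in a few lines of NumPy. This is a minimal sketch, not the notebook's code: the "scaled linear" beta schedule with `beta_start=0.00085`, `beta_end=0.012` and 1000 steps is assumed to match Stable Diffusion's defaults, and the function names are hypothetical.

```python
import numpy as np


def scaled_linear_alphas_cumprod(num_steps=1000, beta_start=0.00085, beta_end=0.012):
    # "Scaled linear" schedule: betas are linear in sqrt-space (assumed SD 1.5 defaults)
    betas = np.linspace(beta_start ** 0.5, beta_end ** 0.5, num_steps) ** 2
    return np.cumprod(1.0 - betas)  # alpha_bar_t for t = 0 .. num_steps - 1


def q_sample(x0, noise, alpha_bar_t):
    # Forward process: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise
    return np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * noise


def ddim_step(x_t, eps_pred, alpha_bar_t, alpha_bar_s):
    # Deterministic DDIM (eta = 0) jump from timestep t to an earlier timestep s
    x0_pred = (x_t - np.sqrt(1.0 - alpha_bar_t) * eps_pred) / np.sqrt(alpha_bar_t)
    return np.sqrt(alpha_bar_s) * x0_pred + np.sqrt(1.0 - alpha_bar_s) * eps_pred


alphas = scaled_linear_alphas_cumprod()
rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 8, 8))
eps = rng.standard_normal(x0.shape)
x_t = q_sample(x0, eps, alphas[999])
# With a perfect noise prediction, one DDIM jump lands exactly on the forward-noised x_s
x_s = ddim_step(x_t, eps, alphas[999], alphas[499])
print(np.allclose(x_s, q_sample(x0, eps, alphas[499])))  # True
```

Because the update is deterministic, a solver like this can jump from `t` to any earlier `s` in a single call, which is what makes DDIM convenient as the teacher's ODE solver during distillation.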


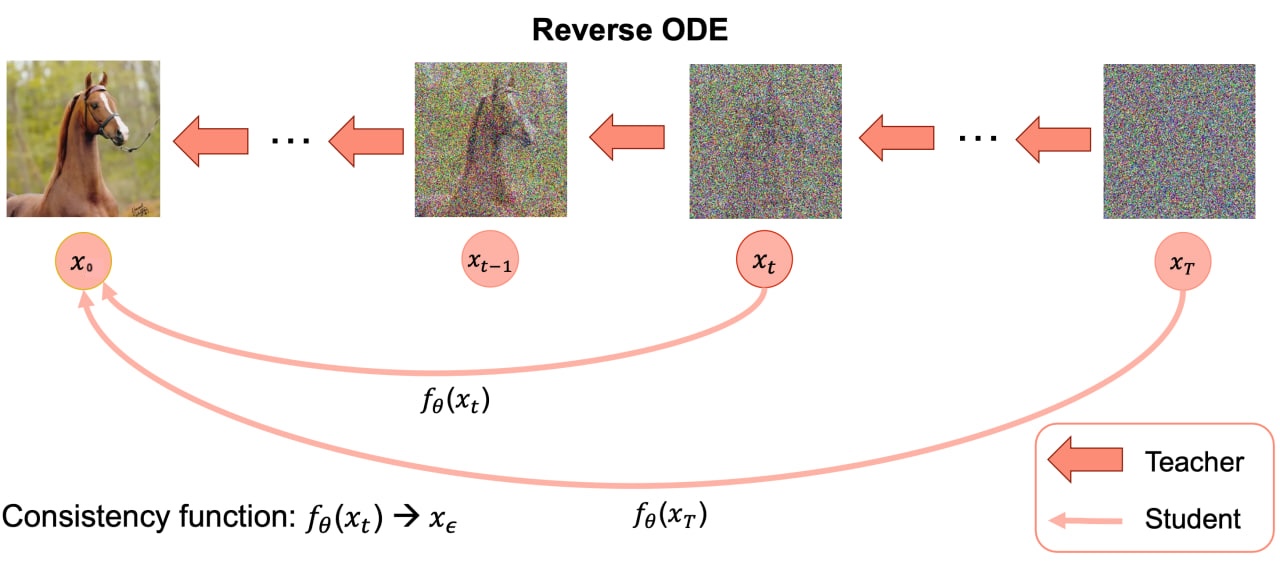

Consistency Models:

- Learn a "consistency function" to predict clean data directly from noisy data in one step.

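- Train models with a self-consistency objective (all points on one trajectory map to the same output) and a boundary condition (the function is the identity at the smallest timestep).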
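
The boundary condition is usually enforced by construction rather than by the loss. Below is a minimal sketch of the skip-connection parameterization from the original Consistency Models paper, assuming its continuous time variable with `SIGMA_DATA = 0.5` and `T_MIN = 0.002`; other implementations (e.g. latent consistency models) use variants of these coefficients adapted to discrete timesteps, so the notebook's exact form may differ. `dummy_net` is a hypothetical stand-in for the U-Net.

```python
import math

SIGMA_DATA = 0.5  # assumed data standard deviation (value used in the Consistency Models paper)
T_MIN = 0.002     # assumed smallest time, i.e. the boundary point


def c_skip(t):
    # Equals 1 at t = T_MIN and decays towards 0 for large t
    return SIGMA_DATA ** 2 / ((t - T_MIN) ** 2 + SIGMA_DATA ** 2)


def c_out(t):
    # Equals 0 at t = T_MIN, so the network output is ignored at the boundary
    return SIGMA_DATA * (t - T_MIN) / math.sqrt(SIGMA_DATA ** 2 + t ** 2)


def consistency_fn(x, t, network):
    # f(x, t) = c_skip(t) * x + c_out(t) * F(x, t); f(x, T_MIN) = x holds by construction
    return c_skip(t) * x + c_out(t) * network(x, t)


def dummy_net(x, t):
    return 100.0  # hypothetical network output, deliberately far from x


print(consistency_fn(3.0, T_MIN, dummy_net))  # 3.0
```

Even a network that outputs garbage cannot violate the boundary condition, so training only has to enforce self-consistency.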


Consistency Distillation:

- Use a pre-trained teacher model to guide the training of the student model.

- Incorporate Classifier-Free Guidance (CFG) for improved image quality.
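
One distillation loss term with a guided teacher might look like the sketch below. It is written in NumPy with hypothetical callables (`teacher`, `student`, `target_student`) in place of the real networks; the squared L2 distance and the single DDIM step are simplifying assumptions, and the notebook may use a different distance or solver.

```python
import numpy as np


def cfg_eps(eps_uncond, eps_cond, w):
    # Classifier-free guidance: extrapolate from the unconditional towards the conditional prediction
    return eps_uncond + w * (eps_cond - eps_uncond)


def ddim_step(x_t, eps, a_t, a_s):
    # Deterministic DDIM jump t -> s (a_* are cumulative alpha products)
    x0 = (x_t - np.sqrt(1.0 - a_t) * eps) / np.sqrt(a_t)
    return np.sqrt(a_s) * x0 + np.sqrt(1.0 - a_s) * eps


def cd_loss(x_t, a_t, a_s, teacher, student, target_student, w):
    """One consistency-distillation loss term (sketch).

    teacher(x, a, cond) -> noise prediction; student / target_student(x, a) -> x0 estimate.
    All three callables are hypothetical stand-ins for the real networks.
    """
    eps = cfg_eps(teacher(x_t, a_t, cond=False), teacher(x_t, a_t, cond=True), w)
    x_s = ddim_step(x_t, eps, a_t, a_s)   # one guided teacher solver step t -> s
    target = target_student(x_s, a_s)     # target branch: no gradient in a real training loop
    pred = student(x_t, a_t)
    return np.mean((pred - target) ** 2)  # squared L2 as the distance d, for simplicity
```

With `w = 1` the guided prediction reduces to the conditional one; larger values (7.5 is a common Stable Diffusion setting) strengthen prompt adherence, and distilling with guidance bakes that behavior into the student.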


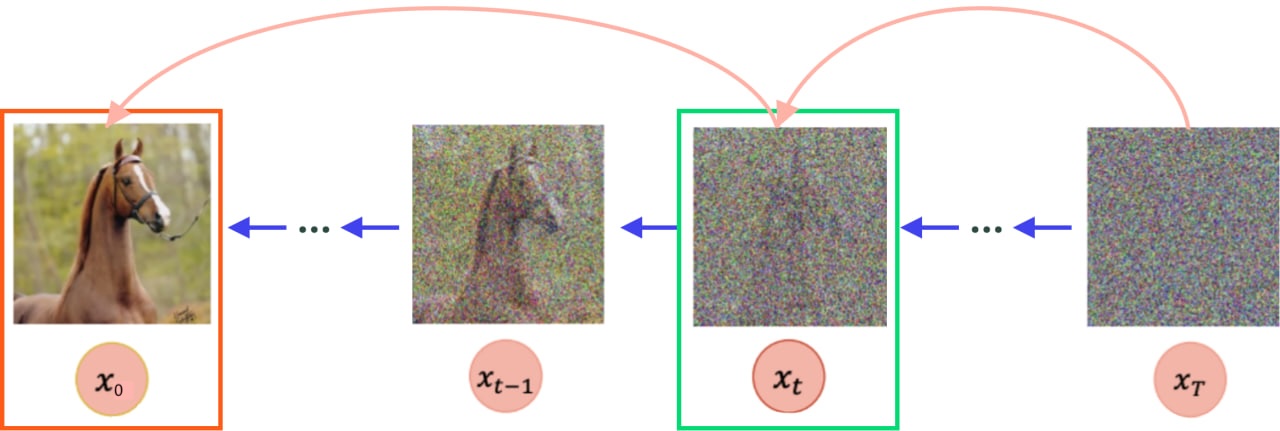

Multi-Boundary Consistency Distillation:

- Divide the diffusion trajectory into multiple segments for easier training.

- Achieve deterministic sampling with improved quality.
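
A minimal sketch of the segmentation, assuming 1000 training timesteps split into evenly spaced segments whose lower edges act as boundaries (both assumptions; the notebook may place boundaries differently). The helper names are hypothetical, and `f(x, t, b)` stands in for a student that maps `x_t` to boundary `b`.

```python
import numpy as np


def segment_boundaries(num_train_steps=1000, num_segments=4):
    # Split [0, num_train_steps) evenly; the lower edge of each segment is its boundary
    return np.linspace(0, num_train_steps, num_segments + 1).astype(int)[:-1]


def boundary_for(t, boundaries):
    # Largest boundary <= t: the point a segment-wise consistency function maps x_t to
    idx = np.searchsorted(boundaries, t, side="right") - 1
    return int(boundaries[idx])


def multiboundary_sample(x_T, t_start, boundaries, f):
    # Deterministic chain: jump from boundary to boundary without re-injecting noise
    x, t = x_T, t_start
    for b in sorted(boundaries.tolist(), reverse=True):
        x = f(x, t, b)  # hypothetical student call mapping x_t to the boundary b
        t = b
    return x


bounds = segment_boundaries()
print(bounds.tolist())            # [0, 250, 500, 750]
print(boundary_for(999, bounds))  # 750
```

Because no noise is re-injected between segments, the same starting noise always yields the same image, and four segments correspond to four student calls.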


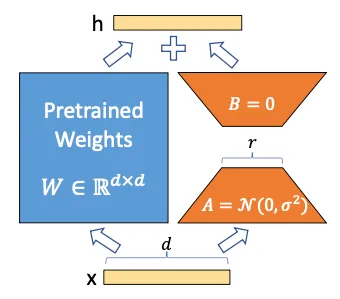

Efficient Training Techniques:

- Gradient checkpointing to save memory.

- LoRA (Low-Rank Adapters) for parameter-efficient fine-tuning.

- Mixed-precision training for speed and memory optimization.
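
LoRA freezes a pretrained weight matrix and learns only a low-rank correction. The NumPy sketch below shows the idea for a single linear layer. In practice a library such as `peft` wraps selected U-Net layers; the class name, rank, scaling and initialization here are illustrative assumptions rather than the notebook's settings.

```python
import numpy as np


class LoRALinear:
    """Frozen linear weight plus a trainable low-rank update scaled by alpha / rank."""

    def __init__(self, weight, rank=4, alpha=4.0, seed=0):
        out_features, in_features = weight.shape
        rng = np.random.default_rng(seed)
        self.weight = weight                                 # frozen base weight, never updated
        self.A = rng.normal(0.0, 0.01, (rank, in_features))  # trainable down-projection
        self.B = np.zeros((out_features, rank))              # trainable up-projection, zero-init
        self.scale = alpha / rank

    def __call__(self, x):
        # x: (batch, in_features) -> (batch, out_features)
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def num_trainable(self):
        return self.A.size + self.B.size


W = np.random.default_rng(1).standard_normal((64, 128))
layer = LoRALinear(W, rank=4)
x = np.ones((2, 128))
print(np.allclose(layer(x), x @ W.T))  # True: B = 0, so training starts from the frozen model
print(layer.num_trainable(), W.size)   # 768 8192
```

Only `A` and `B` receive gradients, so here the trainable parameter count drops from 8192 to 768 for this layer; combined with gradient checkpointing and mixed precision, this is what keeps distillation within a single-GPU memory budget.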


Image Generation:

- Generate high-quality images in as few as 4 steps.

- Support for CFG during sampling.
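
Few-step generation with a consistency model alternates between predicting a clean sample and re-noising it to the next timestep. The sketch below assumes a hypothetical student `f(x_t, t)` that returns an x0 estimate; applying guidance to x0 estimates in `with_cfg` is one possible choice, and the timesteps and schedule in the demo are placeholders.

```python
import numpy as np


def with_cfg(f_cond, f_uncond, w):
    # Guidance applied to the student's x0 estimates (one possible choice; a sketch)
    return lambda x, t: f_uncond(x, t) + w * (f_cond(x, t) - f_uncond(x, t))


def multistep_consistency_sample(f, shape, timesteps, alphas_cumprod, seed=0):
    """Multistep consistency sampling (sketch): predict x0, re-noise to the next t, repeat.

    f(x_t, t) is a hypothetical student consistency function returning an x0 estimate.
    """
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(shape)  # pure noise at timesteps[0]
    x0 = f(x, timesteps[0])
    for t in timesteps[1:]:
        a = alphas_cumprod[t]
        # Forward-noise the current estimate back to timestep t, then denoise again
        x = np.sqrt(a) * x0 + np.sqrt(1.0 - a) * rng.standard_normal(shape)
        x0 = f(x, t)
    return x0


alphas = np.cumprod(1.0 - np.linspace(1e-4, 0.02, 1000))  # assumed toy schedule for the demo
steps = [999, 749, 499, 249]                              # 4 steps, assumed evenly spaced
calls = []


def fake_student(x, t):
    calls.append(t)
    return np.zeros_like(x)


out = multistep_consistency_sample(fake_student, (1, 4, 8, 8), steps, alphas)
print(len(calls), out.shape)  # 4 (1, 4, 8, 8)
```

Re-noising between steps makes this sampler stochastic, in contrast with the deterministic multi-boundary chain.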

Notebook Structure (Colab Version)


Introduction:

- Overview of diffusion models and consistency models.

- Theoretical background and mathematical formulations.
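
For reference, the core formulas in commonly used notation (noise schedule $\bar\alpha_t$, teacher noise predictor $\epsilon_\phi$, student $f_\theta$, target network $f_{\theta^-}$, distance $d$); the notebook's own notation may differ:

$$
\begin{aligned}
x_t &= \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon, \qquad \epsilon \sim \mathcal{N}(0, I) \\
x_s &= \sqrt{\bar\alpha_s}\,\hat{x}_0 + \sqrt{1-\bar\alpha_s}\,\epsilon_\phi(x_t, t), \qquad \hat{x}_0 = \frac{x_t - \sqrt{1-\bar\alpha_t}\,\epsilon_\phi(x_t, t)}{\sqrt{\bar\alpha_t}}, \quad s < t \\
f_\theta(x_t, t) &= f_\theta(x_{t'}, t') \quad \text{for } x_t, x_{t'} \text{ on one trajectory}, \qquad f_\theta(x_{t_{\min}}, t_{\min}) = x_{t_{\min}} \\
\mathcal{L}_{\mathrm{CD}}(\theta) &= \mathbb{E}\Big[\, d\big(f_\theta(x_{t_{n+1}}, t_{n+1}),\ f_{\theta^-}(\hat{x}_{t_n}, t_n)\big) \Big]
\end{aligned}
$$

Here $\hat{x}_{t_n}$ is obtained from $x_{t_{n+1}}$ with one (optionally guided) DDIM step of the teacher.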


Teacher Model (Stable Diffusion 1.5):

- Load and configure the teacher model for image generation.


Dataset Preparation:

- Use a subset of the COCO dataset for training.

- Preprocess images and create data loaders.
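
The preprocessing step might look like the following NumPy sketch. It assumes 512×512 training crops (Stable Diffusion 1.5's native resolution) and the [-1, 1] pixel range the SD VAE encoder expects; resizing (e.g. with PIL) and reading COCO captions are left out, and the helper names are hypothetical.

```python
import numpy as np


def center_crop(img, size):
    # img: (H, W, C) uint8 array with H, W >= size; keep the central size x size window
    h, w = img.shape[:2]
    top, left = (h - size) // 2, (w - size) // 2
    return img[top:top + size, left:left + size]


def to_model_range(img):
    # uint8 [0, 255] -> float32 [-1, 1], channels-first (C, H, W)
    x = img.astype(np.float32) / 127.5 - 1.0
    return np.transpose(x, (2, 0, 1))


def batches(images, batch_size):
    # Minimal stand-in for a DataLoader: yields stacked (B, C, H, W) arrays
    for i in range(0, len(images), batch_size):
        yield np.stack(images[i:i + batch_size])


raw = np.random.default_rng(0).integers(0, 256, size=(600, 800, 3), dtype=np.uint8)
sample = to_model_range(center_crop(raw, 512))
print(sample.shape, sample.min() >= -1.0, sample.max() <= 1.0)   # (3, 512, 512) True True
print([b.shape[0] for b in batches([sample] * 5, batch_size=2)])  # [2, 2, 1]
```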


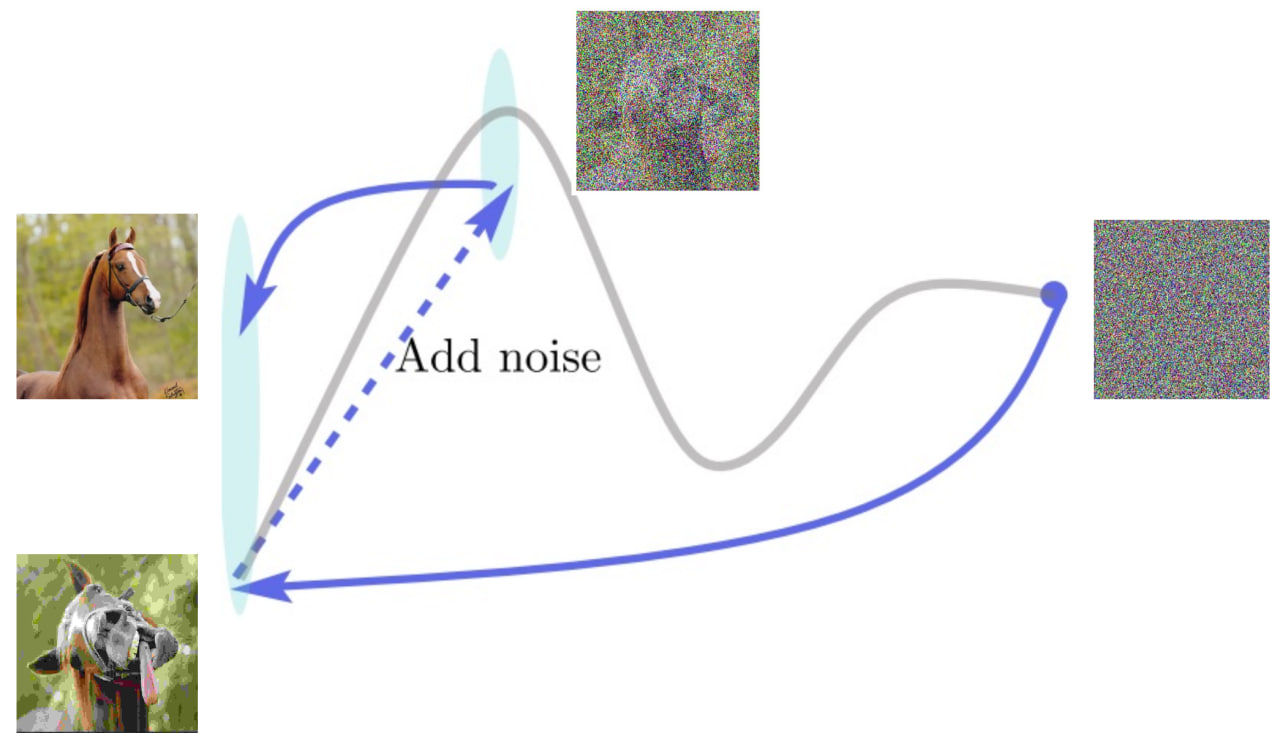

Consistency Training (CT):

- Train a standalone consistency model without a teacher.
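
Without a teacher, the second point of each training pair is built from the same clean sample and the same noise draw. The hypothetical `ct_loss` below is the noise-schedule analogue of the `x + t·z` construction in the Consistency Models paper; the timestep pair, the EMA target and the L2 distance are assumptions for illustration.

```python
import numpy as np


def ct_loss(x0, t_next, t_prev, alphas_cumprod, student, target_student, rng):
    """Consistency-training loss term (sketch): no teacher; both points share one noise draw."""
    z = rng.standard_normal(x0.shape)
    a_next, a_prev = alphas_cumprod[t_next], alphas_cumprod[t_prev]
    x_next = np.sqrt(a_next) * x0 + np.sqrt(1.0 - a_next) * z
    # Reusing z replaces the teacher's solver step: the data point itself fixes the trajectory
    x_prev = np.sqrt(a_prev) * x0 + np.sqrt(1.0 - a_prev) * z
    pred = student(x_next, t_next)
    target = target_student(x_prev, t_prev)  # treated as a constant (EMA / stop-gradient copy)
    return np.mean((pred - target) ** 2)


rng = np.random.default_rng(0)
alphas = np.cumprod(1.0 - np.linspace(1e-4, 0.02, 1000))  # assumed toy schedule
x0 = rng.standard_normal((2, 4, 4))
loss = ct_loss(x0, 500, 480, alphas, lambda x, t: x, lambda x, t: x, rng)
```

With identity networks the loss is positive, because the two noised points differ; a perfectly consistent student would drive it to zero.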


Consistency Distillation (CD):

- Distill the teacher model into a student model with CFG.


Multi-Boundary Consistency Distillation:

- Train models with multiple boundary points for better performance.


Sampling:

- Generate images using the trained models.

- Compare results across different training methods.


Model Upload:

- Save and upload the trained models to Hugging Face for sharing.


Clone the repository and change into its directory:

`git clone https://github.com/your-username/Yandex-ConsistencyModels.git`

`cd Yandex-ConsistencyModels`

Open the notebook `ConsistencyModels.ipynb` in Jupyter or Colab.

Follow the step-by-step instructions to train and evaluate consistency models.


Use the provided sampling functions to generate images from text prompts.

- 4-Step Image Generation: The distilled models can generate high-quality images in just 4 steps, compared to 50 steps for the original Stable Diffusion model.

- Improved Efficiency: Multi-boundary consistency distillation achieves better results with deterministic sampling.

This project is based on research and resources provided by Yandex Research within the CV Week intensive course on diffusion models. The lectures and tutorials are available here (in Russian).