Akash Kumar, Zsolt Kira, Yogesh S Rawat

Official code for our paper "Contextual Self-paced Learning for Weakly Supervised Spatio-Temporal Video Grounding"

- (Jan 21, 2025) This paper has been accepted for publication at ICLR 2025.
- (Feb 20, 2025) The project website is now live at cospal-webpage.
- (Apr 20, 2025) Code for our weakly supervised spatio-temporal video grounding method has been released.

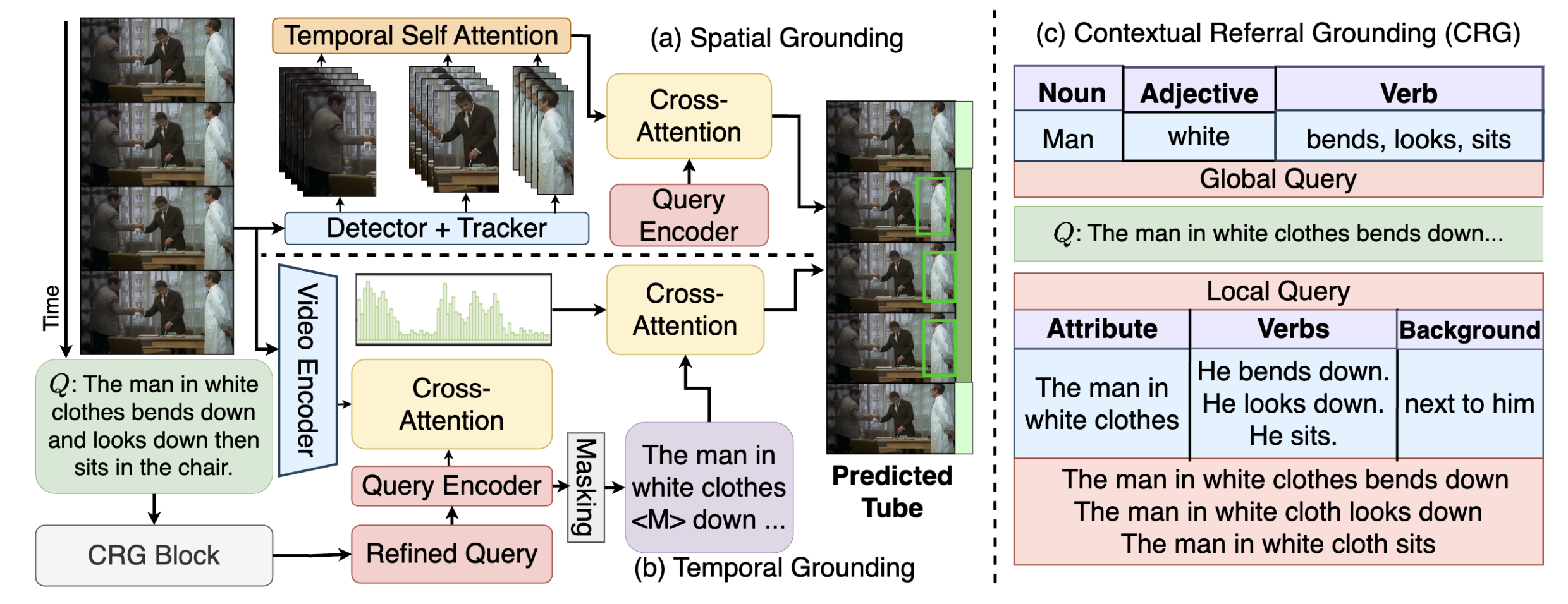

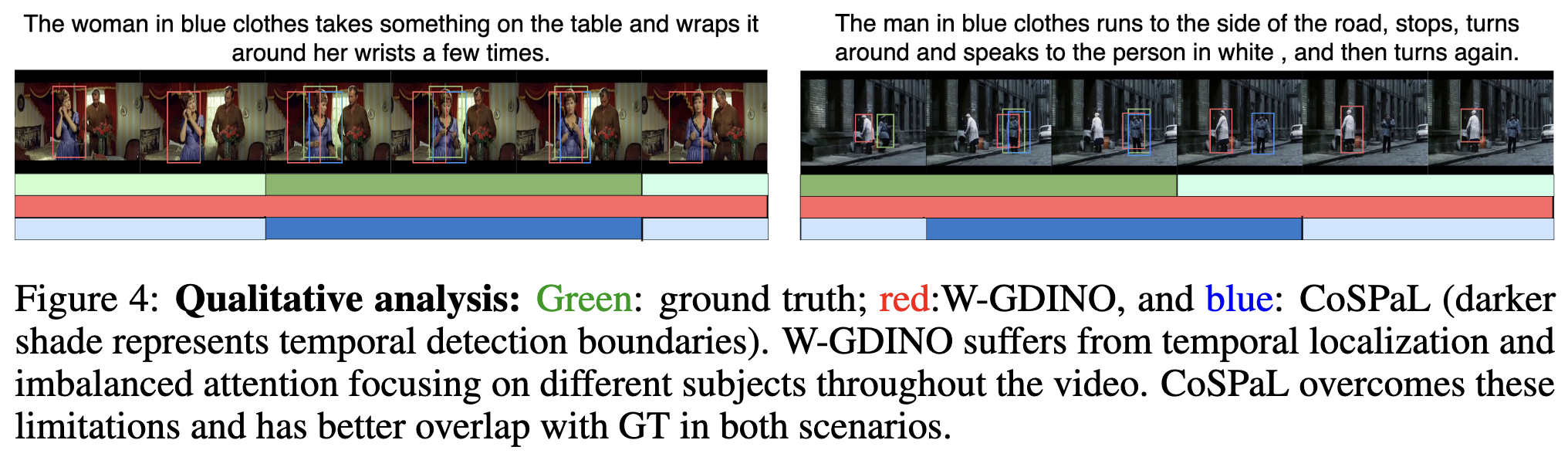

Abstract: In this work, we focus on Weakly Supervised Spatio-Temporal Video Grounding (WSTVG). It is a multimodal task aimed at localizing specific subjects spatio-temporally based on textual queries without bounding box supervision. Motivated by recent advancements in multimodal foundation models for grounding tasks, we first explore the potential of state-of-the-art object detection models for WSTVG. Despite their robust zero-shot capabilities, our adaptation reveals significant limitations, including inconsistent temporal predictions, inadequate understanding of complex queries, and challenges in adapting to difficult scenarios. We propose CoSPaL (Contextual Self-Paced Learning), a novel approach designed to overcome these limitations. CoSPaL integrates three core components: (1) Tubelet Phrase Grounding (TPG), which introduces spatio-temporal prediction by linking textual queries to tubelets; (2) Contextual Referral Grounding (CRG), which improves comprehension of complex queries by extracting contextual information to refine object identification over time; and (3) Self-Paced Scene Understanding (SPS), a training paradigm that progressively increases task difficulty, enabling the model to adapt to complex scenarios by transitioning from coarse to fine-grained understanding. Code and models are publicly available.
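To make the idea of linking a textual query to tubelets more concrete, here is a minimal, generic sketch. It is not the TPG module from this repository. It assumes that each candidate tubelet is already represented by per-frame feature vectors and that the query is a single embedding in the same feature space (both hypothetical inputs). Each tubelet is mean-pooled over time, scored by cosine similarity with the query, and the scores are normalized with a temperature-scaled softmax. The names `ground_query`, `mean_pool`, `cosine`, and the `temperature` value are illustrative only.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def mean_pool(frames: Sequence[Vector]) -> List[float]:
    """Average the per-frame feature vectors of one tubelet over time."""
    n = len(frames)
    return [sum(dim) / n for dim in zip(*frames)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def softmax(scores: Sequence[float], temperature: float = 0.1) -> List[float]:
    """Temperature-scaled softmax, shifted by the max score for numerical stability."""
    top = max(scores)
    exps = [math.exp((s - top) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def ground_query(tubelets: Sequence[Sequence[Vector]], query: Vector,
                 temperature: float = 0.1) -> Tuple[int, List[float]]:
    """Score every tubelet against the query embedding.

    Returns the index of the best-matching tubelet and the normalized scores.
    """
    scores = [cosine(mean_pool(t), query) for t in tubelets]
    probs = softmax(scores, temperature)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs
```

For example, `ground_query([[[1, 0, 0], [1, 0, 0]], [[0, 1, 0], [0, 0.9, 0.1]]], [0, 1, 0])` selects tubelet index 1 because its pooled feature points almost exactly in the query direction.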

- We establish state-of-the-art (SOTA) results for weakly supervised spatio-temporal video grounding on HCSTVG-v1, HCSTVG-v2, and VidSTG.
- We propose a context-aware, self-paced progressive learning approach to tackle this task.
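The progressive (easy-to-hard) idea can be illustrated with a generic curriculum-learning pacing function. This is a sketch under stated assumptions, not the SPS schedule used in the paper. It assumes a hypothetical per-sample `difficulty` score is available (lower means easier) and linearly grows the fraction of the easiest samples used for training from `start` to 1.0 over training. The names `pacing_fraction` and `select_curriculum` are illustrative.

```python
import math
from typing import List, Sequence


def pacing_fraction(epoch: int, total_epochs: int, start: float = 0.3) -> float:
    """Linear pacing: fraction of the (easiest) training data used at `epoch`."""
    if total_epochs <= 1:
        return 1.0
    # Progress goes from 0.0 at the first epoch to 1.0 at the last one.
    progress = min(max(epoch / (total_epochs - 1), 0.0), 1.0)
    return start + (1.0 - start) * progress


def select_curriculum(difficulty: Sequence[float], epoch: int,
                      total_epochs: int, start: float = 0.3) -> List[int]:
    """Indices of the samples to train on at `epoch`, easiest first.

    `difficulty` is a hypothetical per-sample score (lower = easier).
    """
    order = sorted(range(len(difficulty)), key=lambda i: difficulty[i])
    k = max(1, math.ceil(pacing_fraction(epoch, total_epochs, start) * len(order)))
    return order[:k]
```

With `difficulty=[0.9, 0.1, 0.5, 0.3]`, `total_epochs=5`, and `start=0.5`, the first epoch trains on samples `[1, 3]` and the last epoch on all four, ordered `[1, 3, 2, 0]`. A linear schedule is only one option; step-wise or square-root pacing functions are common alternatives.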

We used Python 3.8.16 and PyTorch 1.10.0 for all the code in this repository. To replicate the results reported in the paper and in this repository, we recommend following the steps below and setting up your conda environment in the same way.
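You can check these version pins with a short script. This is an optional sketch and not a file shipped with this repository. It reads the running Python version and the installed `torch` distribution version through the standard-library `importlib.metadata` module (available from Python 3.8), then reports any mismatch. It strips a local build tag such as `+cu113` from the torch version before comparing.

```python
import sys
from importlib import metadata
from typing import Dict, List, Optional, Tuple

# Versions reported in this README for reproducing the paper's results.
EXPECTED = {"python": "3.8.16", "torch": "1.10.0"}


def installed_versions() -> Dict[str, Optional[str]]:
    """Running Python version and installed torch version (None if torch is absent)."""
    try:
        # Drop a local build tag such as "+cu113" before comparing.
        torch_version: Optional[str] = metadata.version("torch").split("+")[0]
    except metadata.PackageNotFoundError:
        torch_version = None
    return {"python": "%d.%d.%d" % sys.version_info[:3], "torch": torch_version}


def mismatches(expected: Dict[str, str],
               found: Dict[str, Optional[str]]) -> List[Tuple[str, str, Optional[str]]]:
    """(name, expected, found) for every entry whose version differs."""
    return [(name, want, found.get(name))
            for name, want in expected.items() if found.get(name) != want]


if __name__ == "__main__":
    problems = mismatches(EXPECTED, installed_versions())
    for name, want, got in problems:
        print(f"{name}: expected {want}, found {got}")
    if not problems:
        print("Environment matches the reported versions.")
```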

- Clone this repository to your local machine:

  ```bash
  git clone https://github.com/AKASH2907/copsal-weakly-stvg.git
  ```

- Change the current directory to the main project folder:

  ```bash
  cd copsal-weakly-stvg
  ```

- Install the project dependencies and libraries by creating the conda environment defined in the `.yml` file:

  ```bash
  conda env create -f environment.yml
  ```

- Activate the newly created conda environment:

  ```bash
  conda activate wstvg
  ```

To download and set up the datasets used in this work, follow these steps:

- Download the HCSTVG-v1 dataset and annotations from their official website.

- Download the HCSTVG-v2 dataset and annotations from their official website.

- Download the VidSTG dataset and annotations from their official website.

- Use the preprocessing files from this location to arrange the annotations.

Should you have any questions, please create an issue in this repository or contact us at akash.kumar@ucf.edu.

If you found our work helpful, please consider starring the repository ⭐⭐⭐ and citing our work as follows:

```bibtex
@article{kumar2025contextual,
  title={Contextual Self-paced Learning for Weakly Supervised Spatio-Temporal Video Grounding},
  author={Kumar, Akash and Kira, Zsolt and Rawat, Yogesh Singh},
  journal={arXiv preprint arXiv:2501.17053},
  year={2025}
}
```