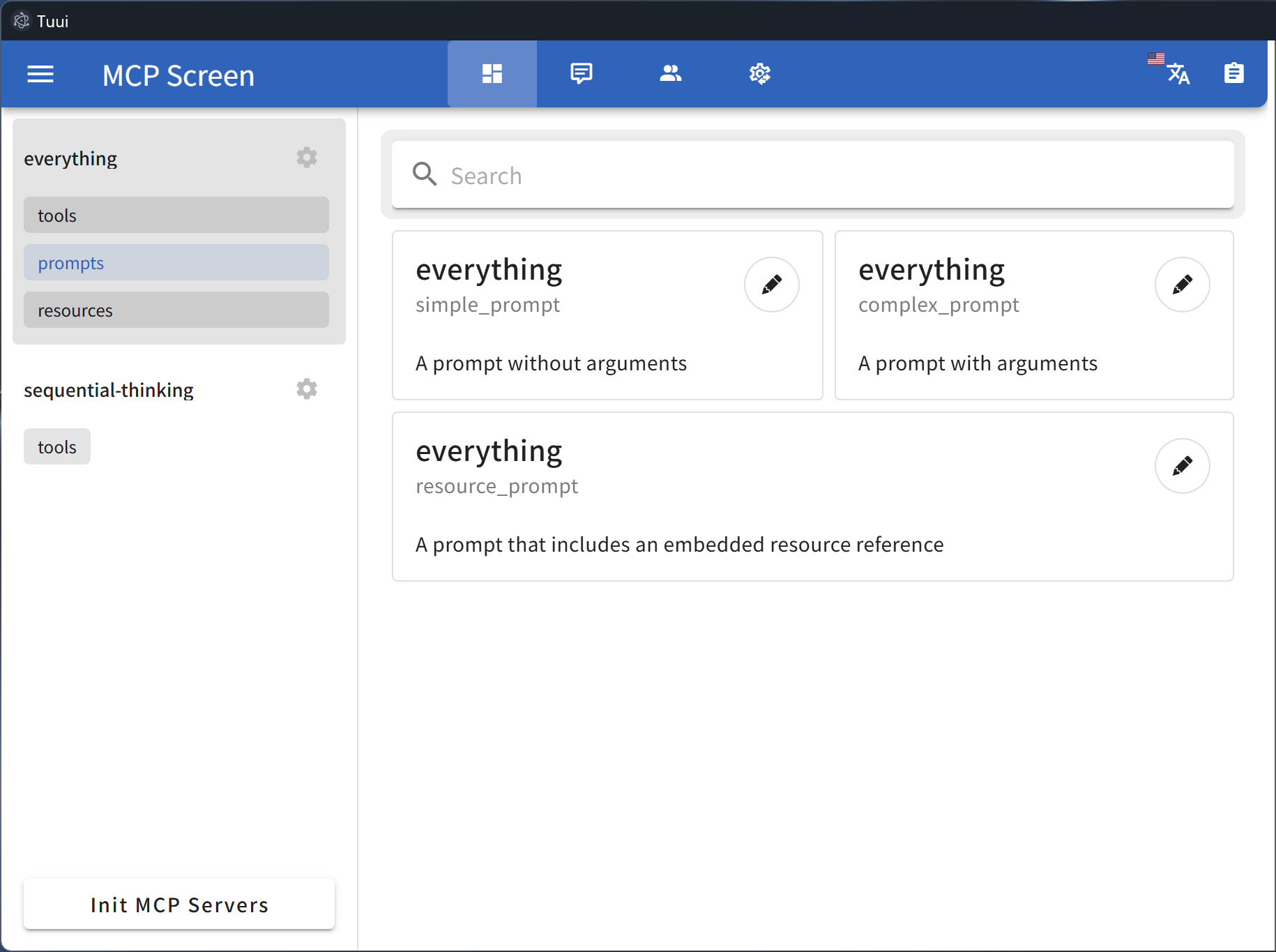

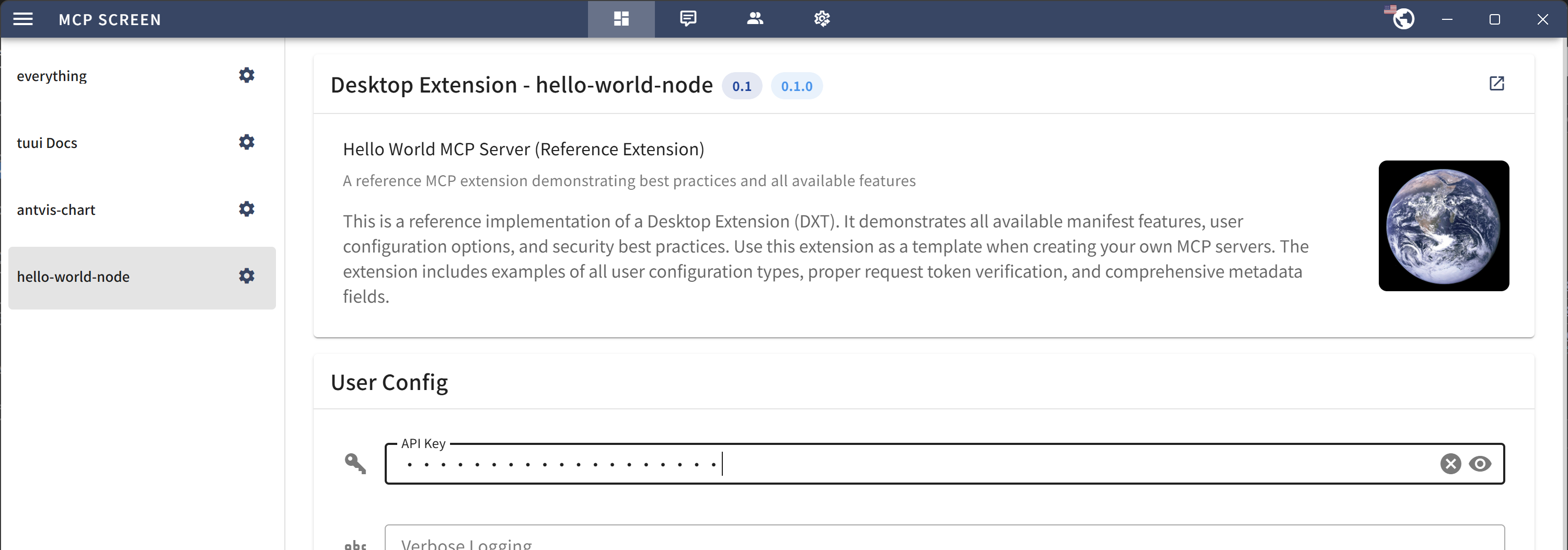

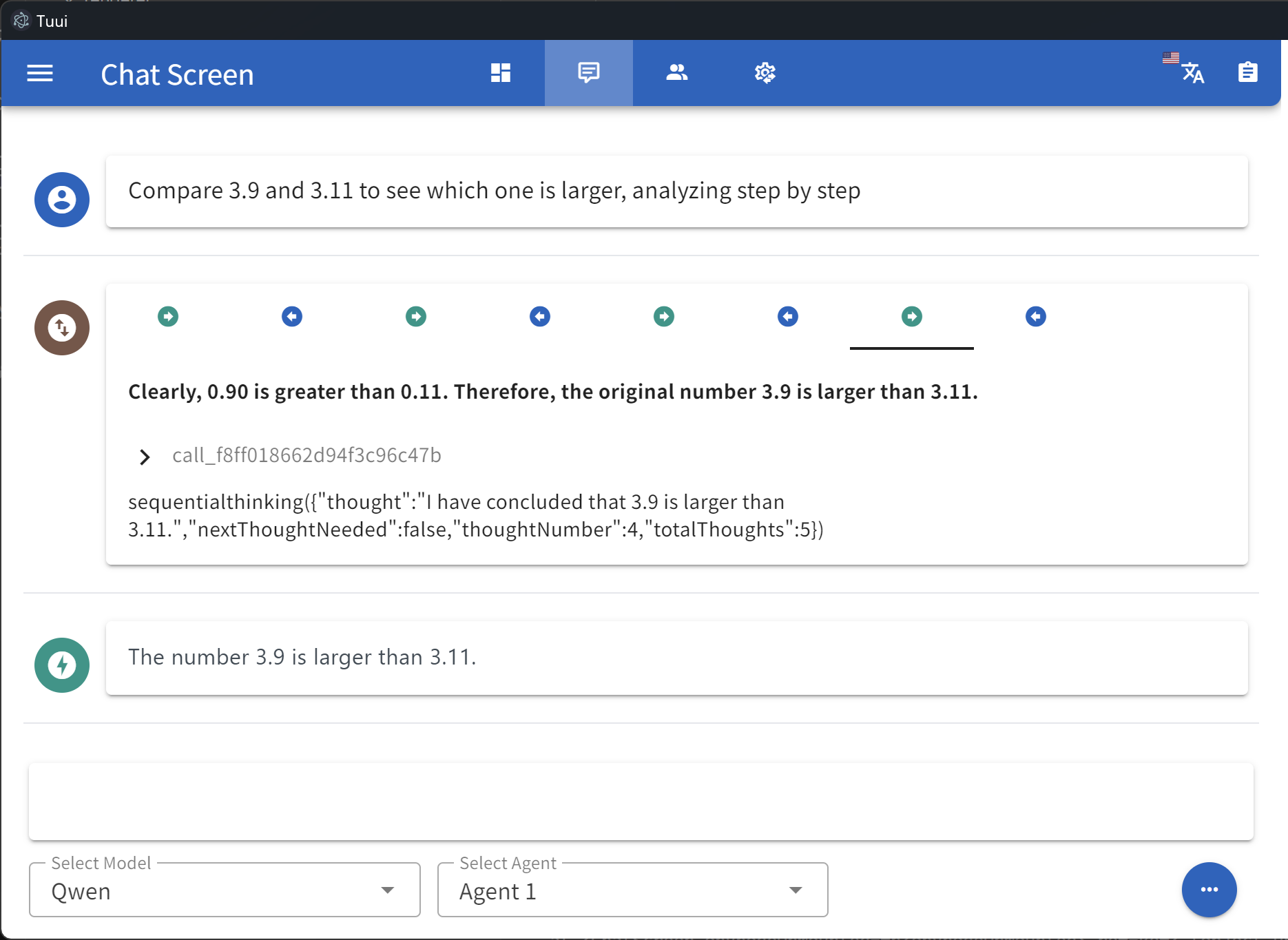

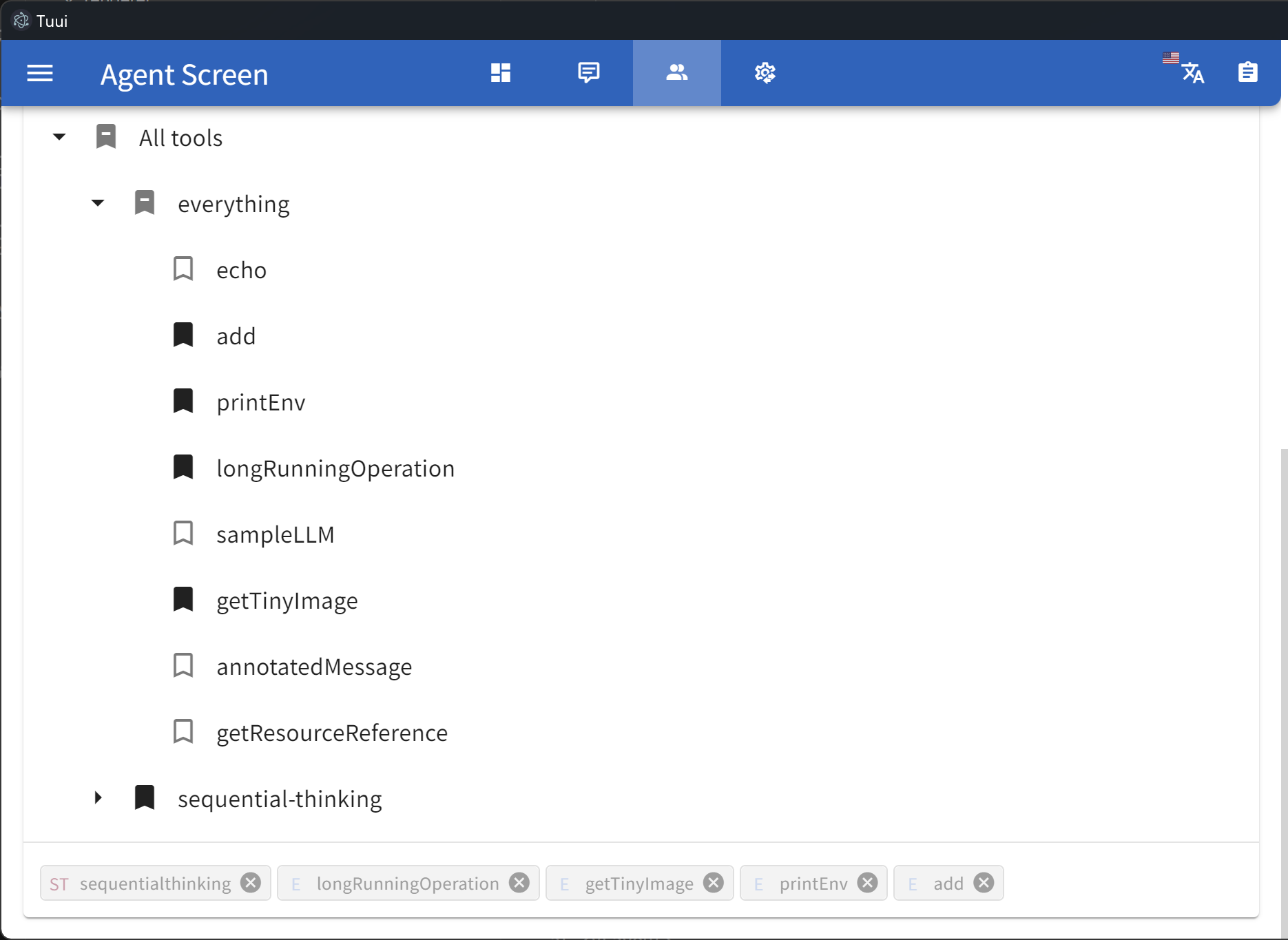

TUUI is a desktop MCP client designed as a tool unitary utility integration, accelerating AI adoption through the Model Context Protocol (MCP) and enabling cross-vendor LLM API orchestration.

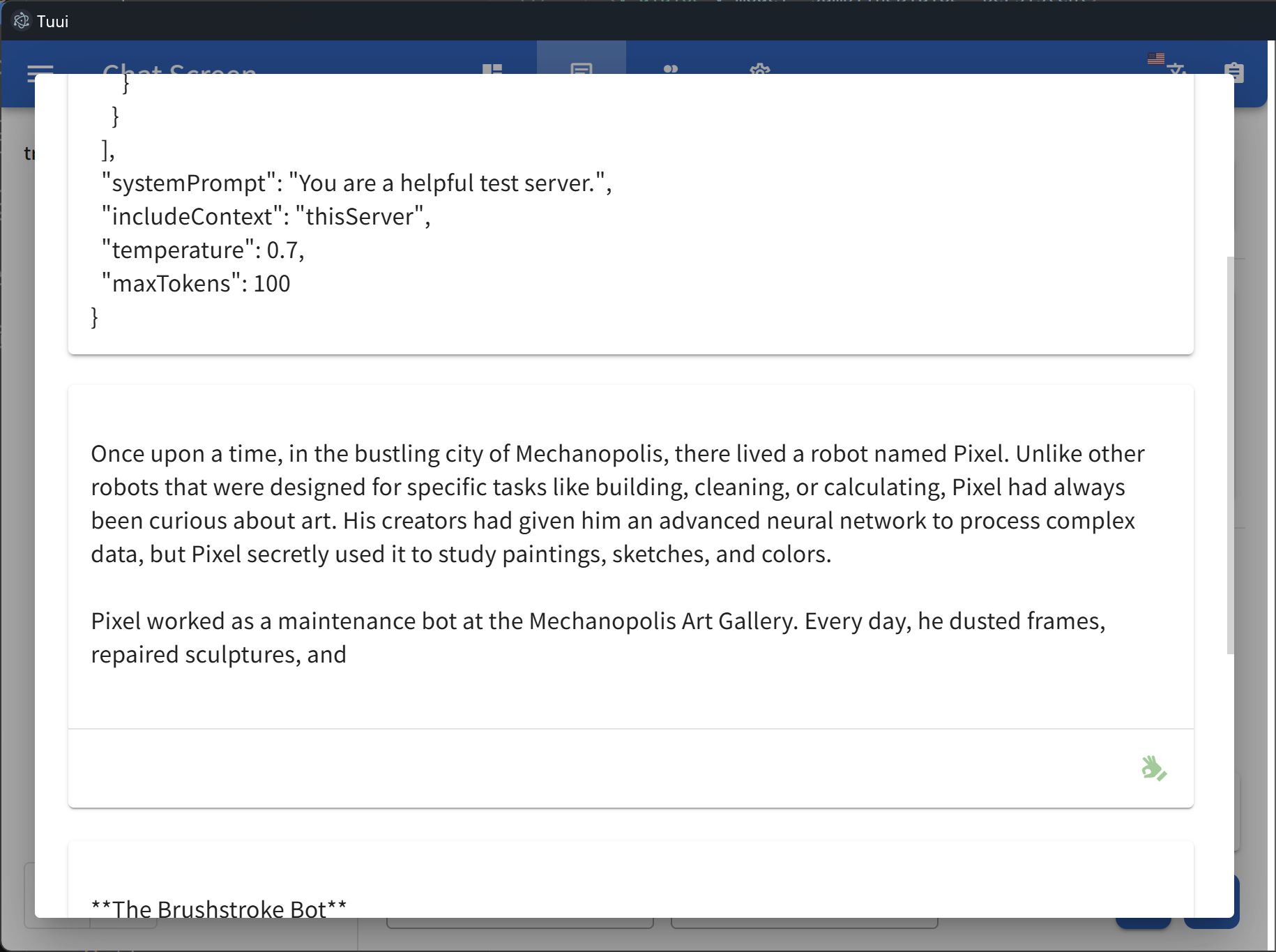

This repository is essentially an LLM chat desktop application based on MCP. It also represents a bold experiment in creating a complete project using AI. Many components within the project have been directly converted or generated from the prototype project through AI.

Given the considerations regarding the quality and safety of AI-generated content, this project employs strict syntax checks and naming conventions. Therefore, for any further development, please ensure that you use the linting tools I've set up to check and automatically fix syntax issues.

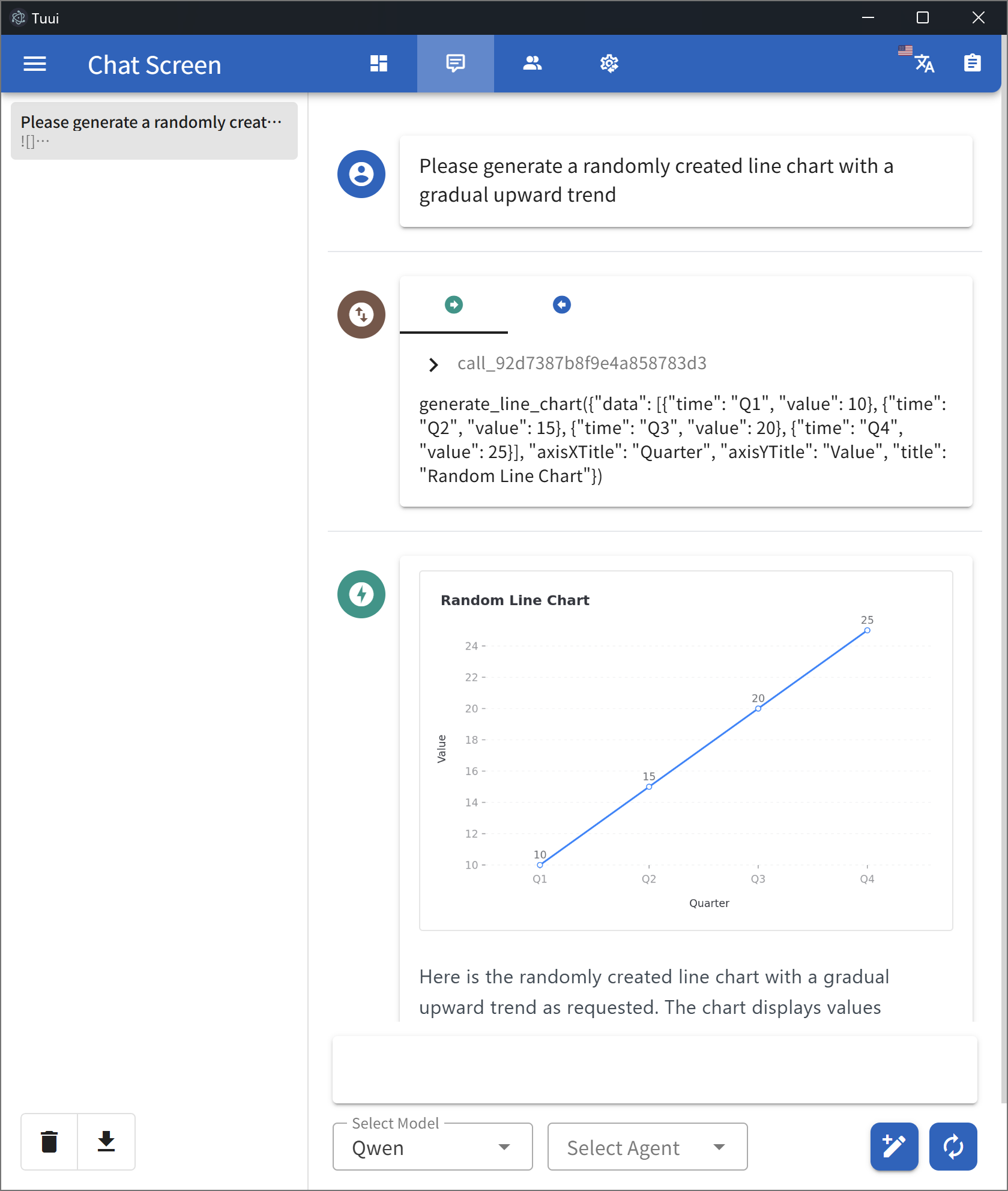

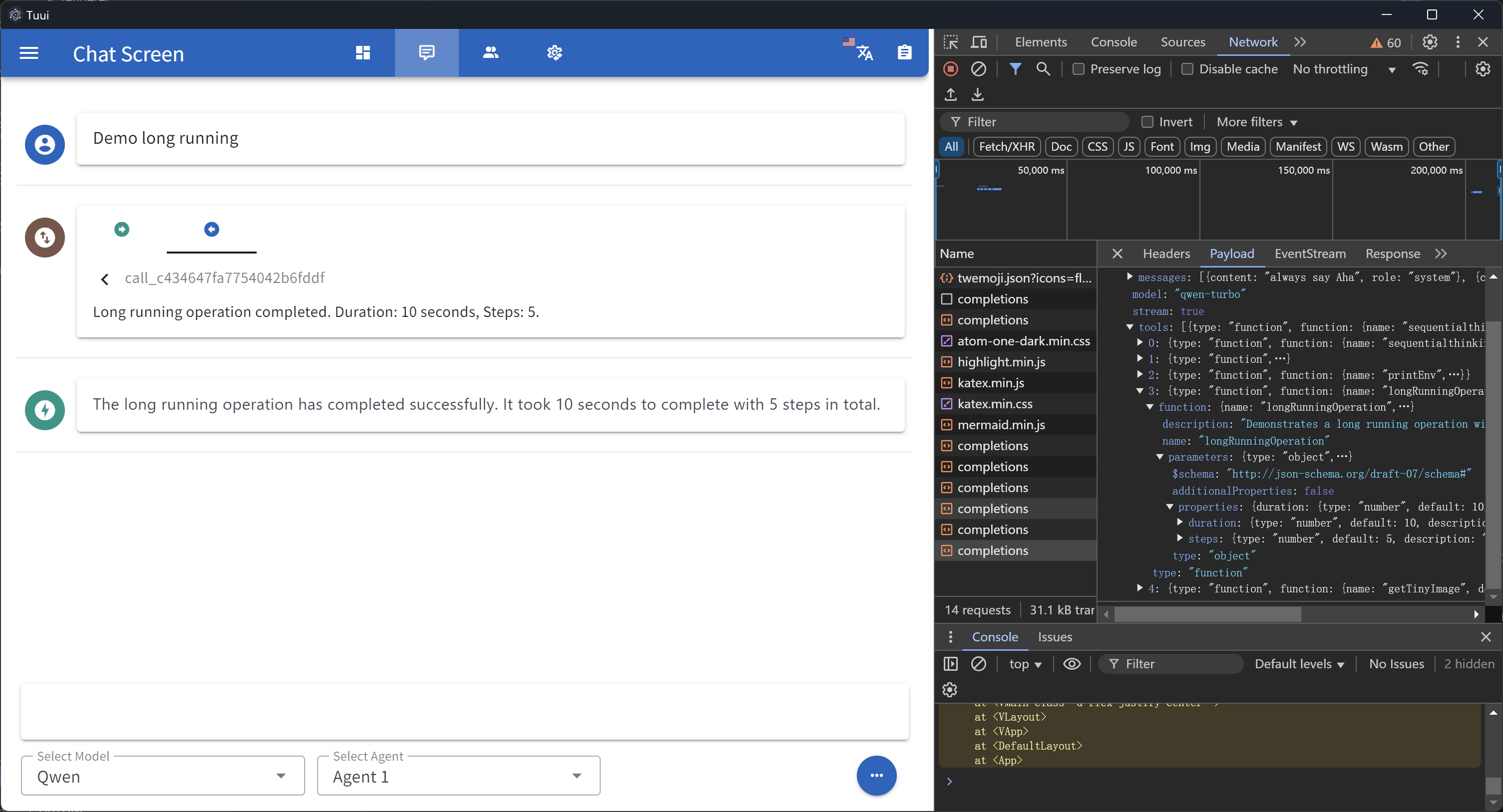

- ✨ Accelerate AI tool integration via MCP

- ✨ Orchestrate cross-vendor LLM APIs through dynamic configuration

- ✨ Automated application testing support

- ✨ TypeScript support

- ✨ Multilingual support

- ✨ Basic layout manager

- ✨ Global state management through the Pinia store

- ✨ Quick support through the GitHub community and official documentation

To explore the project, please check the wiki page: TUUI.com

You can also check the installation guide in the project documentation: Getting Started | 快速入门

You can also ask the AI directly about project-related questions: TUUI@DeepWiki

To use MCP-related features, ensure the following preconditions are met for your environment:

- Set up an LLM backend (e.g., ChatGPT, Claude, Qwen, or self-hosted) that supports tool/function calling.

- For NPX/NODE-based servers: Install Node.js to execute JavaScript/TypeScript tools.

- For UV/UVX-based servers: Install Python and the UV library.

- For Docker-based servers: Install DockerHub.

- For macOS/Linux systems: Modify the default MCP configuration (e.g., adjust CLI paths or permissions). Refer to the MCP Server Issue documentation for guidance.

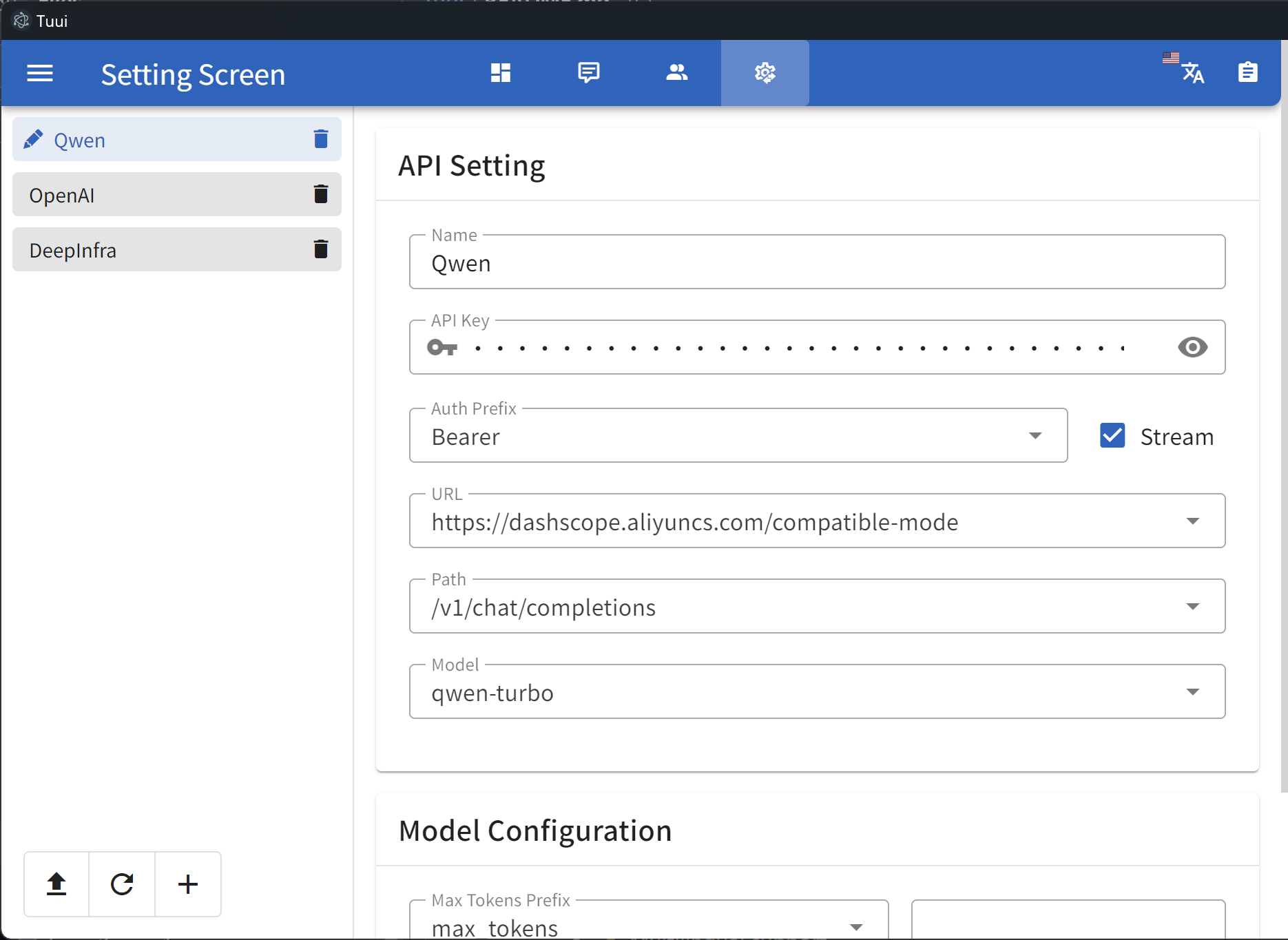

For guidance on configuring the LLM, refer to the template (e.g., Qwen):

```json
{
  "name": "Qwen",
  "apiKey": "",
  "url": "https://dashscope.aliyuncs.com/compatible-mode",
  "path": "/v1/chat/completions",
  "model": "qwen-turbo",
  "modelList": ["qwen-turbo", "qwen-plus", "qwen-max"],
  "maxTokensValue": "",
  "mcp": true
}
```

The configuration accepts either a JSON object (for a single chatbot) or a JSON array (for multiple chatbots):

```json
[
  {
    "name": "Openrouter && Proxy",
    "apiKey": "",
    "url": "https://api3.aiql.com",
    "urlList": ["https://api3.aiql.com", "https://openrouter.ai/api"],
    "path": "/v1/chat/completions",
    "model": "openai/gpt-4.1-mini",
    "modelList": [
      "openai/gpt-4.1-mini",
      "openai/gpt-4.1",
      "anthropic/claude-sonnet-4",
      "google/gemini-2.5-pro-preview"
    ],
    "maxTokensValue": "",
    "mcp": true
  },
  {
    "name": "DeepInfra",
    "apiKey": "",
    "url": "https://api.deepinfra.com",
    "path": "/v1/openai/chat/completions",
    "model": "Qwen/Qwen3-32B",
    "modelList": [
      "Qwen/Qwen3-32B",
      "Qwen/Qwen3-235B-A22B",
      "meta-llama/Meta-Llama-3.1-70B-Instruct"
    ],
    "mcp": true
  }
]
```

The full config and corresponding types can be found in: Config Type and Default Config.
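The object-or-array rule can be handled by normalizing the input to an array before use. The following is a minimal sketch, not the project's actual code: the `ChatbotConfig` interface is a hypothetical shape inferred from the fields in the templates above, and `normalizeChatbotConfig` and `endpointOf` are illustrative names. The assumption that the request endpoint is `url` followed by `path` is based only on how the template values fit together.

```typescript
// Hypothetical shape inferred from the template fields; the project's
// real type (see Config Type) may contain more or different fields.
interface ChatbotConfig {
  name: string
  apiKey: string
  url: string
  urlList?: string[]
  path: string
  model: string
  modelList: string[]
  maxTokensValue?: string
  mcp: boolean
}

// Accept a single object or an array and always return an array.
function normalizeChatbotConfig(
  input: ChatbotConfig | ChatbotConfig[]
): ChatbotConfig[] {
  return Array.isArray(input) ? input : [input]
}

// Assumption: the endpoint is `url` + `path`, with trailing slashes on
// `url` trimmed so the join never produces a double slash.
function endpointOf(config: ChatbotConfig): string {
  return config.url.replace(/\/+$/, '') + config.path
}
```

With the Qwen template as input, `normalizeChatbotConfig` returns a one-element array, and `endpointOf` joins the `url` and `path` values into a single request URL.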

For the decomposable package, you can also modify the default configuration of the built release in `resources/assets/llm.json`.

Once you modify or import the LLM configuration, it will be stored in your localStorage by default. You can use the developer tools to view or clear the corresponding cache.

You can utilize Cloudflare's recommended mcp-remote to implement the full suite of remote MCP server functionalities (including Auth). For example, simply add the following to your config.json file:

```json
{
  "mcpServers": {
    "cloudflare": {
      "command": "npx",
      "args": ["-y", "mcp-remote", "https://YOURDOMAIN.com/sse"]
    }
  }
}
```

In this example, I have provided a test remote server: https://YOURDOMAIN.com on Cloudflare. This server will always approve your authentication requests.
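If you generate this entry programmatically, a small helper can keep the `mcpServers` structure consistent. This is a sketch under stated assumptions: `McpConfig`, `StdioServerEntry`, and `addRemoteServer` are hypothetical names introduced for illustration, and only the `command` and `args` values are taken from the example above.

```typescript
// Hypothetical types mirroring the JSON structure in the example above.
interface StdioServerEntry {
  command: string
  args: string[]
}

interface McpConfig {
  mcpServers: Record<string, StdioServerEntry>
}

// Return a new config with an mcp-remote entry added under `name`.
// The input config is not mutated.
function addRemoteServer(
  config: McpConfig,
  name: string,
  sseUrl: string
): McpConfig {
  // Basic sanity check only; this does not validate the full URL.
  if (!/^https?:\/\//.test(sseUrl)) {
    throw new Error(`Expected an http(s) URL, got: ${sseUrl}`)
  }
  return {
    mcpServers: {
      ...config.mcpServers,
      [name]: { command: 'npx', args: ['-y', 'mcp-remote', sseUrl] }
    }
  }
}
```

Calling `addRemoteServer({ mcpServers: {} }, 'cloudflare', 'https://YOURDOMAIN.com/sse')` produces the same structure as the JSON example.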

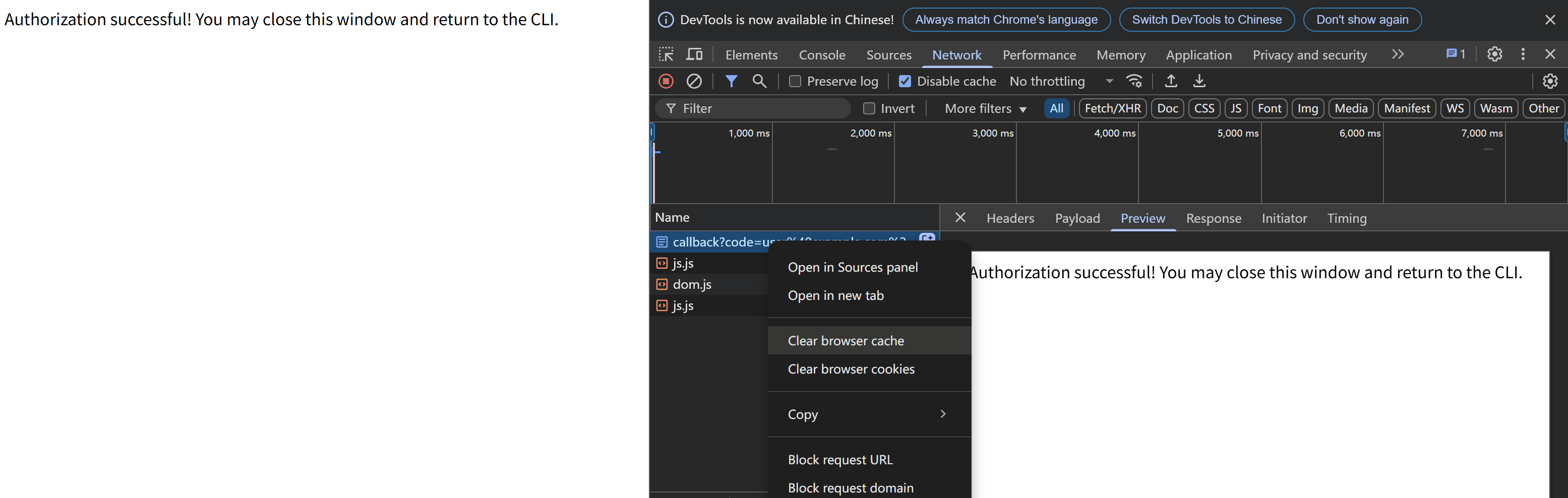

If you encounter issues such as the common HTTP 400 error, you can resolve them by clearing your browser cache on the authentication page and then attempting verification again. Please also keep OAuth auto-redirect enabled to prevent callback delays that might cause failures.

- ISSUE 64 - MCP Servers Don't Work with NVM (still open)

When launching the MCP server, if you encounter spawn errors such as ENOENT, try installing the corresponding MCP server locally and invoking it using an absolute path.
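One way to find the absolute path is to search the directories of a `PATH` value yourself. The sketch below is illustrative only: `findOnPath` is a hypothetical helper, not part of TUUI or the MCP SDK, and it takes the existence check as a parameter so it stays independent of any particular runtime. It assumes POSIX-style `:` and `/` separators by default.

```typescript
// Search each directory in `pathEnv` for `command` and return the first
// candidate for which `exists` returns true, or undefined if none match.
function findOnPath(
  command: string,
  pathEnv: string,
  exists: (candidate: string) => boolean,
  delimiter: string = ':',
  separator: string = '/'
): string | undefined {
  for (const dir of pathEnv.split(delimiter)) {
    if (dir === '') continue // skip empty PATH segments
    // Trim trailing slashes so the join never doubles the separator.
    const candidate = dir.replace(/[\\/]+$/, '') + separator + command
    if (exists(candidate)) return candidate
  }
  return undefined
}
```

In a Node.js context you could pass `process.env.PATH ?? ''` and `fs.existsSync` as the second and third arguments, then put the returned path in the `command` field of the server entry. Note that this only helps if the `PATH` you search actually contains the tool's directory, which may not be the case for an application launched outside a shell.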

This is a common issue, and many cases remain unresolved to this day. The MCP SDK implements a workaround (ISSUE 101) for Windows systems, but the problem still frequently occurs on other platforms.

We welcome contributions of any kind to this project, including feature enhancements, UI improvements, documentation updates, test case completions, and syntax corrections. I believe that a real developer can write better code than AI, so if you have concerns about certain parts of the code implementation, feel free to share your suggestions or submit a pull request.

Please review our Code of Conduct. It is in effect at all times. We expect it to be honored by everyone who contributes to this project.

For more information, please see the Contributing Guidelines.

Before creating an issue, check if you are using the latest version of the project. If you are not up-to-date, see if updating fixes your issue first.

Review our Security Policy. Do not file a public issue for security vulnerabilities.

Written by @AIQL.com.

Many of the ideas and prose for the statements in this project were based on or inspired by work from the following communities:

You can review the specific technical details and the license. We commend them for their efforts to facilitate collaboration in their projects.