Replies: 13 comments

-

503s are definitely outages. Please report these to operational monitoring.

-

@StephenClouse thanks. Is this project being actively maintained?

-

Yes, the API is an actively developed operational system.

-

@StephenClouse I've experienced these outages as well and am happy to report them as they occur. How can I report to operational monitoring?

-

@charliesneath https://weather-gov.github.io/api/reporting-issues

-

Does anyone know why these issues are happening or when they might be fixed? I've been getting a lot of 503s for the past couple of weeks.

-

NCO is working on infrastructure improvements that should resolve the 503s, but the work will take through July to be fully complete.

-

Thanks @scadergit. I filed a ticket with NCO, and until this morning the thread was just other people submitting the same issue. This update came through this morning, however, in line with your update as well:

-

That message is a little misleading (albeit unintentionally; think telephone game). The upgrade is not the cause of the issue, but it did change how a key issue is communicated.

Those who have been around a while know that nginx "Your's truly" error pages were a familiar sight. These came from nginx timing out while waiting on the API to respond, which in turn came from the API waiting on the database to respond (not from the application itself). The upgrade implemented a timeout so the API returns a machine-friendly 503 instead of the unfriendly HTML error page. These are the now-common 503s (granted, 503s existed before for other reasons, such as the missing forecast data last week). That error covers anything that blocks the API from fulfilling the request.

The 503s have also increased because the timeout is 10 seconds, which is intentionally lower than the nginx timeout. Given the performance requirements of the API, and general user expectations, responses that keep connections open longer than 10 seconds ultimately cause the API to stumble over itself.

So, circling back around: the upgrade didn't cause the 503s; the API is just more transparent and more machine-friendly when it can't fulfill a request because of the timeout. If you still see an nginx error page, it's because the endpoint makes multiple requests to the database. Each of those took less than the new timeout, but collectively they took longer than the nginx timeout. We might change the default error message to a machine-friendly response as well.

The infrastructure upgrade is the actual resolution needed to improve database latency. That is what we're hoping happens by the end of July.
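For client code, the distinction described above suggests handling the two failure shapes differently. The sketch below is a hypothetical helper, not part of any official client. It assumes the API's own 503 arrives with a JSON content type (for example `application/problem+json`, with `title`/`detail` fields in the RFC 7807 style) and that a proxy timeout arrives as a `text/html` page; verify both assumptions against real responses before relying on it.

```python
import json


def describe_error(status: int, content_type: str, body: str) -> str:
    """Summarize a failed response, separating API errors from proxy error pages.

    Assumption: the API's own errors are JSON (e.g. application/problem+json),
    while a proxy (nginx) timeout page is served as text/html.
    """
    # Drop parameters such as "; charset=utf-8" and normalize case.
    media_type = content_type.split(";", 1)[0].strip().lower()

    if media_type.endswith("json"):
        try:
            problem = json.loads(body)
        except ValueError:  # json.JSONDecodeError subclasses ValueError
            return f"{status} from API (unparseable JSON body)"
        if isinstance(problem, dict):
            # RFC 7807-style problem documents carry "title" and "detail".
            detail = problem.get("detail") or problem.get("title") or "no detail"
            return f"{status} from API: {detail}"
        return f"{status} from API (unexpected JSON shape)"

    if media_type == "text/html":
        # Likely the proxy gave up before the API answered (e.g. several slow DB calls).
        return f"{status} HTML error page (proxy timeout?)"

    return f"{status} with unexpected content type {media_type!r}"
```

Call it with the status code, the `Content-Type` header, and the body text from whatever HTTP client you use; only the JSON branch indicates the API itself decided it could not fulfill the request.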

-

@scadergit

-

No infrastructure upgrades yet (that's another department with different priorities), but we have spent considerable effort finding performance improvements in what we manage (software/configuration). Changes earlier this week made a big difference, though not enough to be visible externally. More refinements went in today that should help.

-

OK, thanks for the quick reply! Please let me know when there are any changes to the API (software/hardware) that may help reduce the number of 503s.

-

I've been experiencing 503s as well, but I've noticed that they tend to clear up on their own after several seconds. This self-clearing behavior is so consistent that I've had a lot of success with a one-time automatic retry in my code after a 1-2 second delay (only once, because I don't want to hammer the servers if they're truly down).

Here's an example URL: https://api.weather.gov/gridpoints/LOT/59,73

I can't say for sure, but it seems to happen after the forecast for a specific gridpoint is updated (typically 15 minutes after the hour for this WFO) and you're the first one to load that forecast. To test this theory, I've been finding really small towns (where fewer people are likely to be loading that specific forecast/gridpoint) and loading them up. It doesn't happen 100% of the time, but it's pretty common for the first fetch to fail with a 503 and a second fetch a few seconds later to succeed.

Here are the response headers I received for two consecutive fetches to the endpoint above, about 3 seconds apart per the timestamps in the data.

Failed response

Successful response
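The one-time retry described above can be sketched as follows. This is a minimal illustration, not an official recommendation: the function names are hypothetical, `fetch` is injected so any HTTP client (or a fake, for testing) can be plugged in, and the 2-second default delay simply mirrors the 1-2 seconds mentioned in the comment. The `urllib_fetch` helper sets a `User-Agent` header because api.weather.gov asks clients to identify themselves; the value shown is a placeholder, and the 15-second client timeout is an assumption chosen to sit above the API's 10-second timeout.

```python
import time
import urllib.error
import urllib.request
from typing import Callable, Tuple

# (status_code, body_text); a deliberately simple response model.
Response = Tuple[int, str]


def urllib_fetch(url: str) -> Response:
    """Fetch a URL with the standard library, returning (status, body) even for HTTP errors."""
    request = urllib.request.Request(
        url,
        # Placeholder identification; replace with your own app name and contact.
        headers={"User-Agent": "(example-app, contact@example.com)"},
    )
    try:
        with urllib.request.urlopen(request, timeout=15) as resp:
            return resp.status, resp.read().decode("utf-8")
    except urllib.error.HTTPError as err:
        # HTTPError doubles as a response object, so the error body is readable.
        return err.code, err.read().decode("utf-8", errors="replace")


def fetch_with_one_retry(
    url: str,
    fetch: Callable[[str], Response] = urllib_fetch,
    delay_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Response:
    """Fetch url; on a 503, wait delay_s seconds and retry exactly once."""
    status, body = fetch(url)
    if status != 503:
        return status, body
    sleep(delay_s)  # brief pause so a transient 503 has a chance to clear
    return fetch(url)  # single retry only, to avoid hammering a server that is truly down
```

For example, `status, body = fetch_with_one_retry("https://api.weather.gov/gridpoints/LOT/59,73")`. Capping the retry at one attempt keeps the client polite if the outage is real rather than transient.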

-

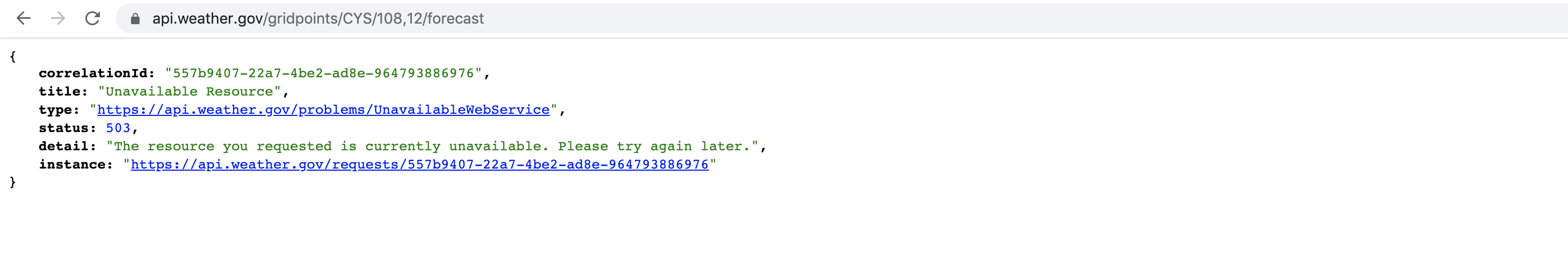

Describe the bug

I'm not sure if this is considered an "outage" or not, but I receive a lot of 503s from the forecast API, and am wondering if there's awareness of this?

It's incredibly inconsistent, but if I request, say, 10 forecast locations in a row, 90%+ of the time at least one of those requests fails with a 503, and usually several do.
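One way to put a number on the failure rate described above is to request a batch of forecast URLs and record which ones return 503, along with a correlation ID to include in a report. This is a hypothetical sketch: `fetch` is injected and is assumed to return the status code plus a dict of response headers, and the header name `X-Correlation-Id` is an assumption about how the API exposes the CorrelationId, so check it against an actual response.

```python
from typing import Callable, Dict, Iterable, List, Optional, Tuple

# Assumed fetcher shape: url -> (status_code, response_headers).
HeadFetch = Callable[[str], Tuple[int, Dict[str, str]]]


def find_header(headers: Dict[str, str], name: str) -> Optional[str]:
    """Case-insensitive header lookup (HTTP header names are case-insensitive)."""
    wanted = name.lower()
    for key, value in headers.items():
        if key.lower() == wanted:
            return value
    return None


def collect_503s(
    urls: Iterable[str], fetch: HeadFetch, id_header: str = "X-Correlation-Id"
) -> Tuple[int, List[Tuple[str, Optional[str]]]]:
    """Request each URL once; return (total requests, [(url, correlation_id), ...] for 503s)."""
    total = 0
    failures: List[Tuple[str, Optional[str]]] = []
    for url in urls:
        total += 1
        status, headers = fetch(url)
        if status == 503:
            # Keep the ID (if present) so the failure can be quoted in a report.
            failures.append((url, find_header(headers, id_header)))
    return total, failures
```

Running the 10 or so forecast URLs through `collect_503s` yields both the failure ratio (`len(failures) / total`) and the IDs to attach when reporting to operational monitoring.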

To Reproduce

Access a forecast URL such as https://api.weather.gov/gridpoints/CYS/108,12/forecast and note that it often fails with a 503 (screenshot below).

CorrelationId: 557b9407-22a7-4be2-ad8e-964793886976

Expected behavior

No 503s, or only rarely.

Screenshots

Environment

JavaScript request, and hitting the URL directly in the browser.
