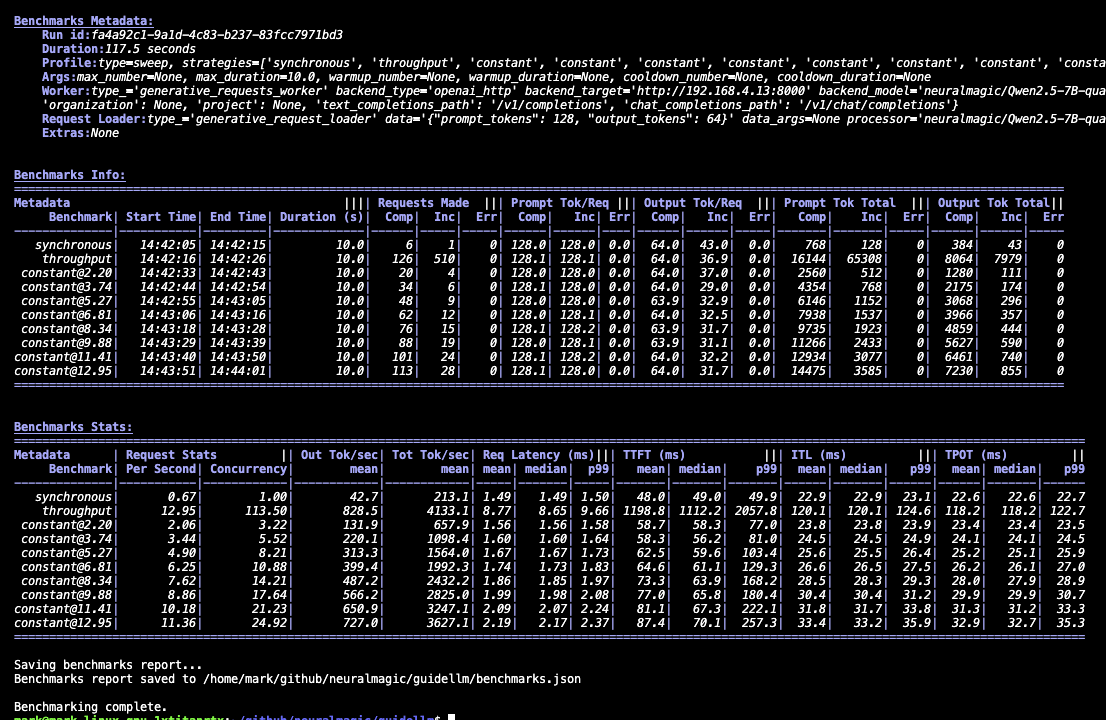

+The above command will begin the evaluation and provide progress updates similar to the following:

#### 3. Analyze the Results

@@ -90,15 +90,15 @@ After the evaluation is completed, GuideLLM will summarize the results into thre

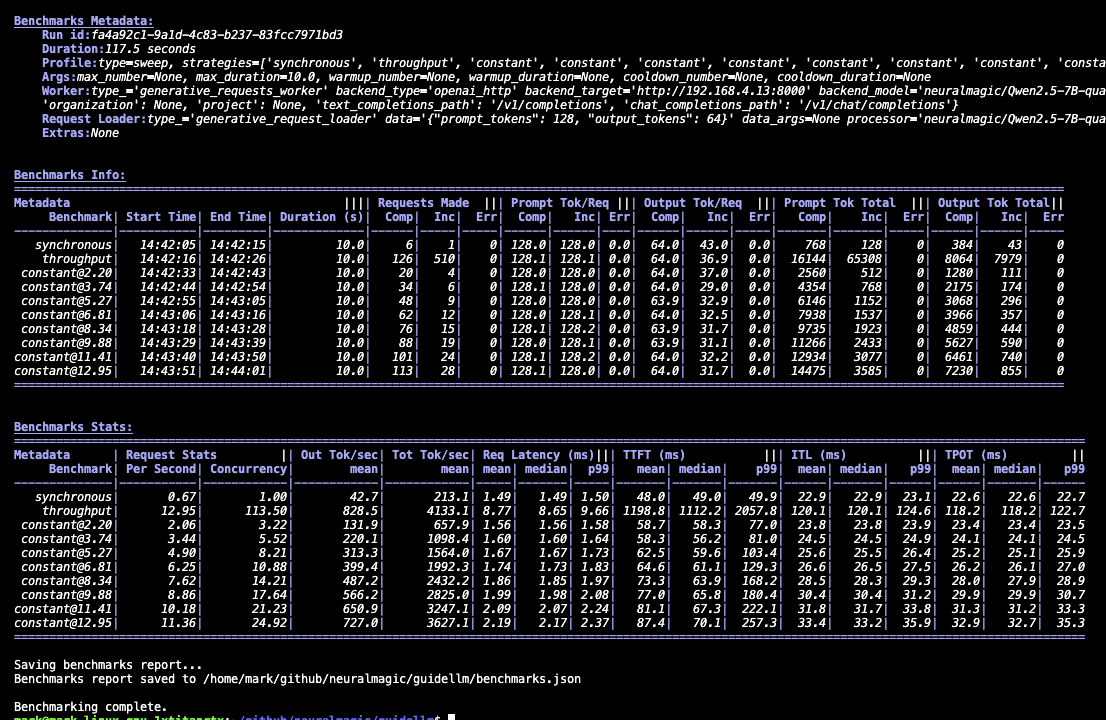

2. Benchmarks Info: A high-level view of each benchmark and the requests that were run, including the type, duration, request statuses, and number of tokens.

3. Benchmarks Stats: A summary of the statistics for each benchmark run, including the request rate, concurrency, latency, and token-level metrics such as TTFT, ITL, and more.

-The sections will look similar to the following:

+The sections will look similar to the following:

-For more details about the metrics and definitions, please refer to the [Metrics Documentation](https://github.com/neuralmagic/guidellm/blob/main/docs/metrics.md).

+For more details about the metrics and definitions, please refer to the [Metrics Documentation](https://github.com/vllm-project/guidellm/blob/main/docs/metrics.md).

#### 4. Explore the Results File

By default, the full results, including complete statistics and request data, are saved to a file `benchmarks.json` in the current working directory. This file can be used for further analysis or reporting, and additionally can be reloaded into Python for further analysis using the `guidellm.benchmark.GenerativeBenchmarksReport` class. You can specify a different file name and extension with the `--output` argument.
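As a rough illustration of the "further analysis" mentioned above, the sketch below inspects a results file using only the Python standard library. It assumes the file is plain JSON, as the default `benchmarks.json` name suggests, and it makes no assumption about the schema: `describe_results` is a hypothetical helper, not part of GuideLLM, and it only reports the top-level keys and their types.

```python
import json
from pathlib import Path


def describe_results(path="benchmarks.json"):
    """Return {top-level key: type name} for a JSON results file.

    Hypothetical helper: it does not assume any particular GuideLLM schema.
    """
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    if isinstance(data, dict):
        return {key: type(value).__name__ for key, value in data.items()}
    # The root is not an object (for example, a list), so report its type only.
    return {"<root>": type(data).__name__}
```

Calling `describe_results()` in the directory where the benchmark was run gives a quick view of what the file contains before loading it with the `GenerativeBenchmarksReport` class.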

-For more details about the supported output file types, please take a look at the [Outputs Documentation](https://github.com/neuralmagic/guidellm/blob/main/docs/outputs.md).

+For more details about the supported output file types, please take a look at the [Outputs Documentation](https://github.com/vllm-project/guidellm/blob/main/docs/outputs.md).

#### 5. Use the Results

@@ -106,7 +106,7 @@ The results from GuideLLM are used to optimize your LLM deployment for performan

For example, when deploying a chat application, we likely want to ensure that our time to first token (TTFT) and inter-token latency (ITL) are under certain thresholds to meet our service level objectives (SLOs) or service level agreements (SLAs). For instance, setting TTFT to 200 ms and ITL to 25 ms for the sample data provided in the example above, we can see that even though the server is capable of handling up to 13 requests per second, we would only be able to meet our SLOs for 99% of users at a request rate of 3.5 requests per second. If we relax our constraint on ITL to 50 ms, then we can meet the TTFT SLA for 99% of users at a request rate of approximately 10 requests per second.
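The reasoning above can be sketched as a small selection step: given p99 latencies measured at several request rates, pick the highest rate at which both thresholds hold. This is a minimal sketch, not GuideLLM code. `RatePoint` and `max_rate_meeting_slo` are hypothetical names, and the numbers are made up to mirror the paragraph rather than taken from real benchmark output.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class RatePoint:
    """p99 latencies observed at one request rate (illustrative values only)."""
    rate: float          # requests per second
    ttft_p99_ms: float   # 99th percentile time to first token, in ms
    itl_p99_ms: float    # 99th percentile inter-token latency, in ms


def max_rate_meeting_slo(
    points: Iterable[RatePoint], ttft_ms: float, itl_ms: float
) -> Optional[float]:
    """Return the highest rate whose p99 TTFT and ITL are within the limits."""
    passing = [
        p.rate
        for p in points
        if p.ttft_p99_ms <= ttft_ms and p.itl_p99_ms <= itl_ms
    ]
    return max(passing) if passing else None


# Hypothetical measurements, chosen to resemble the example in the text.
points = [
    RatePoint(rate=1.0, ttft_p99_ms=80.0, itl_p99_ms=12.0),
    RatePoint(rate=3.5, ttft_p99_ms=150.0, itl_p99_ms=24.0),
    RatePoint(rate=10.0, ttft_p99_ms=190.0, itl_p99_ms=45.0),
    RatePoint(rate=13.0, ttft_p99_ms=600.0, itl_p99_ms=90.0),
]

strict = max_rate_meeting_slo(points, ttft_ms=200, itl_ms=25)
relaxed = max_rate_meeting_slo(points, ttft_ms=200, itl_ms=50)
print(strict, relaxed)  # 3.5 10.0
```

With these made-up points, the strict ITL limit caps the usable rate at 3.5 requests per second, and relaxing ITL to 50 ms raises it to 10, even though the server itself accepts 13.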

-For further details on determining the optimal request rate and SLOs, refer to the [SLOs Documentation](https://github.com/neuralmagic/guidellm/blob/main/docs/service_level_objectives.md).

+For further details on determining the optimal request rate and SLOs, refer to the [SLOs Documentation](https://github.com/vllm-project/guidellm/blob/main/docs/service_level_objectives.md).

### Configurations

@@ -165,16 +165,16 @@ The UI is a WIP, check more recent PRs for the latest updates

### Documentation

-Our comprehensive documentation offers detailed guides and resources to help you maximize the benefits of GuideLLM. Whether just getting started or looking to dive deeper into advanced topics, you can find what you need in our [Documentation](https://github.com/neuralmagic/guidellm/blob/main/docs).

+Our comprehensive documentation offers detailed guides and resources to help you maximize the benefits of GuideLLM. Whether just getting started or looking to dive deeper into advanced topics, you can find what you need in our [Documentation](https://github.com/vllm-project/guidellm/blob/main/docs).

### Core Docs

-- [**Installation Guide**](https://github.com/neuralmagic/guidellm/blob/main/docs/install.md) - This guide provides step-by-step instructions for installing GuideLLM, including prerequisites and setup tips.

-- [**Backends Guide**](https://github.com/neuralmagic/guidellm/blob/main/docs/backends.md) - A comprehensive overview of supported backends and how to set them up for use with GuideLLM.

-- [**Data/Datasets Guide**](https://github.com/neuralmagic/guidellm/blob/main/docs/datasets.md) - Information on supported datasets, including how to use them for benchmarking.

-- [**Metrics Guide**](https://github.com/neuralmagic/guidellm/blob/main/docs/metrics.md) - Detailed explanations of the metrics used in GuideLLM, including definitions and how to interpret them.

-- [**Outputs Guide**](https://github.com/neuralmagic/guidellm/blob/main/docs/outputs.md) - Information on the different output formats supported by GuideLLM and how to use them.

-- [**Architecture Overview**](https://github.com/neuralmagic/guidellm/blob/main/docs/architecture.md) - A detailed look at GuideLLM's design, components, and how they interact.

+- [**Installation Guide**](https://github.com/vllm-project/guidellm/blob/main/docs/install.md) - This guide provides step-by-step instructions for installing GuideLLM, including prerequisites and setup tips.

+- [**Backends Guide**](https://github.com/vllm-project/guidellm/blob/main/docs/backends.md) - A comprehensive overview of supported backends and how to set them up for use with GuideLLM.

+- [**Data/Datasets Guide**](https://github.com/vllm-project/guidellm/blob/main/docs/datasets.md) - Information on supported datasets, including how to use them for benchmarking.

+- [**Metrics Guide**](https://github.com/vllm-project/guidellm/blob/main/docs/metrics.md) - Detailed explanations of the metrics used in GuideLLM, including definitions and how to interpret them.

+- [**Outputs Guide**](https://github.com/vllm-project/guidellm/blob/main/docs/outputs.md) - Information on the different output formats supported by GuideLLM and how to use them.

+- [**Architecture Overview**](https://github.com/vllm-project/guidellm/blob/main/docs/architecture.md) - A detailed look at GuideLLM's design, components, and how they interact.

### Supporting External Documentation

@@ -184,17 +184,17 @@ Our comprehensive documentation offers detailed guides and resources to help you

We appreciate contributions to the code, examples, integrations, documentation, bug reports, and feature requests! Your feedback and involvement are crucial in helping GuideLLM grow and improve. Below are some ways you can get involved:

-- [**DEVELOPING.md**](https://github.com/neuralmagic/guidellm/blob/main/DEVELOPING.md) - Development guide for setting up your environment and making contributions.

-- [**CONTRIBUTING.md**](https://github.com/neuralmagic/guidellm/blob/main/CONTRIBUTING.md) - Guidelines for contributing to the project, including code standards, pull request processes, and more.

-- [**CODE_OF_CONDUCT.md**](https://github.com/neuralmagic/guidellm/blob/main/CODE_OF_CONDUCT.md) - Our expectations for community behavior to ensure a welcoming and inclusive environment.

+- [**DEVELOPING.md**](https://github.com/vllm-project/guidellm/blob/main/DEVELOPING.md) - Development guide for setting up your environment and making contributions.

+- [**CONTRIBUTING.md**](https://github.com/vllm-project/guidellm/blob/main/CONTRIBUTING.md) - Guidelines for contributing to the project, including code standards, pull request processes, and more.

+- [**CODE_OF_CONDUCT.md**](https://github.com/vllm-project/guidellm/blob/main/CODE_OF_CONDUCT.md) - Our expectations for community behavior to ensure a welcoming and inclusive environment.

### Releases

-Visit our [GitHub Releases Page](https://github.com/neuralmagic/guidellm/releases) and review the release notes to stay updated with the latest releases.

+Visit our [GitHub Releases Page](https://github.com/vllm-project/guidellm/releases) and review the release notes to stay updated with the latest releases.

### License

-GuideLLM is licensed under the [Apache License 2.0](https://github.com/neuralmagic/guidellm/blob/main/LICENSE).

+GuideLLM is licensed under the [Apache License 2.0](https://github.com/vllm-project/guidellm/blob/main/LICENSE).

### Cite

@@ -205,6 +205,6 @@ If you find GuideLLM helpful in your research or projects, please consider citin

title={GuideLLM: Scalable Inference and Optimization for Large Language Models},

author={Neural Magic, Inc.},

year={2024},

- howpublished={\url{https://github.com/neuralmagic/guidellm}},

+ howpublished={\url{https://github.com/vllm-project/guidellm}},

}

```

diff --git a/deploy/Containerfile b/deploy/Containerfile

index 6c7d5613..2702e24d 100644

--- a/deploy/Containerfile

+++ b/deploy/Containerfile

@@ -32,7 +32,7 @@ USER guidellm

WORKDIR /results

# Metadata

-LABEL org.opencontainers.image.source="https://github.com/neuralmagic/guidellm" \

+LABEL org.opencontainers.image.source="https://github.com/vllm-project/guidellm" \

org.opencontainers.image.description="GuideLLM Performance Benchmarking Container"

# Set the environment variable for the benchmark script

diff --git a/docs/backends.md b/docs/backends.md

index 9bb053f0..b21319aa 100644

--- a/docs/backends.md

+++ b/docs/backends.md

@@ -42,4 +42,4 @@ For more information on starting a TGI server, see the [TGI Documentation](https

## Expanding Backend Support

-GuideLLM is an open platform, and we encourage contributions to extend its backend support. Whether it's adding new server implementations, integrating with Python-based backends, or enhancing existing capabilities, your contributions are welcome. For more details on how to contribute, see the [CONTRIBUTING.md](https://github.com/neuralmagic/guidellm/blob/main/CONTRIBUTING.md) file.

+GuideLLM is an open platform, and we encourage contributions to extend its backend support. Whether it's adding new server implementations, integrating with Python-based backends, or enhancing existing capabilities, your contributions are welcome. For more details on how to contribute, see the [CONTRIBUTING.md](https://github.com/vllm-project/guidellm/blob/main/CONTRIBUTING.md) file.

diff --git a/docs/install.md b/docs/install.md

index 2237f8af..c25c465b 100644

--- a/docs/install.md

+++ b/docs/install.md

@@ -41,7 +41,7 @@ pip install guidellm==0.2.0

To install the latest development version of GuideLLM from the main branch, use the following command:

```bash

-pip install git+https://github.com/neuralmagic/guidellm.git

+pip install git+https://github.com/vllm-project/guidellm.git

```

This will clone the repository and install GuideLLM directly from the main branch.

@@ -51,7 +51,7 @@ This will clone the repository and install GuideLLM directly from the main branc

If you want to install GuideLLM from a specific branch (e.g., `feature-branch`), use the following command:

```bash

-pip install git+https://github.com/neuralmagic/guidellm.git@feature-branch

+pip install git+https://github.com/vllm-project/guidellm.git@feature-branch

```

Replace `feature-branch` with the name of the branch you want to install.

@@ -84,4 +84,4 @@ This should display the installed version of GuideLLM.

## Troubleshooting

-If you encounter any issues during installation, ensure that your Python and pip versions meet the prerequisites. For further assistance, please refer to the [GitHub Issues](https://github.com/neuralmagic/guidellm/issues) page or consult the [Documentation](https://github.com/neuralmagic/guidellm/tree/main/docs).

+If you encounter any issues during installation, ensure that your Python and pip versions meet the prerequisites. For further assistance, please refer to the [GitHub Issues](https://github.com/vllm-project/guidellm/issues) page or consult the [Documentation](https://github.com/vllm-project/guidellm/tree/main/docs).

diff --git a/docs/outputs.md b/docs/outputs.md

index ea3d9a6f..7a42c52a 100644

--- a/docs/outputs.md

+++ b/docs/outputs.md

@@ -106,4 +106,4 @@ for benchmark in benchmarks:

print(benchmark.id_)

```

-For more details on the `GenerativeBenchmarksReport` class and its methods, refer to the [source code](https://github.com/neuralmagic/guidellm/blob/main/src/guidellm/benchmark/output.py).

+For more details on the `GenerativeBenchmarksReport` class and its methods, refer to the [source code](https://github.com/vllm-project/guidellm/blob/main/src/guidellm/benchmark/output.py).

diff --git a/pyproject.toml b/pyproject.toml

index 2aec3a27..8115b1fd 100644

--- a/pyproject.toml

+++ b/pyproject.toml

@@ -100,10 +100,10 @@ dev = [

]

[project.urls]

-homepage = "https://github.com/neuralmagic/guidellm"

-source = "https://github.com/neuralmagic/guidellm"

-issues = "https://github.com/neuralmagic/guidellm/issues"

-docs = "https://github.com/neuralmagic/guidellm/tree/main/docs"

+homepage = "https://github.com/vllm-project/guidellm"

+source = "https://github.com/vllm-project/guidellm"

+issues = "https://github.com/vllm-project/guidellm/issues"

+docs = "https://github.com/vllm-project/guidellm/tree/main/docs"

[project.entry-points.console_scripts]
