I like the idea (for us). Not sure how we can make this easy / straightforward for outside contributors, but I like it.

Hi @AnthonyMockler

Just wanted to get your feedback on how we can standardize the implementation of #5.

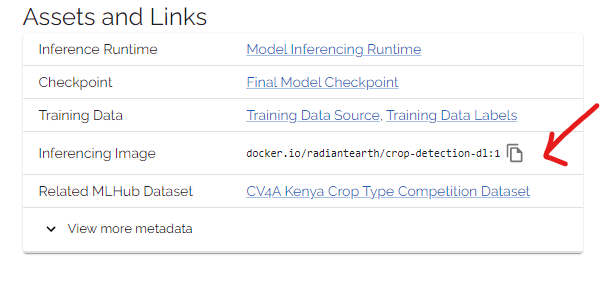

One way to implement this is to recommend that model providers create a Docker image that encapsulates an environment capable of running model inference, along with instructions on how users can supply their own data and extract the results. As an example, this is how Radiant ML Hub serves up its models.

IMO, this is a better alternative than asking them to set up a virtual env (for Python) and something else if it's R or Julia, since a container can wrap almost any model.
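
To make the "input their own data and extract the results" part concrete, here is a rough sketch of what the user-facing side could look like. It assumes the provider's image reads from `/data/input` and writes to `/data/output`; those container paths, the image name, and the `build_inference_command` helper are all made up for illustration and are not taken from any existing model repo.

```python
import shlex
from pathlib import Path


def build_inference_command(image, input_dir, output_dir):
    """Build a `docker run` command that mounts user data in and results out.

    The container paths /data/input and /data/output are an assumed
    convention that the model provider would have to document.
    """
    input_dir = Path(input_dir).resolve()
    output_dir = Path(output_dir).resolve()
    return [
        "docker", "run", "--rm",
        # Read-only bind mount: the model can read the user's data but not modify it.
        "-v", f"{input_dir}:/data/input:ro",
        # Writable bind mount: predictions end up on the host here.
        "-v", f"{output_dir}:/data/output",
        image,
    ]


if __name__ == "__main__":
    # Hypothetical image name, only for demonstration.
    cmd = build_inference_command(
        "example-org/poverty-model:latest", "./input", "./output"
    )
    print(shlex.join(cmd))
```

The script only prints the command; a user would then paste it into a shell or hand the list to `subprocess.run`. The point is that the provider only has to document two folders and one image name, regardless of what language the model is written in.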

I think this is probably doable for the poverty mapping project, since it can fetch most of the datasets it needs (via geowrangler modules), assuming they are available for that country/year.

See this example
