Writing tiles to numpy in parallel #7473

Replies: 3 comments 12 replies


You could always just use `dask.delayed` like we did here. Basically, create a function that, when called, pulls out one chunk of your array and writes just that chunk to a file. Then wrap that function in `dask.delayed`, and I think dask should be able to parallelize those calls.
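A minimal sketch of that approach follows. It assumes the variable is backed by a dask array (as it is when the dataset is opened lazily) and that each tile should go to its own `.npy` file. `write_block`, `save_tiles_delayed` and the `tile_0000000.npy` naming scheme are hypothetical. Instead of one delayed call per tile, it uses dask's `Array.to_delayed()` so that each task handles one dask chunk of tiles, which keeps the task graph manageable for ~2e6 tiles.

```python
import os

import dask
import numpy as np


def write_block(block, start, out_dir):
    """Write each tile of one chunk to its own float16 .npy file.

    `block` is a NumPy array of shape (n, ny, nx); `start` is the global
    index of its first tile. Returns the number of tiles written.
    """
    block = block.astype(np.float16)
    for k, tile in enumerate(block):
        np.save(os.path.join(out_dir, f"tile_{start + k:07d}.npy"), tile)
    return block.shape[0]


def save_tiles_delayed(data, out_dir):
    """Run one delayed write per dask chunk along the first (index) axis."""
    # Make every chunk span full tiles, so one chunk is a stack of whole tiles.
    data = data.rechunk({1: -1, 2: -1})
    # Global index of the first tile in each chunk, e.g. (0, 3, 6, 9).
    starts = np.cumsum((0,) + data.chunks[0][:-1])
    # to_delayed() yields one Delayed per chunk; ravel() flattens the
    # (nchunks, 1, 1) grid in index order.
    blocks = data.to_delayed().ravel()
    tasks = [
        dask.delayed(write_block)(blk, int(s), out_dir)
        for blk, s in zip(blocks, starts)
    ]
    # dask.compute runs the tasks in parallel (threaded scheduler by default).
    return sum(dask.compute(*tasks))
```

For the dataset in the question this would be called as `save_tiles_delayed(ds[var].data, out_dir)`, where `var` and `out_dir` are placeholders. Whether the threaded scheduler, processes, or a `dask.distributed` cluster is fastest depends on where the time actually goes (reading from the source versus writing many small files).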


Why not just use Zarr? That's basically what Zarr does: it splits arrays into a bunch of chunks.


You might want to consider using xbatcher, which is developed for ML applications. It makes it easy to feed Xarray objects to machine learning libraries such as PyTorch or Keras.


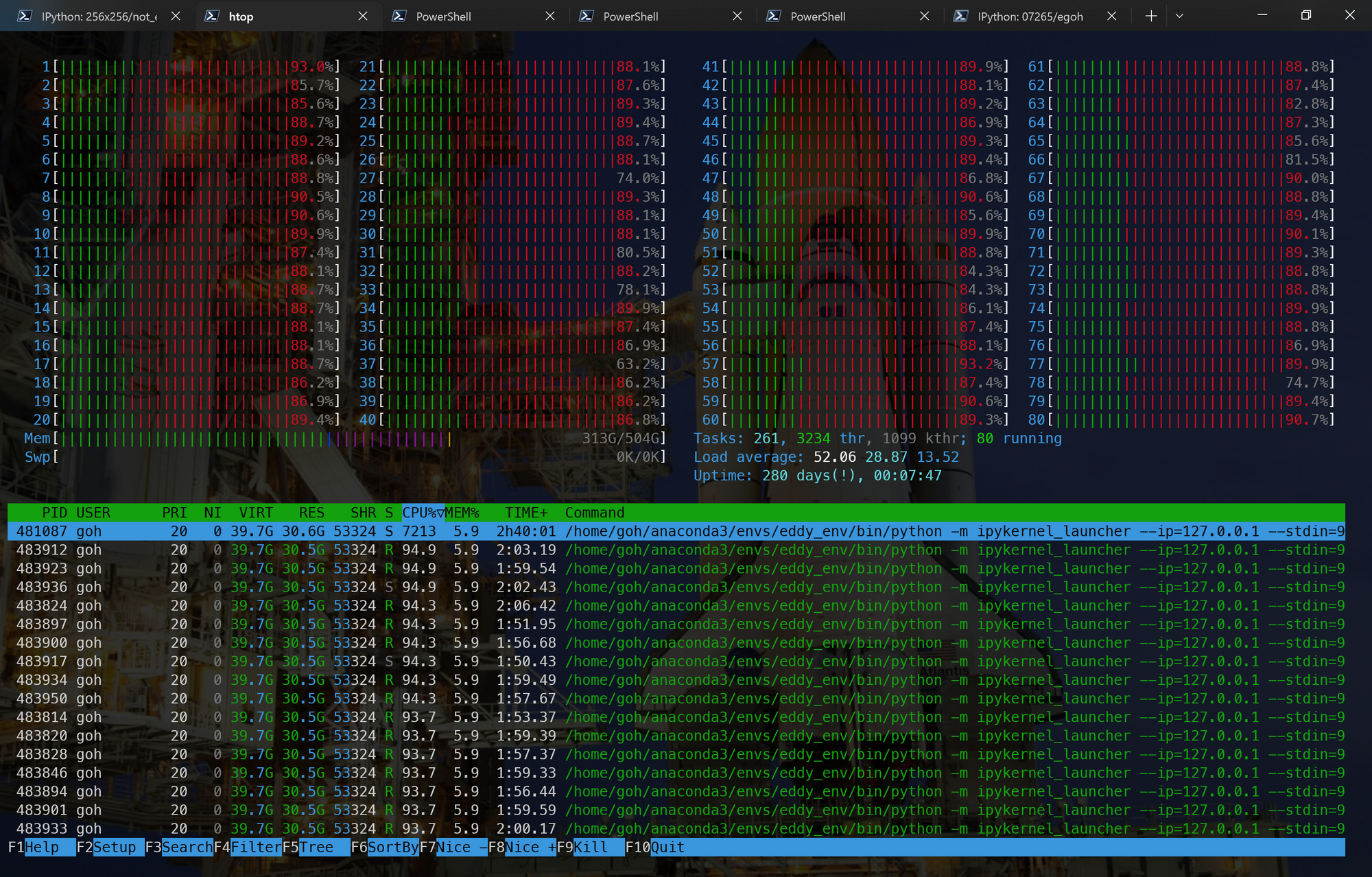

I am trying to improve the performance of my script that takes an xarray dataset containing a variable with shape (~2e6, 256, 256), corresponding to the dimensions `index`, `i_local`, and `j_local`, respectively. My goal is to cast the entire variable to float16 and write each `index` to an individual 256x256 `.npy` file. This dataset is a subset of a 5 PB ocean simulation (LLC4320) that is lazily loaded using xarray, so I'm essentially streaming from one location on disk into NumPy files in another location on disk. However, my current implementation is very slow, taking ~8 seconds per tile.

I have looked into using `map_blocks` and `GroupBy.apply`, but those seem to require helper functions that return arrays, whereas my `save_to_numpy` function doesn't return anything. Is there any way to parallelize this operation using xarray and dask? Are there any best practices for this type of operation? Any help would be greatly appreciated.
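On the `map_blocks` concern: one common workaround is to do the write as a side effect inside the mapped function and return a tiny placeholder array (here, one `1` per tile written), so the result can be summed to confirm the count. This is a minimal sketch, assuming a dask-backed array with `index` as its first axis; `_write_block`, `save_tiles_map_blocks` and the file naming are hypothetical and are not the `save_to_numpy` function from the question. The `block_info` keyword is a real `dask.array.map_blocks` feature that reports where the current chunk sits in the full array.

```python
import os

import numpy as np


def _write_block(block, out_dir, block_info=None):
    """Write each tile of one chunk to a float16 .npy file (side effect).

    Returns a placeholder array with one 1 per tile, because map_blocks
    must get an array back from every call.
    """
    # (start, stop) of this chunk along axis 0 of the input array.
    start = block_info[0]["array-location"][0][0]
    for k, tile in enumerate(block.astype(np.float16)):
        np.save(os.path.join(out_dir, f"tile_{start + k:07d}.npy"), tile)
    return np.ones(block.shape[0], dtype=np.int64)


def save_tiles_map_blocks(data, out_dir):
    """Write all tiles via map_blocks and return how many were written."""
    data = data.rechunk({1: -1, 2: -1})  # whole tiles in every chunk
    written = data.map_blocks(
        _write_block,
        out_dir=out_dir,
        drop_axis=[1, 2],  # output is 1-D: one entry per tile
        dtype=np.int64,
        meta=np.array((), dtype=np.int64),  # avoid calling _write_block to infer meta
    )
    # Nothing is written until the graph is computed.
    return int(written.sum().compute())
```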

Just in case, here's my `save_to_numpy` function:
