
Hello everyone! I'm looking to implement a xarray backend which would allow to read a series of HDF5 files (not written following netCFD conventions). I managed to get a backend which allows lazy-loading the data into xarray (I can provide the code if it's relevant, let me know), import xarray as xr

ds = xr.open_mfdataset('/BIG14_TB/tmp/data_*.h5', engine='mytest', parallel=True)

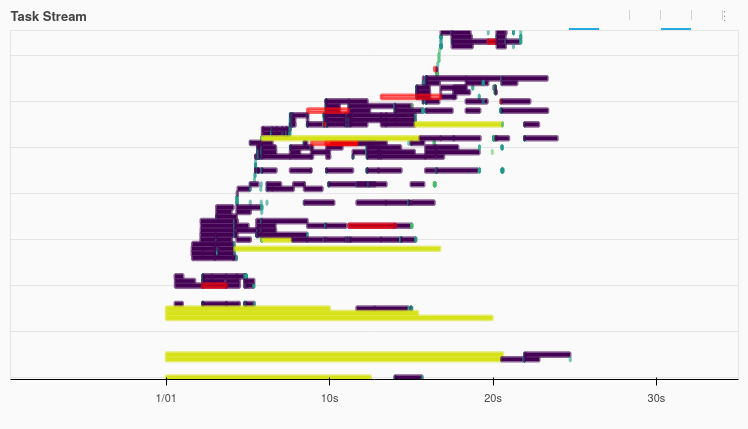

print(ds)In this case, the full array is about 22GB and could still fit into memory (I've been experimenting with 32GB RAM). I'm looking to open multi-variable datasets which are in the range of 1TB. First off, let's consider the following computation: pixel_in_time = ds['myvar'].isel(t=slice(-50, None)).mean(('x', 'y','z')).persist()I can't help but notice that most of the time reported by the Dask profile is spent in the Secondly, when the dataset I'm manipulating approaches the size of the RAM, computations will freeze. This only happens when I'm using Dask's distributed scheduler. The task stream appears to create a lot of threads at the start, and at some point, Dask starts to complain about having too much unmanaged memory and starts to spill to disk (leading to the freeze). Such a behaviour could be triggered by the following code (I halted the client before it started spilling, but the task stream would continue to grow diagonally like shown below), import numpy as np

time_average = np.sqrt(ds['myvar']).mean(('x', 'y')).persist()I tried rechunking the data in various ways to no avail. Thank you very much in advance! |




I've investigated a bit more and traced the issue back to calls to `dask.array.stack` that I made within my `xarray.backends.BackendArray` implementation. It seems Dask Distributed does not like it. I will close (I'd prefer to delete) this issue, as it fails to describe the problem correctly, and will come back with an MCVE in the future.