Replies: 2 comments 2 replies

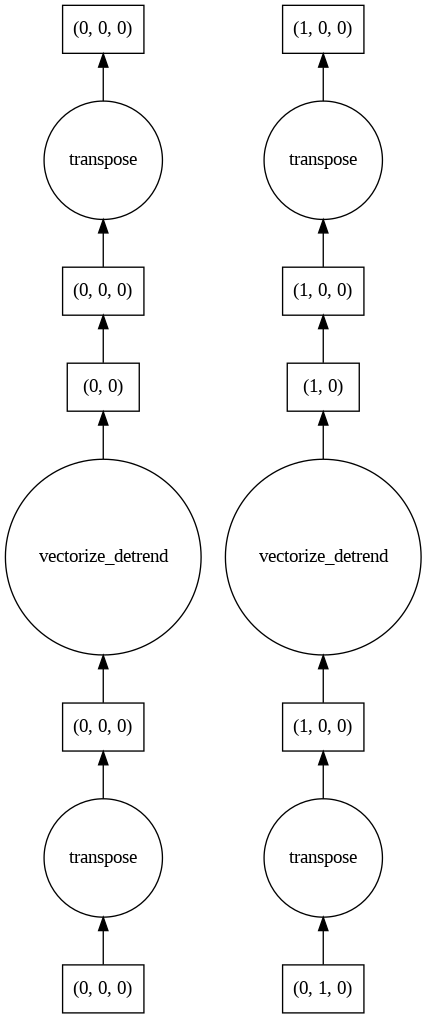

I tried changing […] The compute step […] Here is the task graph:

It might not be possible (yet?). I have had what I believe are similar issues when wrapping scipy functions and applying them to dask arrays. There are cases where the scipy function tries to load everything into memory, so even if using […] I haven't had time to look into the root of either issue yet, but if you want to play and see for yourself, I have some of the experiments up on this GitHub repo. If you try […]
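
For readers following along, here is a minimal sketch of one way to wrap a scipy function so that it runs block by block on a dask array instead of on the whole array at once. It is not taken from the experiments mentioned above. The array shape, the chunking and the choice of `map_blocks` are all assumptions made for illustration, and it only works when no chunk boundary falls along the axis being detrended.

```python
import dask.array as da
import numpy as np
from scipy import signal

# Hypothetical data: 1000 samples along axis 0 with a linear trend plus noise.
rng = np.random.default_rng(0)
trend = np.linspace(0.0, 5.0, 1000)[:, None, None]
np_data = trend + rng.standard_normal((1000, 40, 3))

# Chunk only along axis 1, so every block holds the full axis 0
# that detrend needs to see in one piece.
x = da.from_array(np_data, chunks=(-1, 10, 3))

# map_blocks calls signal.detrend once per block with a plain NumPy array.
# Passing dtype and meta explicitly avoids having dask infer them by
# calling detrend on an empty array.
lazy = x.map_blocks(
    signal.detrend,
    axis=0,
    dtype=x.dtype,
    meta=np.array((), dtype=x.dtype),
)

# Nothing has been computed yet; this line triggers the per-block work.
result = lazy.compute()
```

Because each block is detrended independently along axis 0, the result should match calling `signal.detrend(np_data, axis=0)` on the full in-memory array, while peak memory is bounded by the number of blocks being processed at the same time.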

(Edit: I removed the task graph as there is no graph generated for `dask='allowed'`, which is the setting used in the working MVE below.)

[This is related to another active thread I had started, but I feel the question here is specific enough to warrant a separate thread.]

What I am trying to do: Detrend an xarray DataArray using `scipy.signal.detrend` called within `xr.apply_ufunc`.

What is the problem: The MVE below works for small sizes such as `(10000, 1000, 3)` but fails if I progressively increase the array size to `(10000, 10000, 3)`, even when I have more than enough memory. I get a 'kernel died' message right after the `apply_ufunc` step is finished.
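
To make the setup concrete, here is a minimal sketch of calling `scipy.signal.detrend` through `xr.apply_ufunc`. It is not the MVE from this post. The helper name `detrend_along`, the dimension names, the small array shape and the use of `dask="parallelized"` (rather than `dask='allowed'`) are all assumptions chosen for illustration, and the sketch is not claimed to resolve the 'kernel died' behaviour.

```python
import numpy as np
import xarray as xr
from scipy import signal


def detrend_along(arr: xr.DataArray, dim: str) -> xr.DataArray:
    """Hypothetical helper: remove a linear trend along `dim`."""
    out = xr.apply_ufunc(
        signal.detrend,
        arr,
        input_core_dims=[[dim]],   # apply_ufunc moves `dim` to the last axis
        output_core_dims=[[dim]],
        kwargs={"axis": -1},       # so detrend works along the last axis
        dask="parallelized",       # one detrend call per dask chunk
        output_dtypes=[arr.dtype],
    )
    # Restore the original dimension order.
    return out.transpose(*arr.dims)


# Small hypothetical data set: linear trend along "time" plus noise.
rng = np.random.default_rng(0)
values = np.linspace(0.0, 10.0, 100)[:, None, None] + rng.standard_normal((100, 20, 3))
data = xr.DataArray(values, dims=("time", "x", "y"))

# Chunk along "x" only; the core dimension "time" must stay in a single chunk.
chunked = data.chunk({"x": 5})

detrended = detrend_along(chunked, "time").compute()
```

For scale, assuming float64 values, an array of shape `(10000, 10000, 3)` holds 3e8 elements, or about 2.4 GB, and any intermediate copies made during detrending would add to that.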
