
The `map_blocks` trick should be unnecessary given dask/dask#8015 is in 2021.09.0.

Your error is because you're using

I don't know what's happening. Is there any difference in the memory usage? It would really help if you could provide a reproducible example.


@dcherian Thanks for the quick response.

I do not see a significant difference in memory usage in test examples on my personal laptop. On the other hand, I saw huge memory usage (with the `isel` approach) when running on an HPC cluster with dask-mpi. This was probably because I set the memory target, spill, and pause options to False in the distributed.yaml file to check actual memory usage (>32 GB for 300 GB of data). Eventually, my code crashed.
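For reference, the same three worker memory options can also be set from Python instead of distributed.yaml. This is a minimal sketch, assuming the standard `distributed.worker.memory.*` configuration keys; the settings only affect workers if they are applied in the environment where the workers start, which may not be the case with dask-mpi.

```python
import dask

# Assumption: standard distributed worker memory keys. Setting a key to
# False disables the corresponding threshold.
memory_settings = {
    "distributed.worker.memory.target": False,  # do not spill based on managed memory
    "distributed.worker.memory.spill": False,   # do not spill based on process memory
    "distributed.worker.memory.pause": False,   # do not pause task execution
}

# Apply the settings temporarily and read them back to confirm.
with dask.config.set(memory_settings):
    current = {key: dask.config.get(key) for key in memory_settings}

print(current)
```

With all three thresholds disabled, workers neither spill nor pause, so memory can grow until the process fails, which would be consistent with the crash described above.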

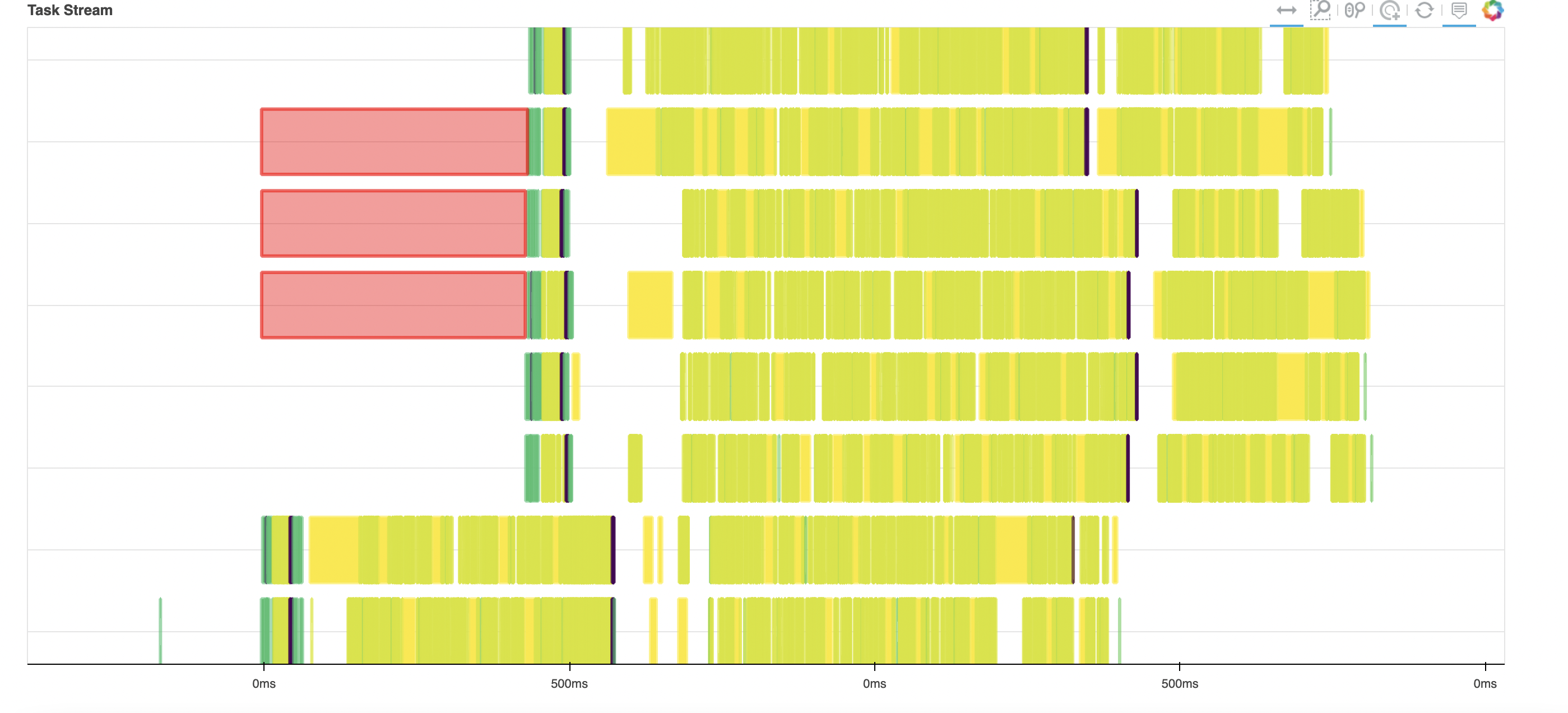

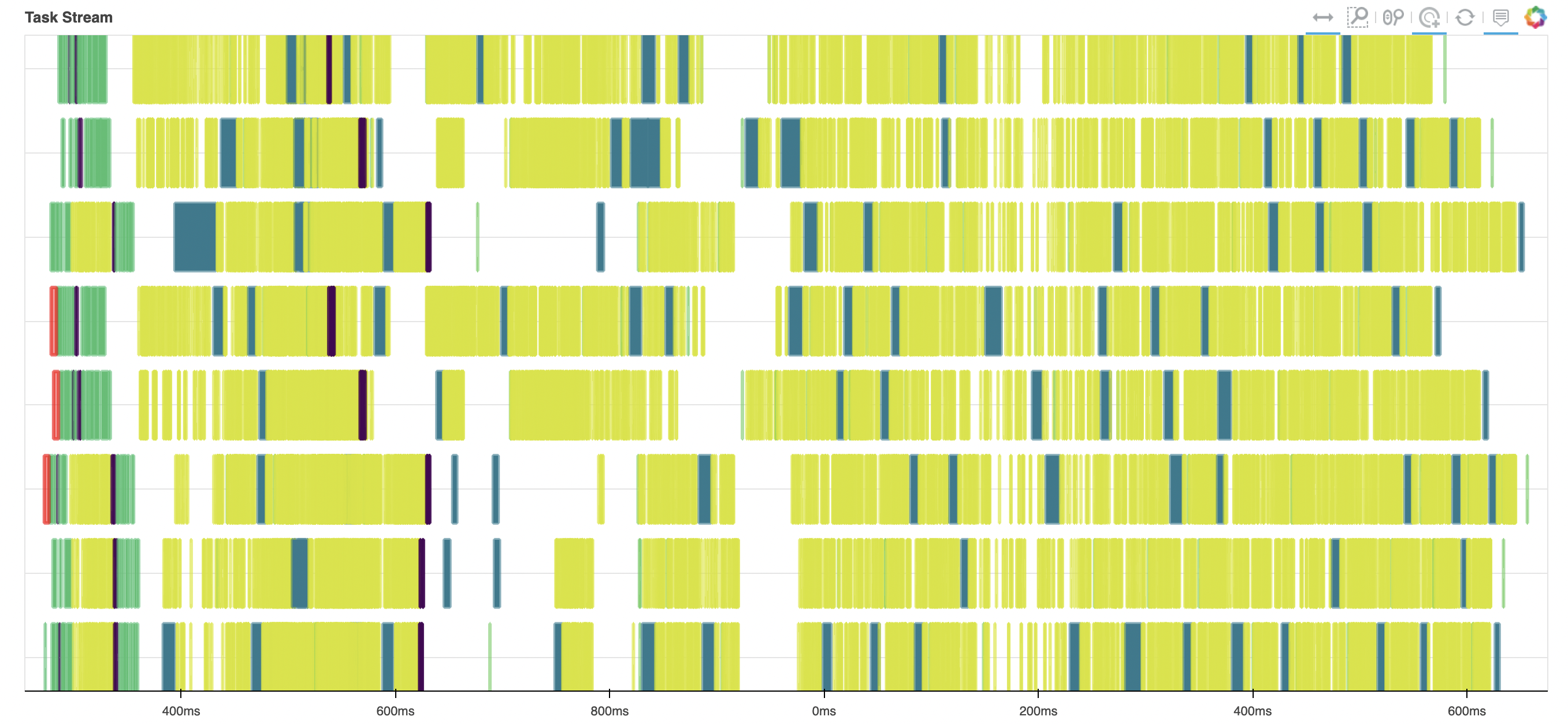

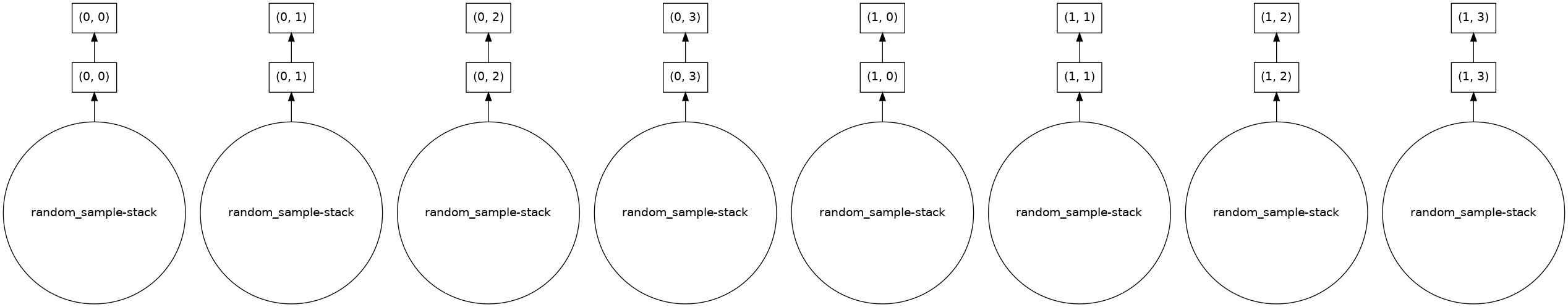

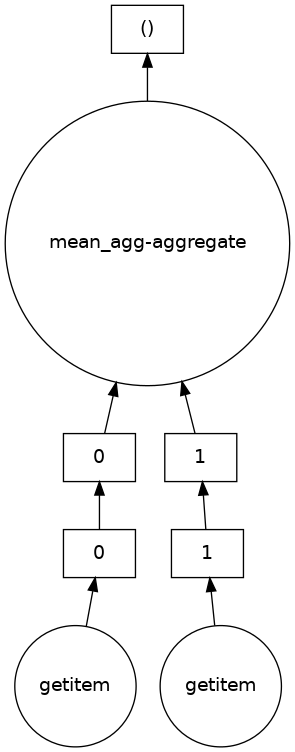

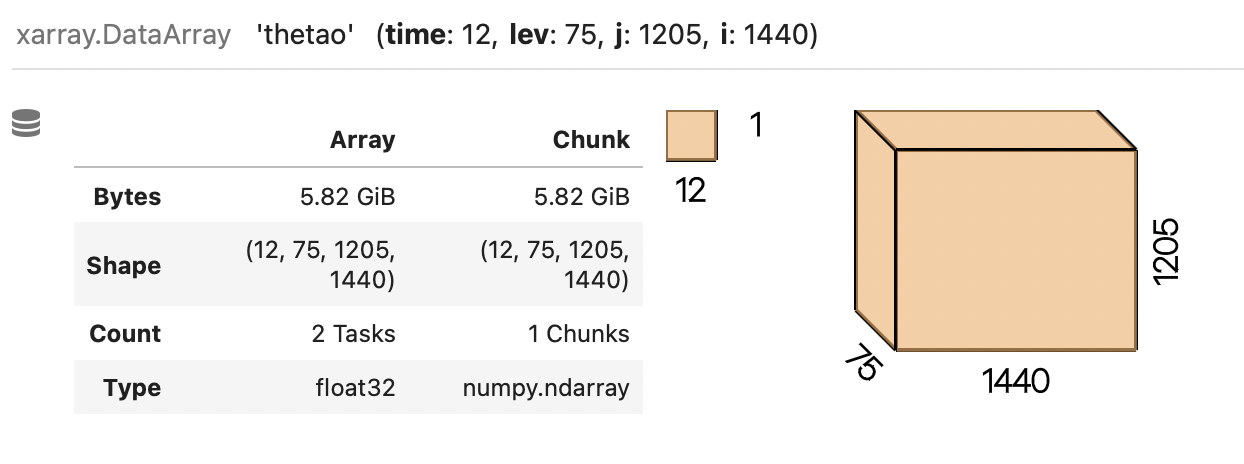

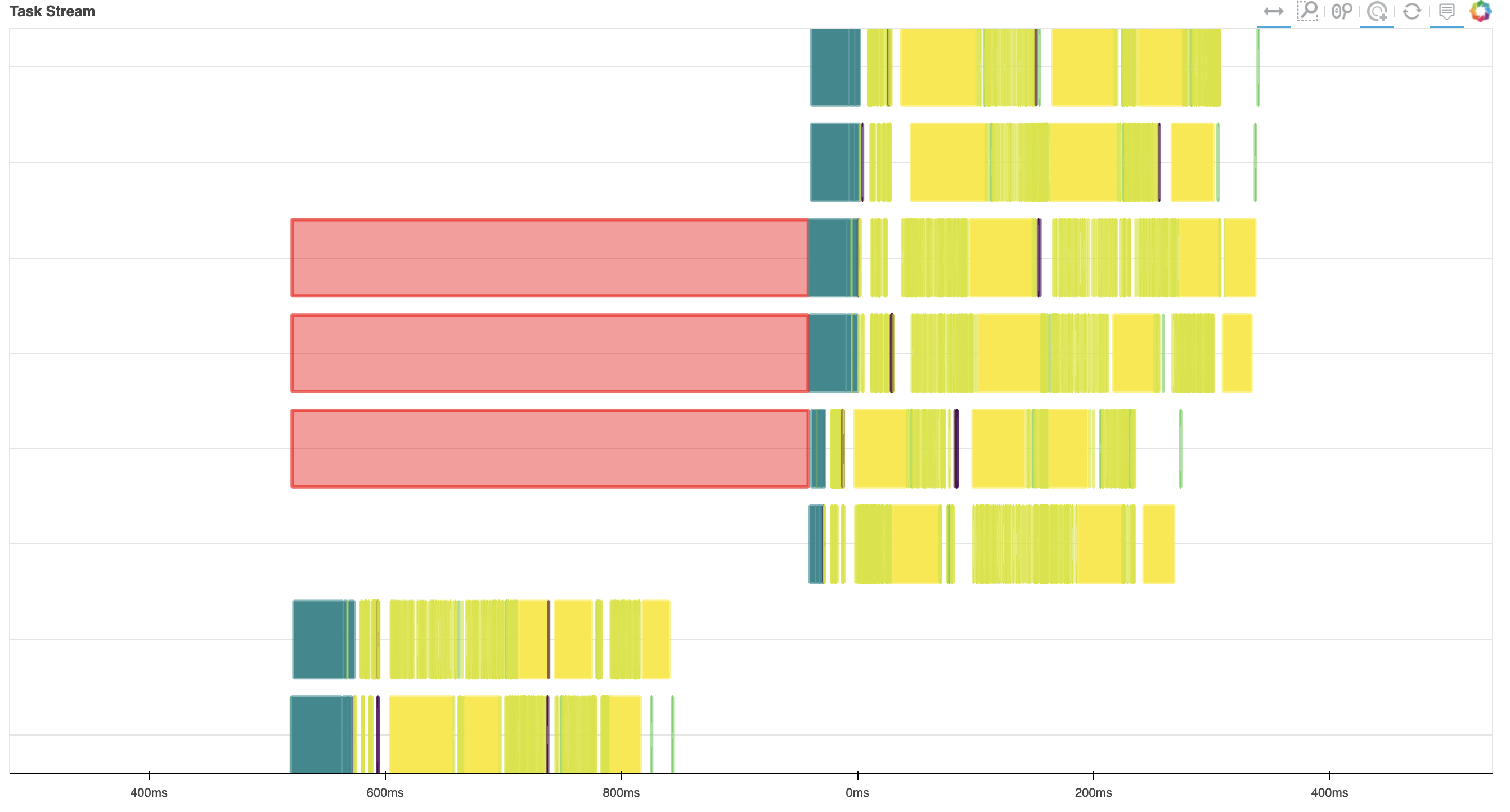

Here is a reproducible example.

On my Mac, the first approach takes twice as long and there is a lot of red in the task streams.

From the 1st approach (`isel` and then mean):

From the 2nd approach (mean from data snapshot):

Also, I observed that using

I do not know what is going wrong. I thought that


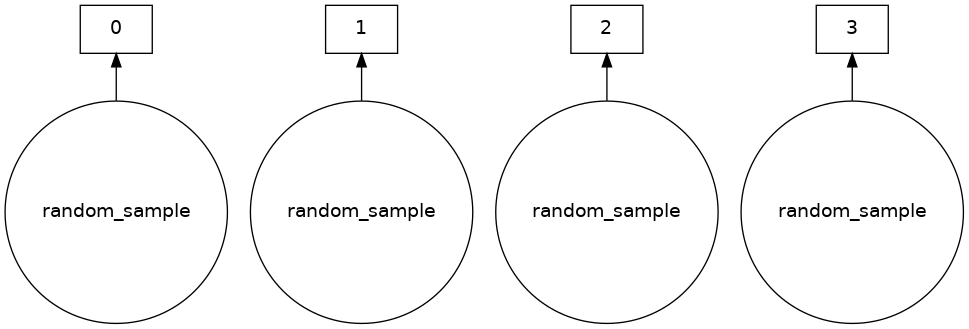

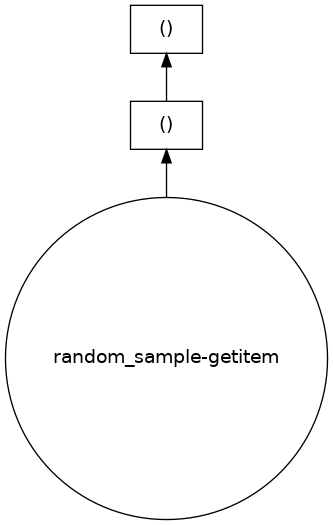

I am working with multi-ensemble output and I use the `concat` operation to combine multiple files into one xarray dataset.

The main issue

I want to compute the mean over ensemble members for a specific time snapshot. I compared the required calculation using two methods (for consistency, chunks were defined the same way in both).

I was expecting both methods to have similar efficiencies. However, method 1 tends to be significantly slower with a lot of inter-worker communication and data transfer (see task stream below).

On the other hand, method 2 works very smoothly.
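One possible reading of the two methods, sketched on small synthetic data. The variable name `temp`, the dimension name `member`, and the array sizes are hypothetical stand-ins for the real ensemble files, and the original code may differ in detail.

```python
import numpy as np
import xarray as xr

# Hypothetical stand-in for the ensemble files: 4 members, 10 time steps.
n_members, n_time, ny, nx = 4, 10, 8, 8
rng = np.random.default_rng(0)
members = [
    xr.Dataset(
        {"temp": (("time", "y", "x"), rng.random((n_time, ny, nx)))}
    ).chunk({"time": 1})  # same chunking for both methods
    for _ in range(n_members)
]

snapshot = 3

# Method 1: concatenate all members, then select the snapshot and average.
combined = xr.concat(members, dim="member")
mean_1 = combined["temp"].isel(time=snapshot).mean("member")

# Method 2: select the snapshot from each member, then concatenate and average.
snapshots = [m["temp"].isel(time=snapshot) for m in members]
mean_2 = xr.concat(snapshots, dim="member").mean("member")

result_1 = mean_1.compute()
result_2 = mean_2.compute()
same = bool(np.allclose(result_1.values, result_2.values))
print(same)
```

Both paths compute the same values; the difference reported above is in how much data the scheduler loads and moves to get there.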

It seems that, in method 1, data for a lot of time snapshots is being loaded before slicing. This issue is related to dask/dask#3595. Based on suggestions from @dcherian and others, `map_blocks(numpy.copy)` should work fine. However, I could not make it work (I am new to `map_blocks`) and I get the following error.

I don't know what is going wrong with the `map_blocks` command. Could someone help with this? Are there better ways to handle slicing of large datasets?

I am using

dask 2021.10.0

Xarray 0.19.0
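For the `map_blocks(numpy.copy)` suggestion mentioned above, a minimal sketch on a plain dask array might look like the following. The array shape and chunking are hypothetical. On an xarray object the underlying dask array is available as `.data`; xarray's own `map_blocks` passes xarray objects to the function and expects an xarray object back, so it is a different call from the dask array method used here.

```python
import dask.array as dsa
import numpy as np

# Hypothetical array with dimensions (member, time, y, x), one chunk per time step.
arr = dsa.ones((4, 10, 8, 8), chunks=(4, 1, 8, 8))

# Slice lazily, then copy each block so the result does not keep a view
# into a larger source block alive.
snapshot = arr[:, 3].map_blocks(np.copy)

# Average over the member axis.
mean = snapshot.mean(axis=0).compute()
print(mean.shape)
```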
