Overfitting #85


Hey Daniel, I recently figured out that it's not about how complex a model you use, but how you use it. Suppose you get a… Overfitting is a constant pain in the a** when we use transfer learning. So, …


Replies: 1 comment


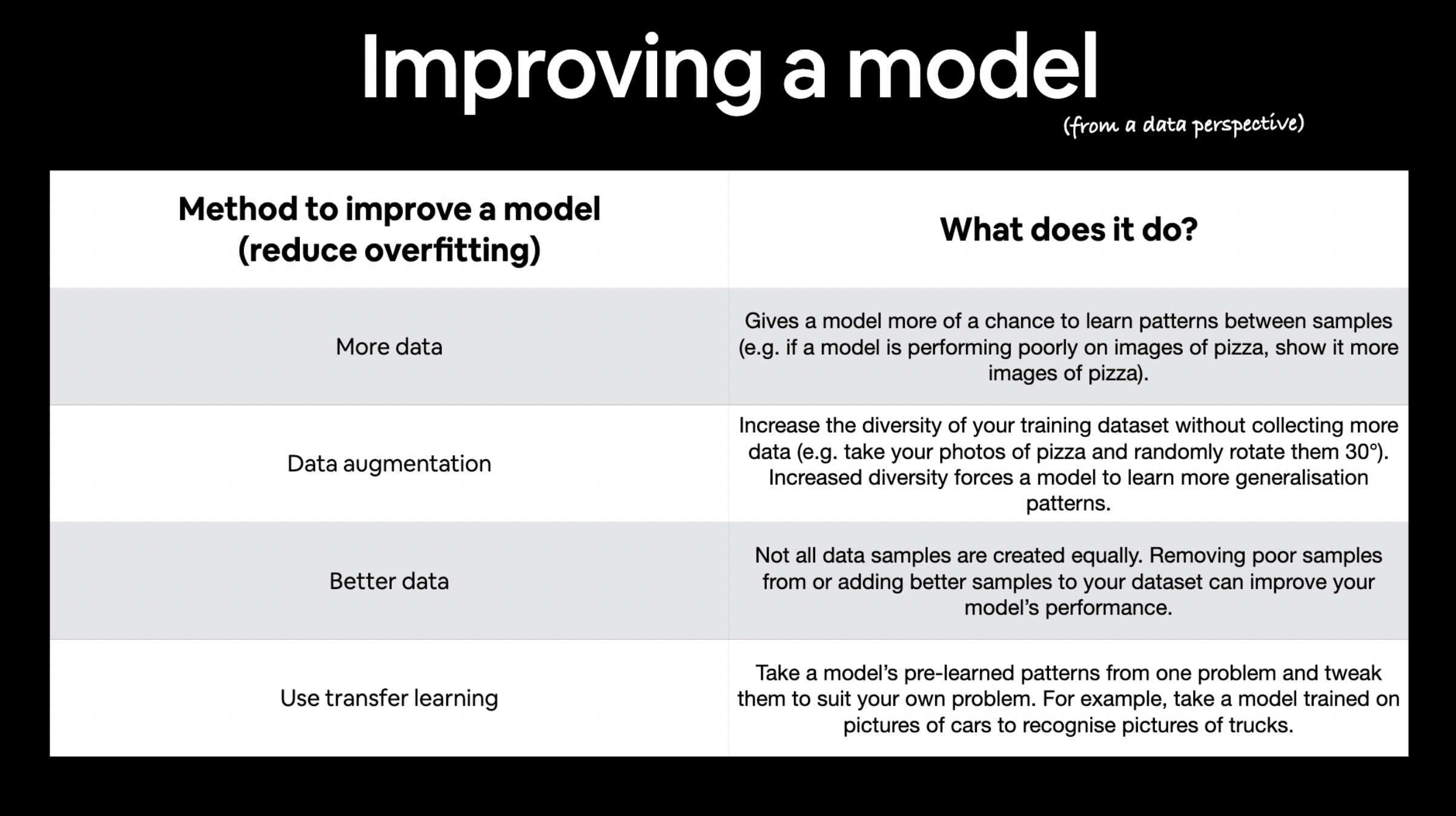

Hey Gaurav,

There are several different ways to approach overfitting (image taken from: 03 Convolutional Neural Networks Slides):

Dropout layers randomly drop a fraction of a layer's outputs during training (e.g. if a layer has 32 neurons and the dropout rate is 50%, on average only 16 of them pass their output to the next layer on any given training step). At inference time nothing is dropped.

The hope is that, because the network can't rely on any single connection, the remaining connections learn more robust features.

See this explanation for more: https://stats.stackexchange.com/questions/241645/how-to-explain-dropout-regularization-in-simple-terms

There's a dropout layer available in TensorFlow too: https://www.tensorflow.org/api_docs/python/tf/keras/layers/Dropout
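To make the 32-neuron example concrete, here's a rough sketch of what a dropout layer does to a layer's outputs. It's plain Python rather than TensorFlow, and the `dropout` helper and its parameters are made up for illustration (it is not the Keras implementation). It assumes the common "inverted dropout" variant, where the values that survive are scaled up by 1 / (1 - rate) so the expected sum stays the same.

```python
import random


def dropout(activations, rate, training=True, rng=None):
    """Illustrative inverted dropout on a list of floats (hypothetical helper)."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    # Dropout is only active during training; at inference it is a no-op.
    if not training or rate == 0.0:
        return list(activations)
    rng = rng or random.Random()
    # Scale the surviving values so the expected total is unchanged.
    keep_scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else a * keep_scale for a in activations]


# A layer with 32 outputs and a dropout rate of 50%.
layer_output = [1.0] * 32
dropped = dropout(layer_output, rate=0.5)
kept = sum(1 for a in dropped if a != 0.0)
print(f"{kept} of {len(layer_output)} outputs kept on this step")
```

The number kept changes from call to call and is only about 16 on average, since each output is dropped independently. In a real model you'd use the TensorFlow layer linked above instead of writing this yourself.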

Not always. I'd try reducing data augmentation or trying different kinds of augmentation. I'd look into methods such as RandAugment and AutoAugment (RandAugment seems to perform better in practice).

Other methods for reducing overfitting you might want to research (often referred to as regularization) include:

