What affects the number of trainable variables for fine-tuning when you unfreeze some top layers? #405


emrecicekyurt asked this question in Q&A


This article may help.


Hello everybody,

I've just run into something interesting: there are two ways to make a model's top layers trainable, and they give different results.

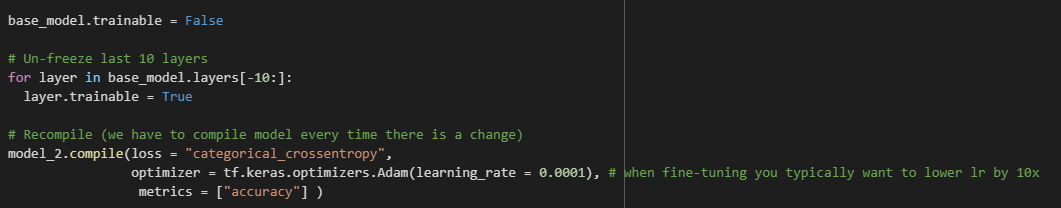

The first option sets `base_model.trainable = False` and then sets `trainable = True` on the top layers in a for loop. After compiling, this gives only 2 trainable variables, and the model ends up with lower accuracy.

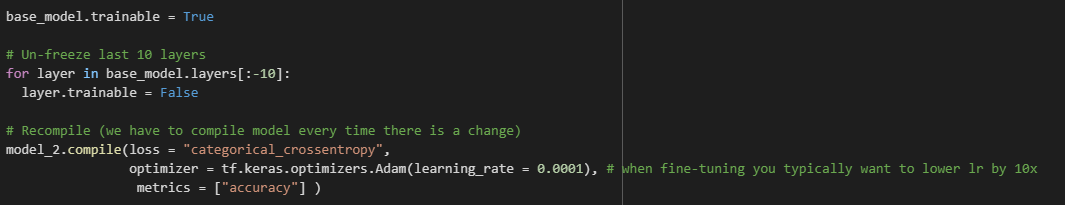

The second option sets `base_model.trainable = True` and then makes every layer except the top 10 untrainable in a for loop. After compiling, this gives 12 trainable variables, and the model gets better results after fitting.
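A minimal sketch that reproduces the comparison, assuming TensorFlow with `tf.keras`. The small Dense-only `build_base_model` is a hypothetical stand-in for the pretrained base, and only the top 2 layers are unfrozen instead of 10 to keep the numbers small. In `tf.keras` as shipped with TensorFlow 2.15 and earlier (Keras 2), a layer's `trainable_weights` is empty whenever that layer's own `trainable` flag is False, even if some of its sublayers are set back to True. Method 1 leaves `base_model.trainable` False, so the nested base model contributes nothing and only the head's kernel and bias (2 variables) are trainable. Method 2 leaves `base_model.trainable` True, so the unfrozen top layers' variables are counted as well. Keras 3 (the default `tf.keras` from TensorFlow 2.16) tracks trainability per variable, so method 1 may report a different count there; check `len(model.trainable_variables)` on your own version.

```python
import tensorflow as tf


def build_base_model():
    # Hypothetical stand-in for a pretrained base model: 4 Dense layers,
    # each with a kernel and a bias (8 variables in total).
    inputs = tf.keras.Input(shape=(8,))
    x = inputs
    for _ in range(4):
        x = tf.keras.layers.Dense(8, activation="relu")(x)
    return tf.keras.Model(inputs, x)


def build_full_model(base_model):
    # The base model is used as one nested layer, followed by a 1-unit head
    # (kernel + bias = 2 variables).
    inputs = tf.keras.Input(shape=(8,))
    x = base_model(inputs, training=False)
    outputs = tf.keras.layers.Dense(1)(x)
    model = tf.keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="mse")
    return model


def method_1(n_top=2):
    # Freeze the whole base model, then re-enable its top n_top layers.
    base_model = build_base_model()
    base_model.trainable = False
    for layer in base_model.layers[-n_top:]:
        layer.trainable = True
    return build_full_model(base_model)


def method_2(n_top=2):
    # Keep the base model trainable, then freeze everything below the top n_top layers.
    base_model = build_base_model()
    base_model.trainable = True
    for layer in base_model.layers[:-n_top]:
        layer.trainable = False
    return build_full_model(base_model)


n1 = len(method_1().trainable_variables)
n2 = len(method_2().trainable_variables)
print("method 1:", n1)
print("method 2:", n2)  # 6: two kernels and two biases from the base, plus the head's kernel and bias
```

With 10 unfrozen layers in a real pretrained base, the count for method 2 depends on how many variables those particular layers own (for example, a layer with only a kernel adds 1, a Dense layer with bias adds 2).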

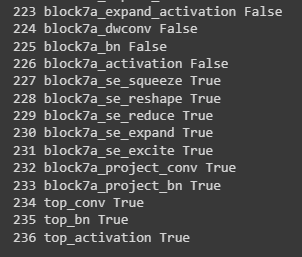

Trainable layers for both methods (only the top 10 layers are unfrozen)
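The per-layer flags can look identical for both methods while the flag on the base model itself differs; under `tf.keras` (Keras 2), that container flag decides whether the nested model contributes any trainable variables at all. A minimal sketch, assuming TensorFlow with `tf.keras` and a hypothetical 4-layer Dense stand-in for the base model, unfreezing the top 2 layers:

```python
import tensorflow as tf


def build_base_model():
    # Hypothetical stand-in for a pretrained base model: 4 Dense layers.
    inputs = tf.keras.Input(shape=(8,))
    x = inputs
    for _ in range(4):
        x = tf.keras.layers.Dense(8, activation="relu")(x)
    return tf.keras.Model(inputs, x)


# Method 1: freeze the whole base, then unfreeze its top 2 layers.
base_1 = build_base_model()
base_1.trainable = False
for layer in base_1.layers[-2:]:
    layer.trainable = True

# Method 2: unfreeze the whole base, then freeze all but its top 2 layers.
base_2 = build_base_model()
base_2.trainable = True
for layer in base_2.layers[:-2]:
    layer.trainable = False

# Compare flags only for layers that own weights (this skips the InputLayer).
flags_1 = [layer.trainable for layer in base_1.layers if layer.weights]
flags_2 = [layer.trainable for layer in base_2.layers if layer.weights]

print(flags_1)  # [False, False, True, True]
print(flags_2)  # [False, False, True, True]
print(base_1.trainable, base_2.trainable)  # False True
```

So listing `layer.trainable` for every layer of the base model is not enough on its own; checking `base_model.trainable` and `len(model.trainable_variables)` shows the difference.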
