Section 2 Exercise: Softmax clone doesn't work the same as TensorFlow's softmax #308

Raunak-Singh-Inventor asked this question in Q&A.

I tried the softmax clone given in the course repo, and it doesn't give the same results as TensorFlow's softmax.
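For reference, here is the standard softmax definition that a clone and `tf.keras.activations.softmax` should both compute. It assumes a 2-D input $x$ with softmax taken along the last axis (`axis=-1`), where $i$ indexes rows and $k$ runs over the entries of row $i$. Each row is normalized by its own sum. The second line shows that subtracting a per-row constant $c_i$ (for example, the row maximum) leaves the result unchanged:

$$
\operatorname{softmax}(x)_{ij} = \frac{e^{x_{ij}}}{\sum_{k} e^{x_{ik}}}
$$

$$
\frac{e^{x_{ij} - c_i}}{\sum_{k} e^{x_{ik} - c_i}} = \frac{e^{-c_i}\, e^{x_{ij}}}{e^{-c_i} \sum_{k} e^{x_{ik}}} = \frac{e^{x_{ij}}}{\sum_{k} e^{x_{ik}}}
$$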


Answered by mrdbourke on Jan 19, 2022.


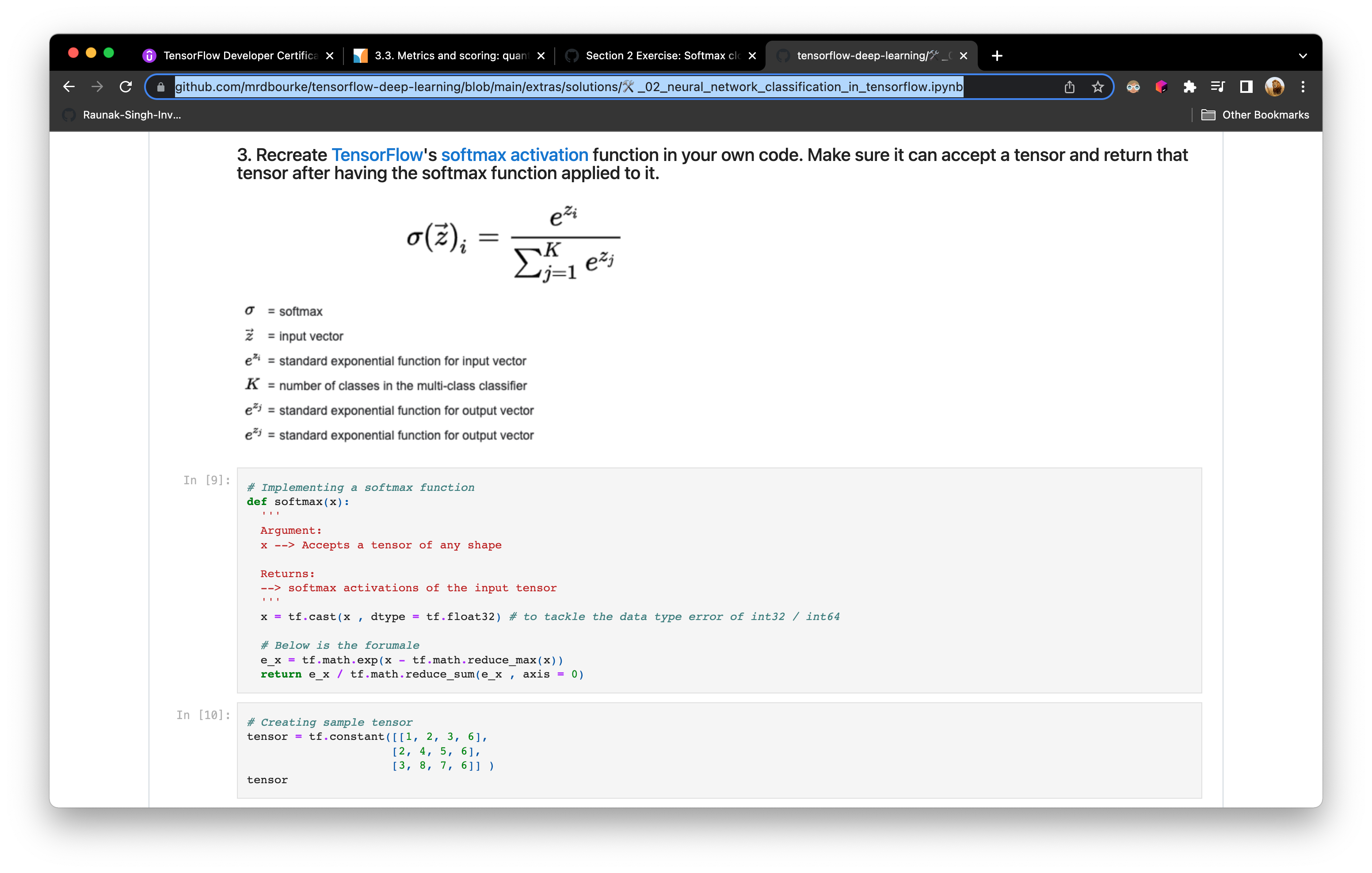

Hey @Raunak-Singh-Inventor,

I updated your code to reflect the correct solution, based on the TensorFlow docs here: https://www.tensorflow.org/api_docs/python/tf/nn/softmax

```python
import tensorflow as tf

print(tf.__version__)

# Create a sample tensor
tensor = tf.constant([[1, 2, 3, 6],
                      [2, 4, 5, 6],
                      [3, 8, 7, 6]], dtype=tf.float32)

print(f"input to softmax activation: {tensor[0, :]}")
print("--------------------------------------------------------------------------------------")

# Apply the softmax function to the input tensor (axis=-1 is the default)
outputs = tf.keras.activations.softmax(tensor, axis=-1)
print(f"output of softmax activation: {outputs[0, :]}")
print("--------------------------------------------------------------------------------------")
print(f"sum: {tf.reduce_sum(outputs[0, :])}")

# Replicate the functionality
def softmax_clone(x, axis=-1):
    # keepdims=True keeps the reduced axis (shape (3, 1) here), so each row
    # of exp(x) is divided by that row's own sum via broadcasting
    return tf.exp(x) / tf.reduce_sum(tf.exp(x), axis=axis, keepdims=True)  # <- updated this line

print(f"input to softmax activation: {tensor[0, :]}")
clone_outputs = softmax_clone(tensor)
print(f"output of softmax_clone activation: {clone_outputs[0, :]}")
print("--------------------------------------------------------------------------------------")
print(f"sum: {tf.reduce_sum(clone_outputs[0, :])}")
```

Do you have a link to where the code you posted came from? I can go back and fix it.
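The updated `softmax_clone` in the answer computes `exp(x) / sum(exp(x))` directly. That matches the built-in on small inputs like the sample tensor, but `exp` overflows for large values. A common remedy is to subtract each row's maximum before exponentiating, which does not change the result mathematically.

The sketch below uses NumPy instead of TensorFlow so it runs without TF installed. The function names `softmax_naive` and `softmax_stable` are hypothetical, chosen for illustration only.

```python
import numpy as np

# Same sample input as the TensorFlow example in the answer
tensor = np.array([[1, 2, 3, 6],
                   [2, 4, 5, 6],
                   [3, 8, 7, 6]], dtype=np.float32)


def softmax_naive(x, axis=-1):
    # Direct translation of softmax_clone: exp(x) / sum(exp(x)) along `axis`.
    # keepdims=True keeps the reduced axis (shape (3, 1) here) so the
    # division broadcasts across each row.
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def softmax_stable(x, axis=-1):
    # Subtract the per-row maximum so the largest exponent is exp(0) = 1;
    # np.exp then cannot overflow, and the shift cancels in the ratio.
    shifted = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / np.sum(e, axis=axis, keepdims=True)


print(softmax_stable(tensor)[0])            # first row of probabilities
print(softmax_stable(tensor).sum(axis=-1))  # each row sums to about 1

# On small inputs both versions agree
print(np.allclose(softmax_naive(tensor), softmax_stable(tensor), atol=1e-6))  # True

# On large inputs exp overflows to inf in float32, so inf / inf gives nan
big = tensor * 100.0
with np.errstate(over="ignore", invalid="ignore"):
    print(np.isnan(softmax_naive(big)).any())  # True
print(np.isnan(softmax_stable(big)).any())     # False
```

Translating the stable version back to TensorFlow would mean swapping in `tf.exp`, `tf.reduce_max` and `tf.reduce_sum`, each called with `keepdims=True`.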


Answer selected by mrdbourke.

