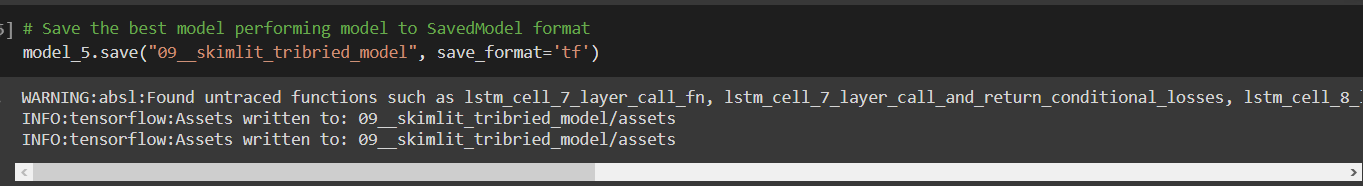

Cannot load the last model (model_5) in the Milestone project 2 Skimlit #170


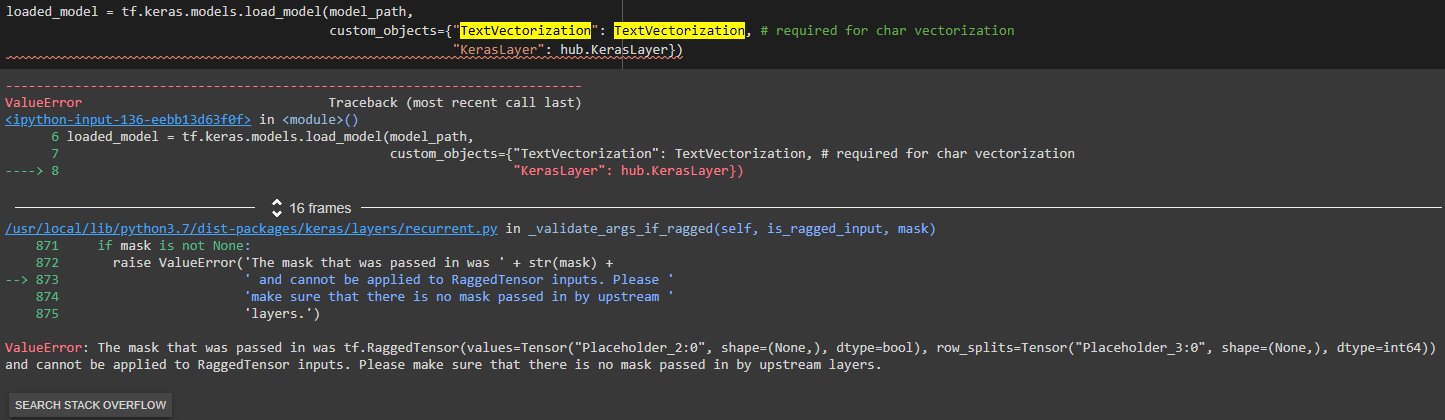

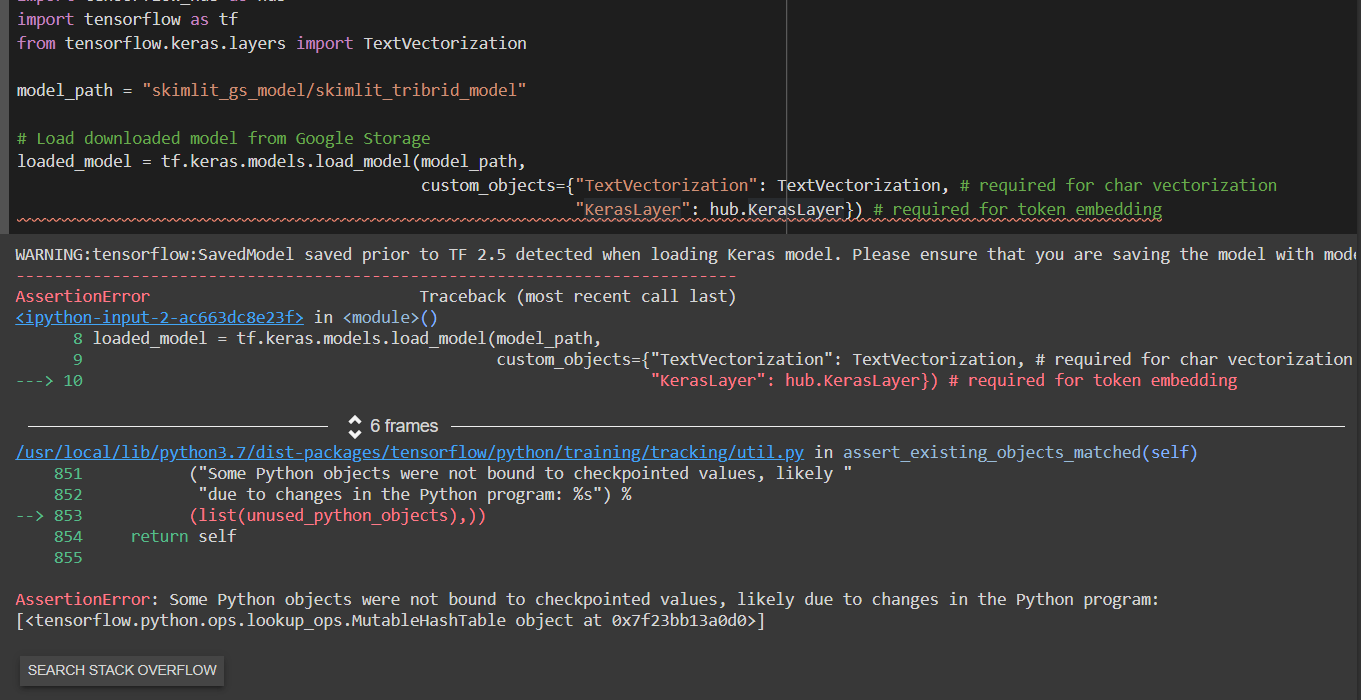

I've been stuck on these two errors for 2 days and still cannot load the model.

What I have tried:


Replies: 2 comments 3 replies


Hmm... I haven't seen either of those before. Thank you for uploading what you've tried so far. Has anything else worked? Or not worked?

I'll dig into the template notebook tomorrow and see what's going on - I'll post my findings here.

Update: Looks like it's a TensorFlow 2.5+ issue:

Will get deeper into this tomorrow. I think the model on GCP (the one downloaded into the notebook) doesn't contain the



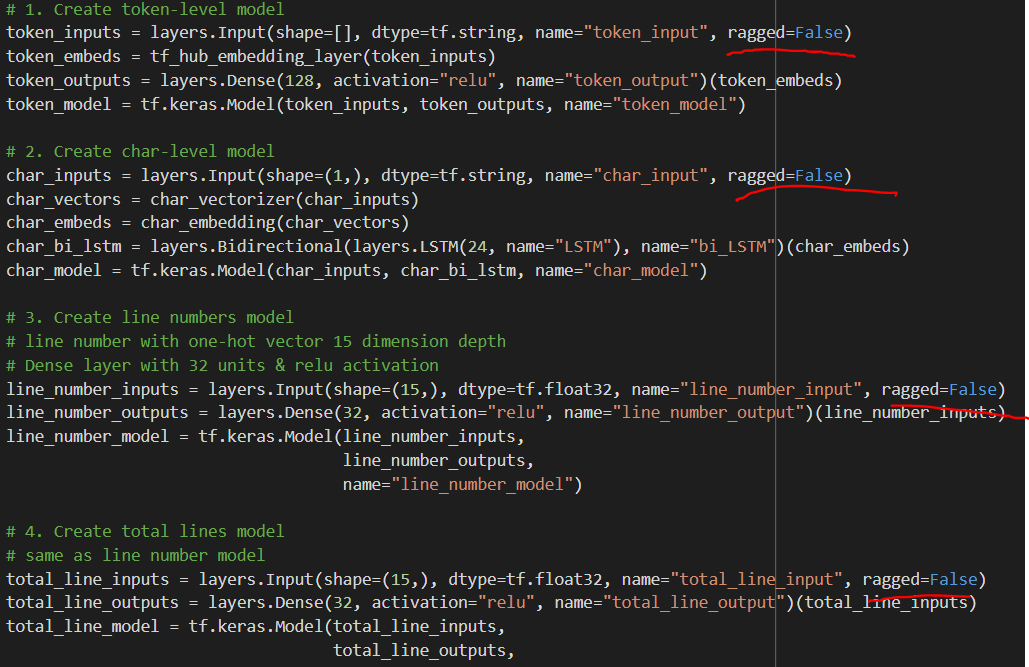

I've found a fix!

It looks like the masking was indeed the issue.

This error:

Turns out the masking happened in the `char_embed` creation code:

    # Create char embedding layer (OLD)
    char_embed = layers.Embedding(input_dim=NUM_CHAR_TOKENS, # number of different characters
                                  output_dim=25, # embedding dimension of each character (same as Figure 1 in https://arxiv.org/pdf/1612.05251.pdf)
                                  mask_zero=True, # <-- mask creation
                                  name="char_embed")

To remove the mask, I set `mask_zero=False`:

    # Create char embedding layer (NEW)
    char_embed = layers.Embedding(input_dim=NUM_CHAR_TOKENS, # number of different characters
                                  output_dim=25, # embedding dimension of each character (same as Figure 1 in https://arxiv.org/pdf/1612.05251.pdf)
                                  mask_zero=False, # don't use masks (this messes up model_5 if set to True)
                                  name="char_embed")

This resolves the loading and saving of the model.

Also, I've updated the model stored in Google Storage (same download URL as in the course) to include the new `keras_metadata.pb` file. With it, the `custom_objects` parameter is no longer needed when loading:

    # Old
    loaded_model = tf.keras.models.load_model("skimlit_gs_model/skimlit_tribrid_model",
                                              custom_objects={"TextVectorization": TextVectorization,
                                                              "KerasLayer": hub.KerasLayer})

    # New (with keras_metadata.pb, no custom_objects parameter)
    loaded_model = tf.keras.models.load_model("skimlit_gs_model/skimlit_tribrid_model")

I've updated the code in notebook 09 to reflect all of these changes - it should now run top to bottom.
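If you're not sure whether the copy of the model you downloaded is the updated one, a quick check like this may help you decide which of the two `load_model` calls to try. It's only a rough sketch: the helper name `has_keras_metadata` is made up for illustration (it isn't part of TensorFlow or the course code), and it assumes the model was unzipped to the same `skimlit_gs_model/skimlit_tribrid_model` path used in this thread.

```python
from pathlib import Path


def has_keras_metadata(model_dir: str) -> bool:
    """Return True if the SavedModel folder contains keras_metadata.pb.

    Hypothetical helper for illustration only, not a TensorFlow API.
    """
    return (Path(model_dir) / "keras_metadata.pb").is_file()


if __name__ == "__main__":
    # Assumed location: the path used in the load_model calls in this thread
    model_dir = "skimlit_gs_model/skimlit_tribrid_model"
    if has_keras_metadata(model_dir):
        print("keras_metadata.pb found: try load_model without custom_objects")
    else:
        print("keras_metadata.pb missing: re-download the model or pass custom_objects")
```

If the file is missing, you probably still have the older download, so re-running the download cell in the notebook is likely the simplest next step.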
