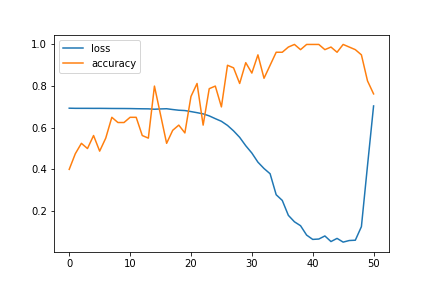

Hello everyone,

Something really strange happened that I can't explain. I ran this:

Well, truth is, it would go down shortly after (epochs=60), but still.


Replies: 1 comment 1 reply


Hey Lombiz,

Great question.

It looks like you've run into the exploding gradient problem.

In a nutshell, what happens is that a small error turns into a big error very quickly.

This often happens after a neural network finds a good set of patterns (also called weights) but then keeps training.

Think of it like doing a workout: you get to a certain point and you feel good afterwards. But if you keep going, you'll probably end up feeling tired afterwards.
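If it helps to see "a small error turns into a big error" in numbers, here's a toy sketch in plain Python. It is not your model: the one-weight loss `w**2`, the `train` helper, the learning rates and the `clip` value are all made up for illustration. Gradient clipping is included only as one commonly used mitigation, as an assumption on my part.

```python
def train(lr, steps, clip=None):
    """Run gradient descent on the toy loss w**2 and return the loss after each step.

    All names and values here are hypothetical, chosen only to illustrate the effect.
    """
    w = 1.0
    losses = []
    for _ in range(steps):
        grad = 2.0 * w  # derivative of w**2 with respect to w
        if clip is not None:
            # Clamp the gradient into [-clip, clip] before the update
            grad = max(-clip, min(clip, grad))
        w -= lr * grad
        losses.append(w * w)
    return losses


stable = train(lr=0.1, steps=20)             # small steps: the loss shrinks
exploding = train(lr=1.5, steps=20)          # steps too big: the loss grows every step
clipped = train(lr=1.5, steps=20, clip=0.5)  # same step size, gradient clamped

print("stable:   ", stable[-1])
print("exploding:", exploding[-1])
print("clipped:  ", clipped[-1])
```

With the larger learning rate each update overshoots the minimum by more than the last, so the loss keeps multiplying. With the gradient clamped, the loss in this toy stays bounded, although it bounces around rather than settling down.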

To fix this problem you can: