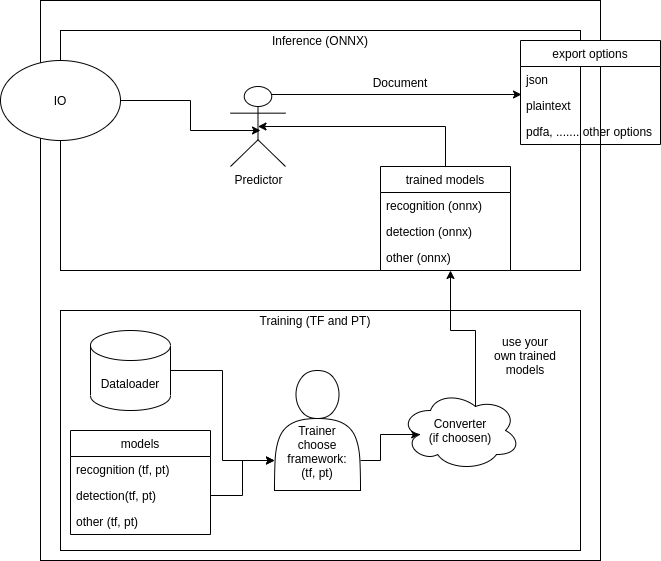

Splitting lib into train and inference (ONNX) #530


Hi @charlesmindee, I suggest splitting the lib into train and inference parts:

Advantages:

It would be nice to work on this during my upcoming vacation, if you want, before I work on adding any model ^^


Replies: 4 comments 6 replies


Thanks for the suggestion! ONNX is indeed on our radar since #5 😅 That being said, the general idea is also something we were planning to do. But that needs in-depth discussion first on a few points:

Looking forward to this discussion!


Actually, this is a long-term discussion that might be better suited to GitHub "Discussions". Let's continue over there!


@fg-mindee


@felixdittrich92 would you mind marking the relevant message as the answer for this discussion? It will help future visitors quickly identify the ins & outs of the topic :)


Hi @felixdittrich92

Thanks for the suggestion! ONNX is indeed on our radar since #5 😅

However, while ONNX should be one possible way to do inference, that should not close the door to others (OpenVINO, etc.)

That being said, the general idea is also something we were planning to do. But that needs in-depth discussion first on a few points:

Looking forward to this discussion!