Replies: 5 comments 11 replies

-

@Ipstenu this is absolutely brilliant. I just finished reading through the doc.

-

One aspect of such moderation that always concerns me is how to handle an individual node going rogue. If the node, not the aggregator, is solely responsible for moderation, then the only tool an aggregator has is to defederate from the node. This makes sense where there is obvious abuse, but how would the aggregator know? I'm wondering whether, at the same time as standardising a moderation layer at the node, we should be building moderation for the nodes themselves, with options beyond a simple binary of federated/not federated. This might be outside the scope of the current thinking, and it's also a problem with no simple answers. But until we have a way to trust the nodes, having the nodes police themselves is an open opportunity for abuse.

-

Thanks, this looks great! Second time writing a comment, as I think I misunderstood the first time! As I understand it now, the moderation system is a platform independent of the aggregator, so this proposal doesn't address an aggregator's obligations or policies on how far it acts on the moderation labels (e.g. outright blocking a package that is malware). I do have questions about how a FAIR aggregator might handle cases such as malware and phishing / name squatting, but I guess this isn't the place for them!

-

This is brilliant - THANK YOU!! I studied this as best as my poor brain can... I'm having a hard time understanding 3 things. If these should be obvious, I may not be the right audience for this. Non-coder here!

A 'shared accountable ecosystem' sounds like what we have. It depends on who we're accountable to. :) Accountability to the node's community doesn't help a regular user who searches in an Aggregator, unaware of the node's community.

As a user, I need to trust the labels. Do they come from one source? If I accidentally find a plugin on a node that does not align with my values/standards, how would I know that?

Advisors can be ignored.

Responsible to who? That needs to be some entity with enough power to serve up repercussions. Without a real and perceived downside, nodes will equal malware.

-

Update - I took comments (from here, the doc, social media) and made #14 so there's a grown-up PR. You can still comment here :) I'm watching!

-

Related to #11

TL;DR - Moderation as Infrastructure

The docs (and this post) are long out of necessity. There are a lot of moving parts involved. But to summarize, the goal of moderation is to provide a shared infrastructure that:

There's a link at the bottom. Be aware this is a first draft and needs work! Normally I'd share this next week, but given the discussions are running so fast, it seems sensible to do it now.

Intro

I’ve spent nearly two decades deeply involved in the WordPress ecosystem, including serving as the team representative for the WordPress.org Plugin Review team. That experience has given me a unique, front-line perspective on how the current centralized system works. I know its strengths, its limitations, and the real impact it has on developers, users, and the broader community. I’ve seen what happens when the burden of trust, safety, and scale falls on too few people, and I understand why things have evolved the way they have.

This proposal comes from that experience and my belief that we can build something better.

I want to create a federated plugin ecosystem where anyone can host and share code, with FAIR providing shared rules and transparent moderation to keep the system open, safe, and accountable.

Proposal: A Safer, Fairer Future for WordPress Plugins and Themes

The WordPress ecosystem thrives on openness and collaboration. The current system for managing plugins and themes depends on a small group of volunteers reviewing an overwhelming number of submissions. As the ecosystem grows, this model has become increasingly unsustainable.

Furthermore, the guidelines of the WordPress.org directories are prohibitive and limited by necessity. Guidelines like that are essential for legal protection and consistency at scale. By defining and enforcing their own clear participation rules, an Aggregator ensures that Developers and users alike benefit. All participants will know what standards are in place, what behaviour is expected, and how decisions are made.

We don't need a replacement for these policies, but a framework that allows multiple sets of guidelines to coexist. Each community or directory can define its own values while still participating in a shared, accountable ecosystem.

FAIR Protocol introduces a new, decentralized model: a federated network where anyone can operate a repository (called a Node) to host and share plugins or themes. This approach supports innovation, resilience, and community ownership. However, with that openness comes the need for shared standards and accountability.

FAIR would take on the role of an advisor, not a regulator. Instead of being the gatekeeper, a FAIR Working Group can act as a coordinating body that maintains technical guidelines, community norms, and tools for trust and transparency.

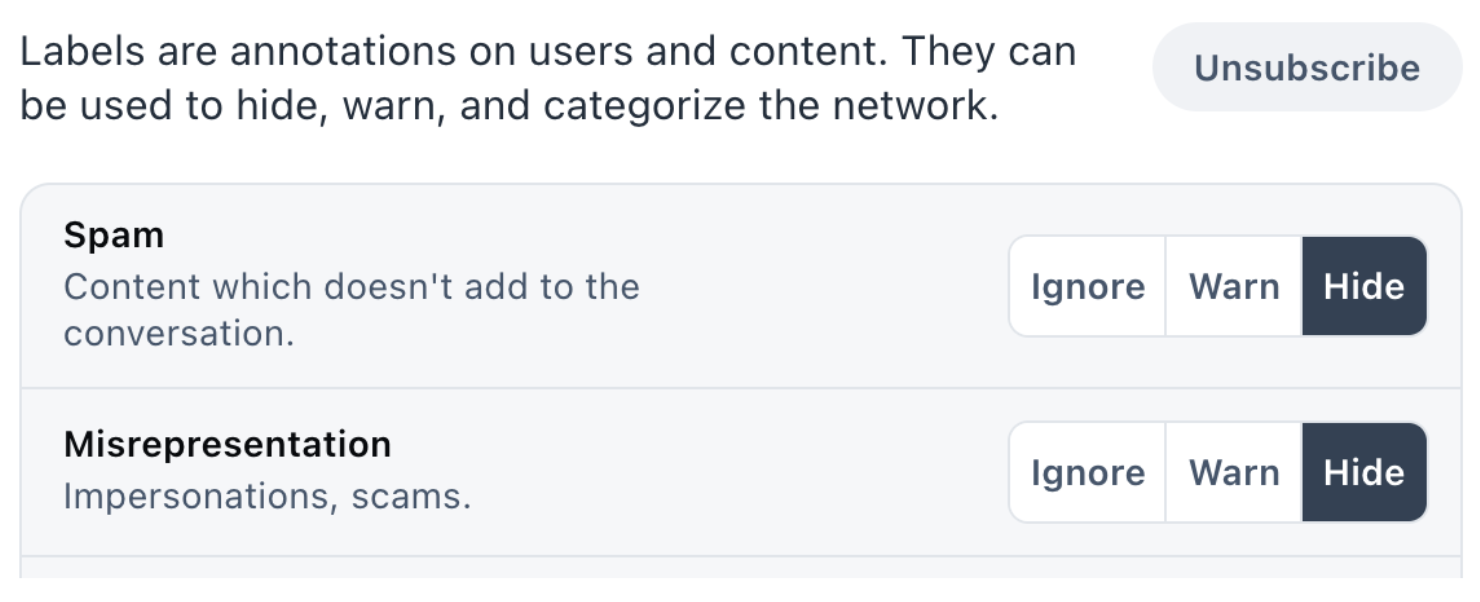

By using a decentralized moderation platform (I picked Ozone because it already works with AT Proto) we can apply clear, public labels such as “verified,” “deprecated,” or “security risk” to plugins, themes, and Nodes. Those labels won’t block access to content, but will allow users, Nodes, and Aggregators to make informed decisions based on shared trust signals.
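To make the "labels inform, they don't block" idea concrete, here is a minimal sketch. It is not Ozone's API or anything from the draft doc: the `Label` fields, the `decide` function, the action names, and the example policies are all hypothetical. The only point it illustrates is that the same labels can lead to different outcomes depending on the policy each user, Node, or Aggregator chooses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Label:
    subject: str  # hypothetical identifier for a plugin, theme, or Node
    value: str    # e.g. "verified", "deprecated", "security risk"
    source: str   # hypothetical name of the labeler that applied it


# Severity order is an assumption; each consumer could rank actions differently.
ACTION_SEVERITY = {"allow": 0, "warn": 1, "hide": 2}


def decide(subject: str, labels: list[Label], policy: dict[str, str]) -> str:
    """Return the strictest action this consumer's policy assigns to the subject.

    Labels with no entry in the policy are ignored, so a label on its own
    never blocks anything.
    """
    actions = [
        policy[label.value]
        for label in labels
        if label.subject == subject and label.value in policy
    ]
    return max(actions, key=ACTION_SEVERITY.__getitem__, default="allow")


labels = [
    Label("example-plugin", "deprecated", "example-labeler"),
    Label("example-plugin", "security risk", "example-labeler"),
]

cautious_policy = {"deprecated": "warn", "security risk": "hide"}
permissive_policy = {"security risk": "warn"}

print(decide("example-plugin", labels, cautious_policy))    # hide
print(decide("example-plugin", labels, permissive_policy))  # warn
print(decide("example-plugin", labels, {}))                 # allow
```

An Aggregator that wants to block known malware outright would simply choose a stricter policy; the labels themselves stay the same for everyone.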

We can also implement a threshold-based reporting system, where plugins or Nodes flagged by a large portion of their users will trigger reviews by their hosts (plugins alert Nodes, Nodes alert Aggregators, and so on). Anyone affected by these actions will have access to an appeals process, and all moderation actions will be logged transparently.
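A rough sketch of how threshold-based reporting could work, under stated assumptions: the 25% threshold is made up (the proposal says only "a large portion"), and `ReportTracker`, its fields, and the log format are hypothetical names for illustration. It counts each reporting user once, logs every action, and requests a review from the next level up once the share of reporting users reaches the threshold.

```python
from dataclasses import dataclass, field

# Who reviews what, per the post: plugins alert Nodes, Nodes alert Aggregators.
REVIEWER = {"plugin": "node", "node": "aggregator"}


@dataclass
class ReportTracker:
    kind: str                 # "plugin" or "node"
    user_count: int           # total users of the subject (assumed known and > 0)
    threshold: float = 0.25   # assumed value; the post says only "a large portion"
    reporters: set[str] = field(default_factory=set)
    log: list[str] = field(default_factory=list)  # transparent action log
    review_requested: bool = False

    def report(self, user_id: str) -> bool:
        """Record a report and return True if it triggers a review."""
        if user_id in self.reporters:
            return False  # count each user once
        self.reporters.add(user_id)
        self.log.append(f"report from {user_id}")
        share = len(self.reporters) / self.user_count
        if not self.review_requested and share >= self.threshold:
            self.review_requested = True
            self.log.append(f"review requested from {REVIEWER[self.kind]}")
            return True
        return False


tracker = ReportTracker(kind="plugin", user_count=8)
print(tracker.report("user-a"))  # False: 1/8 is below the threshold
print(tracker.report("user-a"))  # False: duplicate report is ignored
print(tracker.report("user-b"))  # True: 2/8 reaches 0.25
print(tracker.log[-1])           # review requested from node
```

Using a share of users instead of a raw count keeps small plugins and large ones on the same footing, though a real system would also need to guard against coordinated false reports, which this sketch does not attempt.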

In this future model:

This system will preserve the freedom and diversity of the WordPress community while establishing practical tools to manage abuse, misinformation, and harmful code. It will give us all the tools we need to build a plugin ecosystem that is open and also safe, fair, and future-ready.

Call to Action

I’m asking you to read through the document I linked to below, question the assumptions, spot what’s missing, and share your perspective.

https://docs.google.com/document/d/1-cy26-XViofGlYaWcZ8NRS7vonJlM5boqnq8DtKOC-I/edit?usp=sharing

(Everyone should be able to suggest changes and comment; if not, tell me in a reply here!)

Leave comments in the docs, ask questions here. Let’s do this!

PR: #14

Leave comments and questions here, critique the PR. Let's do this thing!
