---
sidebar_position: 1
---

# MediaPipe solutions

MediaPipe is a collection of popular AI models developed by Google for intelligent processing of media files
and streams. The `mediapipe-rs` crate is a Rust library for processing data with the MediaPipe suite of models. It provides
Rust APIs to pre-process the data in media files or streams, run AI model inference to analyze the data, and then post-process
or manipulate the media data based on the AI output.

## Prerequisites

Besides the [regular WasmEdge and Rust requirements](../../rust/setup.md), please make sure that you have the [WASI-NN plugin with TensorFlow Lite installed](../../../start/install.md#wasi-nn-plug-in-with-tensorflow-lite-backend).

## Quick start

Clone the following demo project to your local computer or development environment.

```bash
git clone https://github.com/juntao/demo-object-detection
cd demo-object-detection/
```

Build the inference application, which uses the MediaPipe object detection model, and then compile the resulting Wasm file ahead of time (AOT) with `wasmedge compile` for better performance.

```bash
cargo build --target wasm32-wasi --release
wasmedge compile target/wasm32-wasi/release/demo-object-detection.wasm demo-object-detection.wasm
```

Run the inference application against an image. The input `example.jpg` image is shown below.

```bash
wasmedge --dir .:. demo-object-detection.wasm example.jpg output.jpg
```

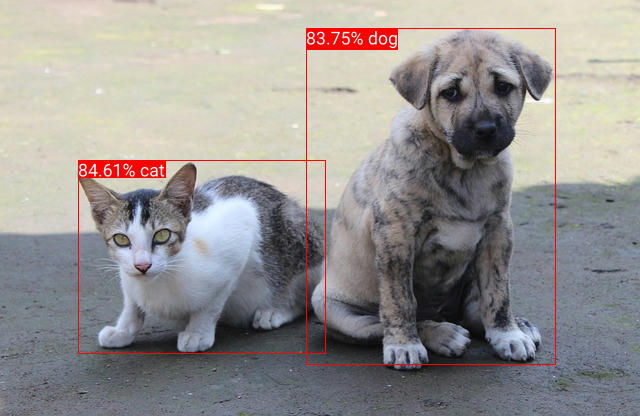

The inference result `output.jpg` image is shown below.

The console output from the above inference command shows the detected objects, their bounding boxes, and their scores.

```text
DetectionResult:
  Detection #0:
    Box: (left: 0.47665566, top: 0.05484602, right: 0.87270254, bottom: 0.87143743)
    Category #0:
      Category name: "dog"
      Display name: None
      Score: 0.7421875
      Index: 18
  Detection #1:
    Box: (left: 0.12402746, top: 0.37931007, right: 0.5297544, bottom: 0.8517805)
    Category #0:
      Category name: "cat"
      Display name: None
      Score: 0.7421875
      Index: 17
```
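The `Box` values in this output all lie between 0 and 1, which suggests they are normalized relative to the image width and height. Assuming that, the std-only sketch below converts such a box into pixel coordinates. The `NormalizedBox` and `PixelRect` types and the `to_pixels` function are hypothetical illustrations, not part of the `mediapipe-rs` API, and the 640 x 480 image size is made up.

```rust
/// Hypothetical mirror of the `Box` fields printed above; not a mediapipe-rs type.
#[derive(Debug, Clone, Copy)]
struct NormalizedBox {
    left: f32,
    top: f32,
    right: f32,
    bottom: f32,
}

/// Pixel-space rectangle as (x_min, y_min, x_max, y_max).
#[derive(Debug, PartialEq)]
struct PixelRect(u32, u32, u32, u32);

/// Scales a normalized box to an image of the given size, clamping each
/// coordinate into [0, 1] first so the result cannot fall outside the image.
fn to_pixels(b: NormalizedBox, width: u32, height: u32) -> PixelRect {
    let scale = |v: f32, size: u32| (v.clamp(0.0, 1.0) * size as f32).round() as u32;
    PixelRect(
        scale(b.left, width),
        scale(b.top, height),
        scale(b.right, width),
        scale(b.bottom, height),
    )
}

fn main() {
    // Box of Detection #0 ("dog") from the console output above.
    let dog = NormalizedBox {
        left: 0.47665566,
        top: 0.05484602,
        right: 0.87270254,
        bottom: 0.87143743,
    };
    // Assumed 640 x 480 image, for illustration only.
    println!("{:?}", to_pixels(dog, 640, 480));
}
```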

## Understand the code

The [main.rs](https://github.com/juntao/demo-object-detection/blob/main/src/main.rs) file contains the complete example Rust source.
All `mediapipe-rs` APIs follow a common pattern: each model type has a Rust struct designed to work with it, and that struct contains the functions
required to pre- and post-process data for the model. For example, we can create a `detector` instance
using the builder pattern, which can build from any "object detection" model in the MediaPipe model library.

```rust
// Embed the TensorFlow Lite model file into the Wasm binary at compile time.
let model_data: &[u8] = include_bytes!("mobilenetv2_ssd_256_uint8.tflite");
// Return at most 2 detections, then build the detector from the model bytes.
let detector = ObjectDetectorBuilder::new()
    .max_results(2)
    .build_from_buffer(model_data)?;
```
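To illustrate the builder pattern itself, here is a std-only sketch of a builder that collects an optional `max_results` setting and validates its input when `build_from_buffer` is called. `DetectorBuilder` and `Detector` are hypothetical types, not the real `ObjectDetectorBuilder`; in particular, this sketch only records the buffer length instead of loading a TensorFlow Lite model.

```rust
/// Hypothetical builder, loosely modeled on the `ObjectDetectorBuilder` calls above.
#[derive(Debug, Default)]
struct DetectorBuilder {
    max_results: Option<usize>,
}

/// Hypothetical detector holding the resolved options.
#[derive(Debug)]
struct Detector {
    max_results: usize,
    model_len: usize,
}

impl DetectorBuilder {
    fn new() -> Self {
        Self::default()
    }

    /// Setters take `self` by value and return it, so calls can be chained.
    fn max_results(mut self, n: usize) -> Self {
        self.max_results = Some(n);
        self
    }

    /// Validates the input and fills in defaults for unset options.
    /// A real implementation would parse and load the model here.
    fn build_from_buffer(self, model_data: &[u8]) -> Result<Detector, String> {
        if model_data.is_empty() {
            return Err("model buffer is empty".to_string());
        }
        Ok(Detector {
            // No limit unless the caller asked for one.
            max_results: self.max_results.unwrap_or(usize::MAX),
            model_len: model_data.len(),
        })
    }
}

fn main() -> Result<(), String> {
    // Placeholder bytes standing in for a real .tflite model file.
    let fake_model = [0u8; 16];
    let detector = DetectorBuilder::new()
        .max_results(2)
        .build_from_buffer(&fake_model)?;
    println!("{:?}", detector);
    Ok(())
}
```

Because each setter consumes and returns the builder, options can be chained in any order, and defaults for unset options are resolved in one place at build time.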

The `detect()` function takes an image, pre-processes it into a tensor array, runs inference on the MediaPipe object detection model,
and then post-processes the returned tensor array into a human-readable format stored in `detection_result`.

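```rust
// Load the input image; it is `mut` because detections are drawn onto it later.
let mut input_img = image::open(img_path)?;
// Pre-process, run inference, and post-process in a single call.
let detection_result = detector.detect(&input_img)?;
// Print the formatted result, as shown in the console output above.
println!("{}", detection_result);
```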
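As a rough illustration of the post-processing step, the sketch below turns raw per-category scores into a sorted list of at most `max_results` labeled results, similar in spirit to the `max_results(2)` setting above. The `Category` struct, the `top_k` function, the threshold, and the label list are hypothetical; the actual `mediapipe-rs` post-processing also decodes bounding boxes, which this sketch does not attempt.

```rust
/// Hypothetical labeled result; field names mirror the console output above.
#[derive(Debug, PartialEq)]
struct Category {
    index: usize,
    name: String,
    score: f32,
}

/// Keeps the `max_results` highest-scoring categories whose score is at least
/// `threshold`, sorted from highest to lowest score.
fn top_k(scores: &[f32], labels: &[&str], max_results: usize, threshold: f32) -> Vec<Category> {
    let mut results: Vec<Category> = scores
        .iter()
        .enumerate()
        .filter(|&(_, &s)| s >= threshold)
        .map(|(i, &s)| Category {
            index: i,
            // Fall back to a placeholder if the label list is shorter than the scores.
            name: labels.get(i).copied().unwrap_or("unknown").to_string(),
            score: s,
        })
        .collect();
    // Sort descending by score; `total_cmp` gives a total order even with NaN values.
    results.sort_by(|a, b| b.score.total_cmp(&a.score));
    results.truncate(max_results);
    results
}

fn main() {
    // Made-up scores for a hypothetical four-label model.
    let labels = ["person", "bicycle", "cat", "dog"];
    let scores: [f32; 4] = [0.10, 0.05, 0.74, 0.81];
    for c in top_k(&scores, &labels, 2, 0.5) {
        println!("{} (index {}): {:.2}", c.name, c.index, c.score);
    }
}
```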

Furthermore, the `mediapipe-rs` crate provides additional utility functions to post-process the data. For example,
the `draw_detection()` utility function draws the data in `detection_result` onto the input image.

```rust
// Draw the detection results onto the input image in place.
draw_detection(&mut input_img, &detection_result);
// Save the annotated image (`output.jpg` in the run command above).
input_img.save(output_path)?;
```
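`draw_detection()` handles the drawing for you. To show roughly what drawing one detection box involves, here is a std-only sketch that draws a rectangle outline into a raw RGB8 pixel buffer. The `draw_rect` function and its buffer layout (row-major, 3 bytes per pixel) are assumptions for illustration and do not describe how `draw_detection()` is actually implemented.

```rust
/// Draws a 1-pixel rectangle outline with inclusive corners (x0, y0) and (x1, y1)
/// into a row-major RGB8 buffer of `width * height * 3` bytes.
/// Pixels outside the image are skipped.
fn draw_rect(
    buf: &mut [u8],
    width: u32,
    height: u32,
    (x0, y0, x1, y1): (u32, u32, u32, u32),
    color: [u8; 3],
) {
    let mut put = |x: u32, y: u32| {
        if x < width && y < height {
            let i = (y as usize * width as usize + x as usize) * 3;
            buf[i..i + 3].copy_from_slice(&color);
        }
    };
    for x in x0..=x1 {
        put(x, y0); // top edge
        put(x, y1); // bottom edge
    }
    for y in y0..=y1 {
        put(x0, y); // left edge
        put(x1, y); // right edge
    }
}

fn main() {
    let (w, h) = (8u32, 6u32);
    let mut img = vec![0u8; (w * h * 3) as usize];
    draw_rect(&mut img, w, h, (1, 1, 5, 4), [255, 0, 0]);
    // Print the red channel as ASCII: '#' marks outline pixels.
    for y in 0..h {
        let row: String = (0..w)
            .map(|x| if img[((y * w + x) * 3) as usize] == 255 { '#' } else { '.' })
            .collect();
        println!("{row}");
    }
}
```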

## Available MediaPipe models

- `AudioClassifierBuilder` builds from an audio classification model and uses `classify()` to process audio data. [See an example](https://github.com/WasmEdge/mediapipe-rs/blob/main/examples/audio_classification.rs).
- `GestureRecognizerBuilder` builds from a hand gesture recognition model and uses `recognize()` to process image data. [See an example](https://github.com/WasmEdge/mediapipe-rs/blob/main/examples/gesture_recognition.rs).
- `ImageClassifierBuilder` builds from an image classification model and uses `classify()` to process image data. [See an example](https://github.com/WasmEdge/mediapipe-rs/blob/main/examples/image_classification.rs).
- `ImageEmbedderBuilder` builds from an image embedding model and uses `embed()` to compute a vector representation (embedding) for an input image. [See an example](https://github.com/WasmEdge/mediapipe-rs/blob/main/examples/image_embedding.rs).
- `ObjectDetectorBuilder` builds from an object detection model and uses `detect()` to process image data. [See an example](https://github.com/WasmEdge/mediapipe-rs/blob/main/examples/object_detection.rs).
- `TextClassifierBuilder` builds from a text classification model and uses `classify()` to process text data. [See an example](https://github.com/WasmEdge/mediapipe-rs/blob/main/examples/text_classification.rs).

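Embedding vectors such as those produced by `embed()` are usually compared with a similarity measure, and cosine similarity is a common choice. The std-only sketch below computes it for two `f32` slices. `cosine_similarity` is a generic, hypothetical helper written for illustration, not a `mediapipe-rs` API, and the short example vectors are made up.

```rust
/// Cosine similarity of two equal-length vectors: dot(a, b) / (|a| * |b|).
/// Returns `None` if the lengths differ or either vector has zero norm.
fn cosine_similarity(a: &[f32], b: &[f32]) -> Option<f32> {
    if a.len() != b.len() {
        return None;
    }
    let dot: f32 = a.iter().zip(b).map(|(x, y)| x * y).sum();
    let norm_a = a.iter().map(|x| x * x).sum::<f32>().sqrt();
    let norm_b = b.iter().map(|x| x * x).sum::<f32>().sqrt();
    if norm_a == 0.0 || norm_b == 0.0 {
        return None;
    }
    Some(dot / (norm_a * norm_b))
}

fn main() {
    // Made-up 4-dimensional embeddings; real image embeddings are much longer.
    let e1: [f32; 4] = [0.1, 0.3, 0.5, 0.1];
    let e2: [f32; 4] = [0.2, 0.6, 1.0, 0.2]; // same direction as e1
    let e3: [f32; 4] = [0.5, -0.2, 0.0, 0.4];
    println!("{:?}", cosine_similarity(&e1, &e2));
    println!("{:?}", cosine_similarity(&e1, &e3));
}
```

A value near 1.0 means the two vectors point in nearly the same direction, so under this measure the corresponding images would be treated as similar.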