
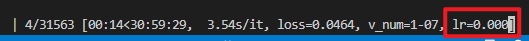

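Hello, when I resume training from a checkpoint, why does the learning rate stay at zero?

train.py:

```python
import pytorch_lightning as pl
from nemo.collections.nlp.models import TokenClassificationModel
from nemo.collections.nlp.parts.nlp_overrides import NLPDDPPlugin
from nemo.utils.exp_manager import exp_manager

# `cfg` is the Hydra/OmegaConf config loaded from config.yaml
checkpoint_path = '2.ckpt'
cfg.trainer.resume_from_checkpoint = checkpoint_path

trainer = pl.Trainer(plugins=[NLPDDPPlugin()], **cfg.trainer)
exp_manager(trainer, cfg.get("exp_manager", None))

model = TokenClassificationModel(cfg.model, trainer=trainer)
model.setup_loss(class_balancing=cfg.model.dataset.class_balancing)

trainer.fit(model)
```

Subset of config.yaml: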


Answered by titu1994, Oct 13, 2022


You should use `exp_manager` to resume training.


Answer selected by asr-pub
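A minimal sketch of what handing resumption over to `exp_manager` could look like, assuming NeMo's `exp_manager` config keys `resume_if_exists` and `resume_ignore_no_checkpoint` (check them against your NeMo version). The helper `use_exp_manager_resume` is hypothetical and operates on a plain dict standing in for the Hydra config: it stops passing `trainer.resume_from_checkpoint` to `pl.Trainer` and instead asks `exp_manager` to pick up the last checkpoint from its own log directory.

```python
import copy


def use_exp_manager_resume(cfg: dict) -> dict:
    """Hypothetical helper: hand checkpoint resumption over to exp_manager.

    `cfg` is a plain dict standing in for the Hydra/OmegaConf config.
    The exp_manager keys set below are assumed from NeMo's exp_manager config.
    """
    new_cfg = copy.deepcopy(cfg)  # leave the caller's config untouched
    # Stop passing an explicit checkpoint path to pl.Trainer.
    new_cfg.setdefault("trainer", {}).pop("resume_from_checkpoint", None)
    em = new_cfg.get("exp_manager") or {}  # exp_manager section may be None
    # Ask exp_manager to resume from the last checkpoint in its log directory...
    em["resume_if_exists"] = True
    # ...and to start fresh instead of raising if no checkpoint is found there.
    em["resume_ignore_no_checkpoint"] = True
    new_cfg["exp_manager"] = em
    return new_cfg


cfg = {
    "trainer": {"max_epochs": 5, "resume_from_checkpoint": "2.ckpt"},
    "exp_manager": {"name": "token_classification"},
}
print(use_exp_manager_resume(cfg))
```

In train.py the equivalent would be setting these two keys on `cfg.exp_manager` and leaving `cfg.trainer.resume_from_checkpoint` unset before calling `exp_manager(trainer, ...)`. With this approach the checkpoint is expected to live in the directory `exp_manager` writes to, not at an arbitrary path such as `2.ckpt`.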


FYI @MaximumEntropy
