

RNNT always uses quite a bit of memory; our large models take around 32 GB to train on V100s at batch size 8 with FP32. FP16 lets you increase the batch size slightly. I've never gotten a CUDA kernel timeout, so I don't know how to fix that. Maybe it's a one-off. As for the actual question, the first thing to ask is: are you using cuDNN autotuning? If so, disable it. It uses more memory for ASR models and can cause inconsistency between runs if a different kernel is picked.
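For reference, the autotuning switch in plain PyTorch is `torch.backends.cudnn.benchmark`. A minimal sketch of turning it off before the model is built follows. Where this line belongs in a particular training script is an assumption, and if the trainer is constructed from a config, its own `benchmark` option is probably the place to check instead.

```python
import torch

# Disable cuDNN autotuning. With variable-length inputs (typical for ASR),
# autotuning can re-benchmark kernels for each new input shape, which costs
# extra memory and may select different kernels from one run to the next.
torch.backends.cudnn.benchmark = False

# Confirm the setting that will be used for this session.
print("cuDNN benchmark enabled:", torch.backends.cudnn.benchmark)
```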


@VahidooX if you have some ideas, can you suggest any?


Thanks for the info. Makes sense that RNNT would be expensive. As for cudnn autotuning, is torch.backends.cudnn.benchmark the setting you're referring to? If so, this: gives me

I'm also getting NaN with these runs after a while, but the config documentation has some things to try tweaking for that. I think I also read that FP16 (which I'm running) can cause this?
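On the FP16 point: half precision tops out at 65504, so large intermediate values can overflow to infinity, and arithmetic on infinities then yields NaN. The sketch below uses only the Python standard library to illustrate the range limit. It is not taken from NeMo, and `fits_in_fp16` is a hypothetical helper name. Tensor libraries usually produce `inf` on such an overflow rather than raising an error as `struct` does.

```python
import math
import struct

FP16_MAX = 65504.0  # largest finite IEEE 754 half-precision value


def fits_in_fp16(value: float) -> bool:
    """Return True if `value` can be packed as a finite half-precision float."""
    try:
        struct.pack("<e", value)  # "e" is the half-precision format code
        return True
    except OverflowError:
        return False


print("65504 fits:", fits_in_fp16(FP16_MAX))
print("70000 fits:", fits_in_fp16(70000.0))

# Once a value has overflowed to infinity, ordinary arithmetic can yield NaN.
overflowed = float("inf")
print("inf - inf is NaN:", math.isnan(overflowed - overflowed))
```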


I was trying out training with conformer_transducer_bpe_streaming.yaml on LibriSpeech. I'm running the default settings except with batch size 8 (with accumulate_grad_batches set to 2 to compensate) and fused_batch_size 4 so that it doesn't OOM. (It seems weird that this would be necessary on a 24GB card, but that's beside the point.)
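To spell out the compensation, here is a small sketch of the effective batch size per optimizer step. `effective_batch_size` and `num_devices` are hypothetical names, and a single GPU is assumed. fused_batch_size is left out on the assumption that it only controls how the joint and loss computation is chunked for memory, not how many samples feed each optimizer step.

```python
def effective_batch_size(batch_size: int,
                         accumulate_grad_batches: int,
                         num_devices: int = 1) -> int:
    """Samples contributing to one optimizer step."""
    return batch_size * accumulate_grad_batches * num_devices


# Settings from this post: batch size 8, accumulate_grad_batches 2, one GPU assumed.
print(effective_batch_size(batch_size=8, accumulate_grad_batches=2))
```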

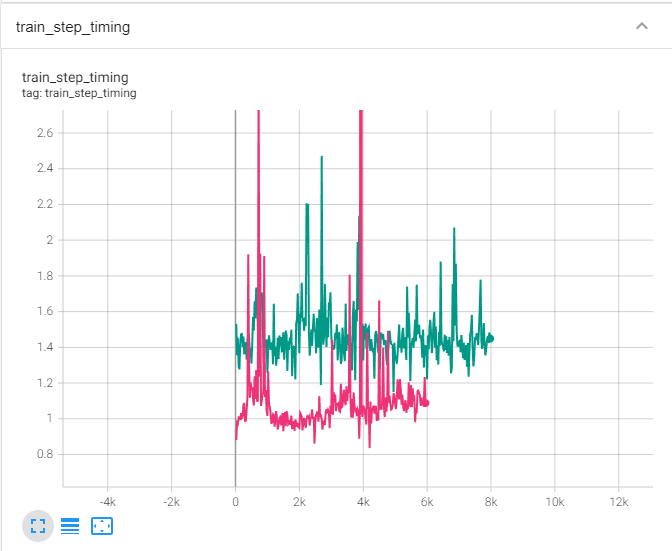

My first run failed after about 6k steps due to some CUDA kernel timeout error (which is probably another bug, but that's also beside the point). I was hitting about 2 it/s (or 1 it/s in terms of the effective batch). My second run has been ~1.24 it/s (0.62 it/s effective batch) consistently. I changed nothing the second time around. Does anyone know why someone would see a sudden decrease in performance between runs with no changes to anything else and no external programs running?
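For scale, the gap between the two runs can be worked out from the numbers above. This is plain arithmetic on the reported rates; the function name is hypothetical.

```python
def effective_rate(iters_per_sec: float, accumulate_grad_batches: int) -> float:
    """Optimizer steps per second, given raw iterations per second."""
    return iters_per_sec / accumulate_grad_batches


run1_its = 2.0   # first run, raw it/s
run2_its = 1.24  # second run, raw it/s
accumulate = 2   # accumulate_grad_batches

run1_eff = effective_rate(run1_its, accumulate)
run2_eff = effective_rate(run2_its, accumulate)

# Fractional drop in throughput and the time spent per raw iteration.
slowdown = 1.0 - run2_its / run1_its
print(f"effective steps/s: {run1_eff:.2f} vs {run2_eff:.2f}")
print(f"throughput drop: {slowdown:.0%}")
print(f"seconds per iteration: {1 / run1_its:.3f} vs {1 / run2_its:.3f}")
```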

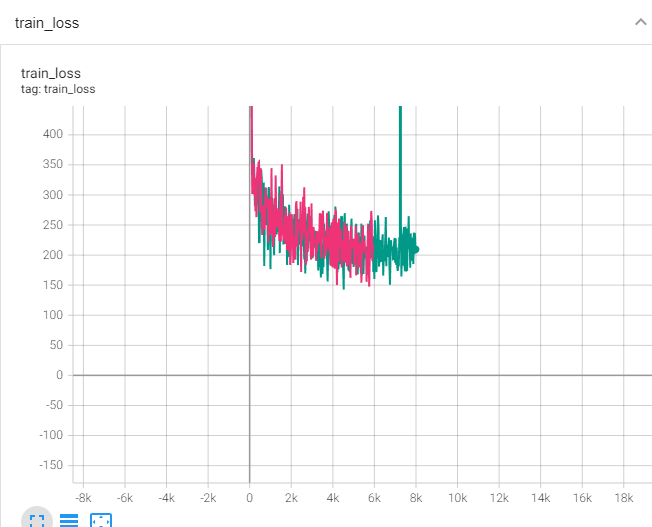

Loss graph looks the same (ignore the exploding loss in the second run, that's another separate issue I guess):

Training time is distinctly different:

The hyperparameters and computer load are seriously exactly the same between runs.
